ChatGPT has experienced remarkable growth since its public release in November 2022. It has become an essential tool for many businesses and individuals, but as the technology becomes increasingly integrated into our daily lives, it’s natural to wonder: is ChatGPT safe to use?

ChatGPT is generally considered safe to use thanks to the numerous security measures, data handling practices, and privacy policies implemented by its developers. However, like any technology, ChatGPT is not immune to security concerns and vulnerabilities.

This article will help you to better understand the safety of ChatGPT and AI language models. We will explore various aspects, such as data confidentiality, user privacy, potential risks, AI regulation, and safety measures.

By the end, you’ll gain a deeper understanding of ChatGPT’s safety and be better equipped to make informed decisions when using this powerful large language model.

Let’s get started!

Is ChatGPT Safe to Use?

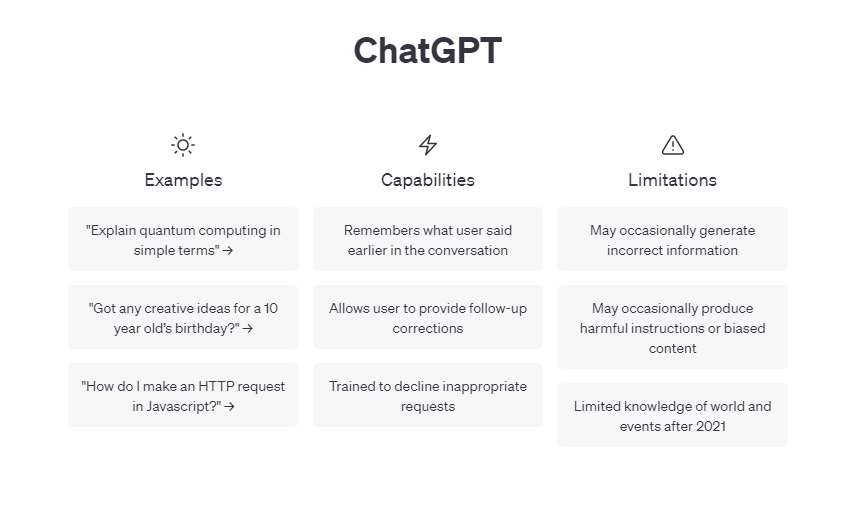

Yes, ChatGPT is generally safe to use. OpenAI developed the AI chatbot and its generative pre-trained transformer (GPT) architecture to safely generate natural, human-like responses and high-quality content.

OpenAI has implemented robust security measures and data handling practices to ensure user safety. Let’s break that down:

1. Security Measures

ChatGPT’s ability to generate natural language responses is undeniably impressive, but how secure is it? Here are some of the measures described on OpenAI’s security page:

Encryption: OpenAI encrypts user data both at rest and in transit to protect it from unauthorized access. In other words, your data is encrypted while it is stored and while it moves between systems.

Access controls: OpenAI implements strict access control mechanisms to ensure that only authorized personnel can access sensitive user data. This includes the use of authentication and authorization protocols, as well as role-based access controls.

External security audits: The OpenAI API is annually audited by an external third party to identify and address potential vulnerabilities in the system. This helps to ensure that security measures remain up-to-date and effective in protecting user data.

Bug bounty program: In addition to the regular audits, OpenAI has created a Bug Bounty Program to encourage ethical hackers, security researchers, and tech enthusiasts to identify and report security vulnerabilities.

Incident response plans: OpenAI has established incident response plans to manage and communicate security breaches effectively, should they occur. These plans help to minimize the impact of any potential breach and ensure a swift resolution.

While specific technical details of OpenAI’s security measures are not publicly disclosed to maintain their effectiveness, these examples show the company’s commitment to protecting user data and keeping ChatGPT safe.

2. Data Handling Practices

OpenAI uses your conversational data to improve ChatGPT’s natural language processing ability. It follows responsible data handling practices to maintain user trust, such as:

Purpose of data collection: Anything you input to ChatGPT is collected and saved on OpenAI servers to refine the system’s natural language processing. OpenAI is transparent about what it collects and why: it uses user data primarily to train and improve its language models and to enhance the overall user experience.

Data storage and retention: OpenAI securely stores user data and adheres to strict data retention policies. Data is retained only for as long as necessary to fulfill its intended purpose. After the retention period, the data is either anonymized or deleted to protect user privacy.

Data sharing and third-party involvement: Your data will be shared with third parties only with your consent or under specific circumstances, such as legal obligations. OpenAI states that third parties involved in data processing must adhere to similar data handling practices and privacy standards.

Compliance with regulations: OpenAI complies with regional data protection regulations in the EU, California, and other places. This compliance ensures that their data handling practices meet the required legal standards for user privacy and data protection.

User rights and control: You can expect that OpenAI will respect your rights concerning your data. The firm provides users with an easy way to access, modify, or delete their personal information.

OpenAI seems committed to protecting user data, but even with these safeguards in place, you should never share sensitive information with ChatGPT because no system can guarantee absolute security.

Lack of confidentiality is a big concern when using ChatGPT, and that’s what we’ll cover in detail in the next section.

Is ChatGPT Confidential?

No, ChatGPT is not confidential. By default, ChatGPT logs every conversation, including any personal data you share, and may use it as training data.

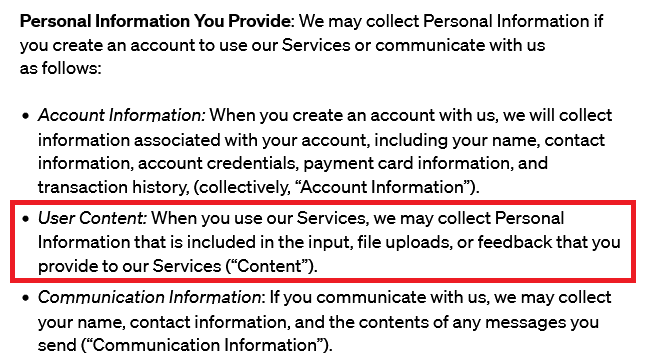

OpenAI’s privacy policy states that the company collects personal information included in the “input, file uploads, or feedback” users provide to ChatGPT and its other services.

The company’s FAQ explicitly states that it will use your conversations to improve its AI language models and that your chats may be reviewed by human AI trainers.

It also states that OpenAI can’t delete specific prompts from your history, and it asks users not to share personal or sensitive information with ChatGPT.

The consequences of oversharing were highlighted in April 2023, when local Korean media reported (NB: the story is in Korean, but you can paste it into, you guessed it, ChatGPT and ask it to translate the story for you) that Samsung employees had leaked sensitive information to ChatGPT on at least three separate occasions.

According to the report, two employees entered sensitive program code into ChatGPT to find fixes and optimize it, and one employee pasted a transcript of a corporate meeting and asked for minutes.

In response to the incidents, Samsung announced that it was developing safety measures to prevent further leaks through ChatGPT and that it might ban ChatGPT from the company network if similar incidents occurred.

The good news is that ChatGPT does provide a way to remove your chats, and you can set it up so that it doesn’t save your history in the first place. We take a look at how in the next two sections.

Steps to Delete Your Chats on ChatGPT

To delete your conversations on ChatGPT, follow these steps.

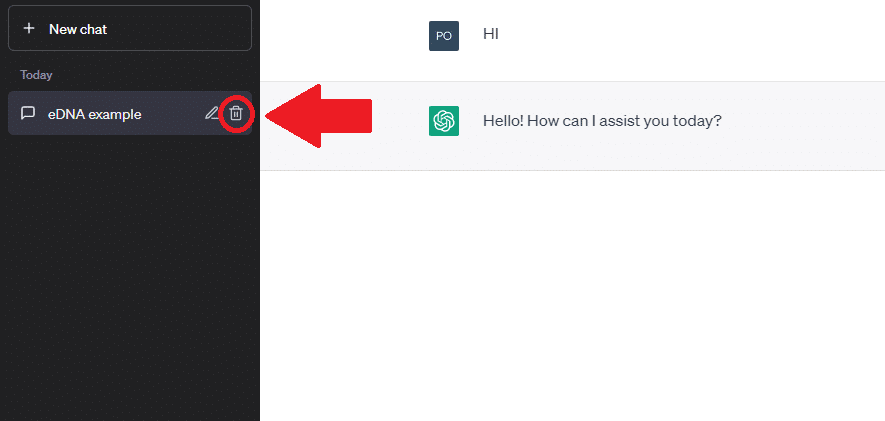

Step 1. Choose the conversation you want to delete from your chat log and click on the bin icon to delete it

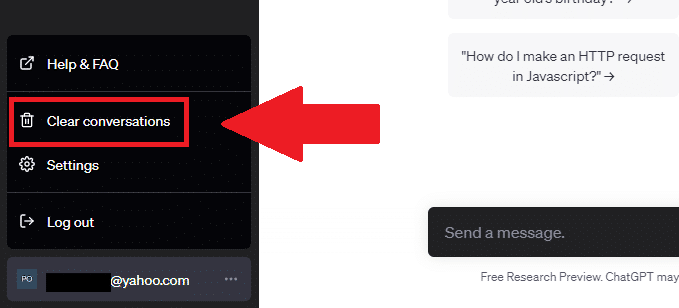

Step 2. To bulk delete your conversations, click on the three dots next to your email address in the bottom-left corner and pick “Clear conversations” from the menu

Voilà! Your chats are no more! You’ll no longer see them, and OpenAI will purge them from its systems within 30 days.

Steps to Stop ChatGPT from Saving Your Chats

If you want to stop ChatGPT from saving your chats by default, follow these steps.

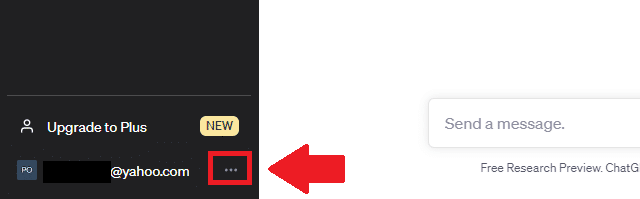

Step 1. Open the settings menu by clicking on the three dots next to your email address

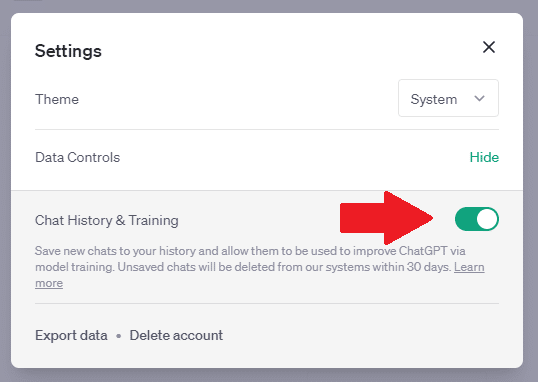

Step 2. Under Data Controls, turn off the toggle for “Chat History & Training”

Once the toggle is off, ChatGPT will no longer save new chats to your history, and it won’t use them for model training. Unsaved conversations are deleted from the system within 30 days.

Now that you know how to delete your chats and stop ChatGPT from saving them by default, let’s take a look at some of the potential risks of using ChatGPT in the next section.

What Are the Potential Risks of Using ChatGPT?

As you evaluate the safety of AI chatbots built on large language models, it’s important to consider the risks that both businesses and individuals may face.

Key risks include data breaches, unauthorized access to confidential information, and biased or inaccurate output.

1. Data Breaches

Data breaches are a potential risk when using any online service, including ChatGPT.

ChatGPT runs on OpenAI’s servers rather than on your device, whether you access it through a web browser or a mobile app, so your conversations are stored remotely. In that context, a data breach could occur if an unauthorized party gains access to your conversation logs, user information, or other sensitive data.

This could have several consequences:

Privacy compromises: If a data breach occurs, your private conversations, personal information, or sensitive data could be exposed to unauthorized individuals or entities, compromising your privacy.

Identity theft: Cybercriminals could potentially use the exposed personal information for identity theft or other fraudulent activities, causing financial and reputational harm to affected users.

Misuse of data: In a data breach, user data could be sold or shared with malicious parties who might use the information for targeted advertising, disinformation campaigns, or other nefarious purposes.

OpenAI seems to take cyber security seriously and has implemented various security measures to minimize the risk of data breaches.

However, no system is completely immune to vulnerabilities, and the reality is that most breaches are caused by human error rather than technical failures.

2. Unauthorized Access of Confidential Information

If employees or individuals enter sensitive business information, such as passwords or trade secrets, into ChatGPT, there is a chance that this data could be intercepted or exploited by bad actors.

To protect yourself and your business, consider coming up with a company-wide policy for the use of generative AI technologies.

Several big companies have already issued warnings to employees. Walmart and Amazon, for example, have reportedly told workers not to share confidential information with AI chatbots. Others, like JPMorgan Chase and Verizon, have restricted or blocked employee use of ChatGPT outright.

3. Biased and Inaccurate Information

Another risk that comes with using ChatGPT is the potential for biased or inaccurate information.

Due to the vast range of data it has been trained on, the AI model might inadvertently generate responses that contain false information or reflect existing biases within the data.

This could cause issues for businesses that rely on AI-generated content for decision-making or communication with customers.

It is up to you to critically evaluate the information provided by ChatGPT to guard against misinformation and prevent the spread of biased content.

As you’ll see in the next section, there are currently no direct regulations in place to minimize the negative impact of generative AI tools like ChatGPT.

Are There Regulations for ChatGPT and Other AI Systems?

There are currently no specific regulations that directly govern ChatGPT or other artificial intelligence systems.

However, AI technologies, including ChatGPT, are subject to existing data protection and privacy regulations in various jurisdictions. Some of these regulations include:

General Data Protection Regulation (GDPR): The GDPR is a comprehensive data protection regulation that applies to organizations operating within the European Union (EU) or processing personal data of EU residents. It addresses data protection, privacy, and the rights of individuals regarding their personal data.

California Consumer Privacy Act (CCPA): The CCPA is a data privacy regulation in California that provides consumers with specific rights concerning their personal information. It requires businesses to disclose their data collection and sharing practices and allows consumers to opt out of the sale of their personal information.

Other regional regulations: Various countries and regions have enacted data protection and privacy laws that may apply to AI systems like ChatGPT. Examples include the Personal Data Protection Act (PDPA) in Singapore and the Lei Geral de Proteção de Dados (LGPD) in Brazil. These laws already have teeth: Italy’s data protection authority temporarily banned ChatGPT in March 2023 over privacy concerns, then lifted the ban about a month later after OpenAI added new privacy controls.

The lack of specific regulations targeting AI systems like ChatGPT may be remedied soon. In April 2023, EU lawmakers reached agreement on a draft of the AI Act, a bill that would require firms developing generative AI technologies like ChatGPT to disclose copyrighted material used in their development.

The proposed legislation would classify AI tools by risk level: minimal, limited, high, or unacceptable.

Key concerns include biometric surveillance, dissemination of misinformation, and discriminatory language. While high-risk tools won’t be prohibited, their usage will demand high levels of transparency.

If passed, the AI Act would become the world’s first comprehensive regulation for artificial intelligence. But until such regulations are in place, it is your responsibility to protect your privacy when using ChatGPT.

In the next section, we take a look at some safety measures and best practices for using ChatGPT.

ChatGPT Safety Measures and Best Practices

OpenAI has implemented several safety measures to protect user data and ensure the AI system’s security, but users should also adopt certain best practices to minimize risks while interacting with ChatGPT.

This section will explore some of the best practices you should follow.

Limit sensitive information: Once again, avoid sharing personal or sensitive information in your conversations with ChatGPT.

Review privacy policies: Before using a ChatGPT-powered app or any service that uses OpenAI language models, carefully review the platform’s privacy policy and data handling practices to gain insight into how your conversations are stored and used by the platform.

Use anonymous or pseudonymous accounts: If possible, use anonymous or pseudonymous accounts when interacting with ChatGPT or products that use the ChatGPT API. This can help minimize the association of conversation data with your real identity.

Monitor data retention policies: Familiarize yourself with the data retention policies of the platform or service you use to understand how long your conversations are stored before they are anonymized or deleted.

Stay informed: Keep up-to-date with any changes to OpenAI’s security measures or privacy policies and adjust your practices accordingly to maintain a high level of safety while using ChatGPT.
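One way to put the first practice into effect, for instance as part of a company-wide AI policy, is to screen text before anyone pastes it into a chatbot. The sketch below is a hypothetical helper, not an OpenAI feature: it assumes Python 3.9+, and its regular expressions (for email addresses, keys starting with "sk-", card-like digit runs, and "password: …" pairs) are illustrative assumptions that will miss many kinds of sensitive data.

```python
import re

# Hypothetical, illustrative patterns only. Real sensitive data (names, source
# code, meeting notes) takes many forms that simple regexes cannot catch.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # Assumed key format: "sk-" followed by at least 16 key characters.
    "API_KEY": re.compile(r"\bsk-[A-Za-z0-9_-]{16,}"),
    # 13 to 16 digits, optionally separated by single spaces or dashes.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # "password: value" or "password=value"; the value stops at whitespace, "," or ";".
    "PASSWORD": re.compile(r"password\s*[:=]\s*[^\s,;]+", re.IGNORECASE),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace likely-sensitive substrings with [LABEL] placeholders.

    Returns the redacted text and the labels that matched, so a caller
    can warn the user before anything is sent to a chatbot.
    """
    found = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


if __name__ == "__main__":
    safe_text, hits = redact(
        "Contact jane.doe@example.com, password: hunter2, card 4111 1111 1111 1111."
    )
    print(safe_text)  # Contact [EMAIL], [PASSWORD], card [CARD].
    print(hits)       # ['EMAIL', 'CARD', 'PASSWORD']
```

Returning the list of matched labels lets a tool block or warn rather than silently rewrite the prompt. A filter like this is a safety net, not a substitute for simply keeping confidential material out of ChatGPT.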

By understanding the safety measures implemented by OpenAI and following these best practices, you can minimize potential risks and enjoy a more secure experience when interacting with ChatGPT!

Our Final Thoughts on Using ChatGPT Safely

Safely using ChatGPT is a shared responsibility between the developers at OpenAI and the users who interact with the AI system. OpenAI has taken significant steps to implement robust security measures, data handling practices, and privacy policies to ensure a secure experience for users.

However, users must also exercise caution and adopt best practices to protect their privacy and personal information while engaging with language models.

By limiting the sharing of sensitive information, reviewing privacy policies, using anonymous accounts, monitoring data retention policies, and staying informed about any changes to security measures, you can enjoy the benefits of ChatGPT while minimizing potential risks.

AI technologies are undoubtedly going to be increasingly integrated into our daily lives, so it’s up to you to prioritize your safety and privacy as you interact with these powerful tools.
