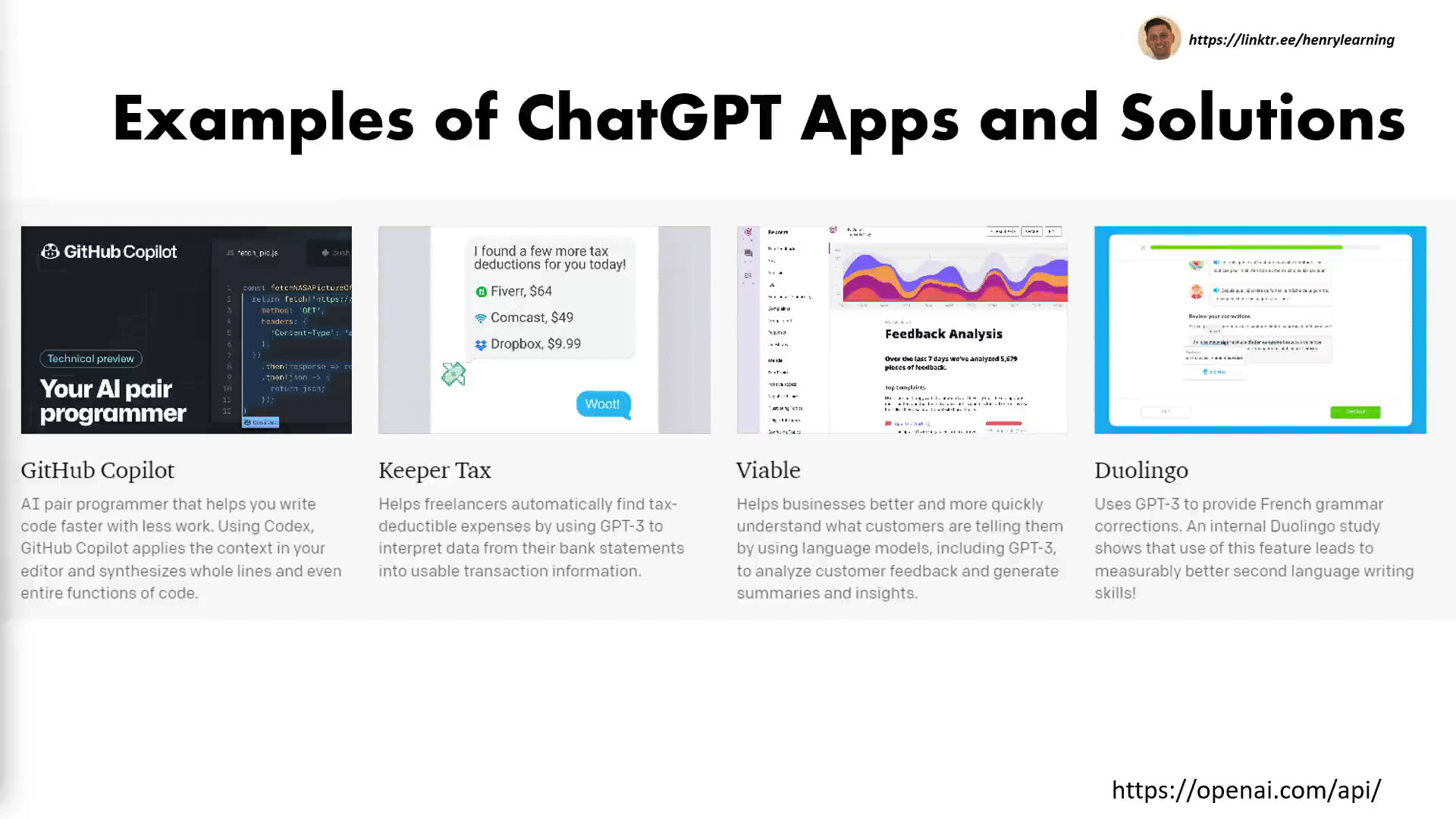

Since its explosive introduction in November 2022, ChatGPT has been added to many apps and services, including Snapchat, Duolingo, Shopify, and more. These integrations are made possible by ChatGPT’s application programming interface (API).

The ChatGPT API is a tool that enables you to integrate the power of ChatGPT into your applications, products, or services. It gives you access to ChatGPT’s ability to generate human-like responses to questions and engage in casual conversation.

In this article, we’re going to cover everything you need to know about the ChatGPT API, including what it can do, how much it costs, how to access it, and some popular use cases.

If you’re excited about the possibilities of the ChatGPT API, then you’re in the right place.

Let’s get started!

What Is the ChatGPT API?

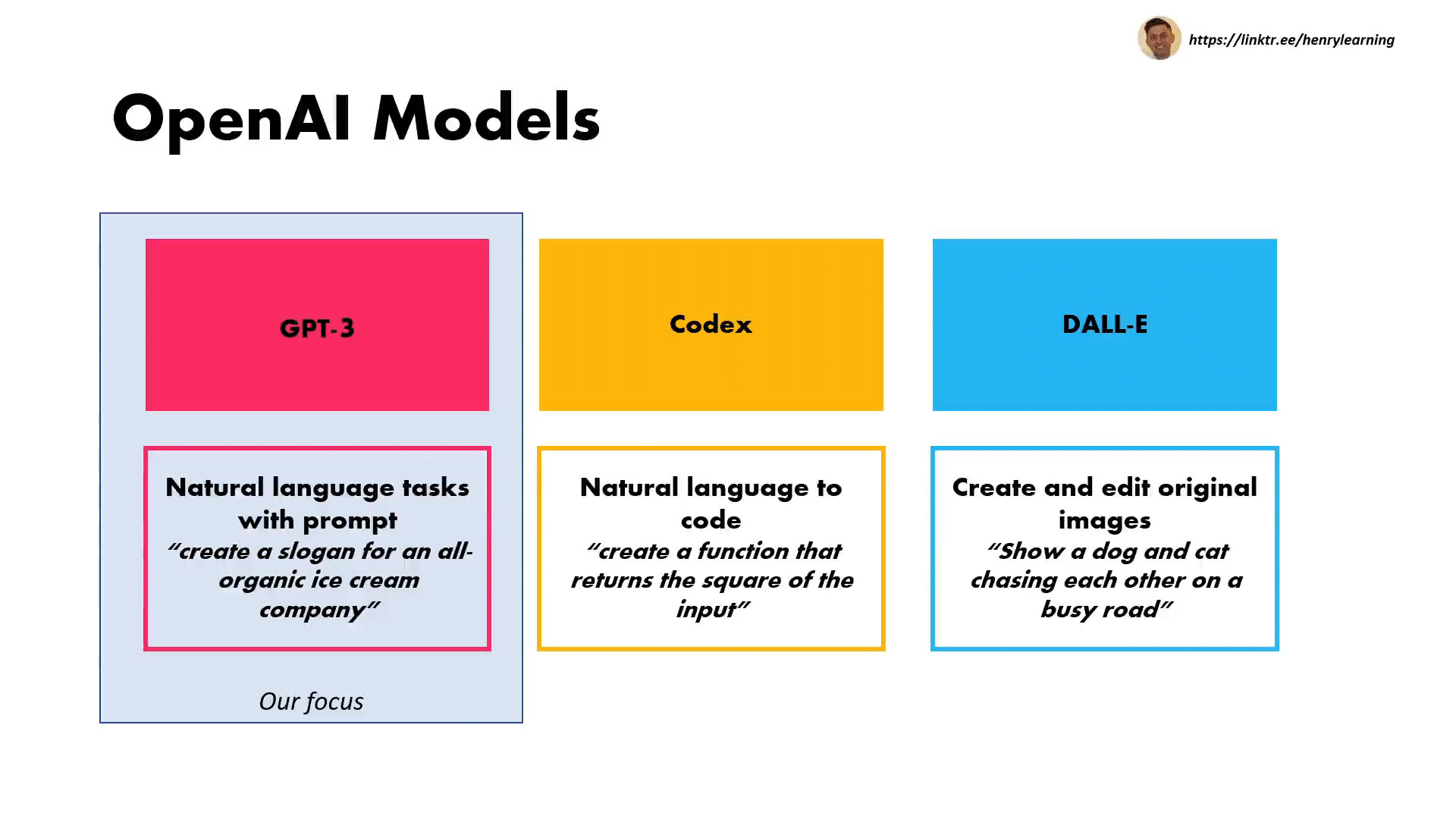

OpenAI, the company behind ChatGPT, has released an API that enables developers to integrate the advanced language processing capabilities of ChatGPT into their apps, products, and services.

ChatGPT is an artificial intelligence (AI) chatbot built on OpenAI’s GPT (Generative Pre-trained Transformer) family of large language models. These models were trained on vast amounts of text data and then fine-tuned by human AI trainers using human feedback.

With the OpenAI API, you can harness ChatGPT’s advanced AI technology to improve user experiences, offer personalized recommendations, and provide interactive assistance that reads like human-generated text.

One popular application of the OpenAI API is the AI shopping assistant, which makes recommendations based on shoppers’ requests.

Shopify, for example, has added ChatGPT to its Shop app so that customers can use prompts to find the items they want.

In a tweet announcing the integration, Shop said the ChatGPT-fueled assistant can “talk products, trends, and maybe even the meaning of life.”

The OpenAI API gives you access to several different GPT models through a single interface.

As a developer, you can call the OpenAI API over HTTP from almost any programming language to build applications that generate text, hold intelligent and engaging conversations with end users, and provide meaningful responses.

How Much Does the ChatGPT API Cost?

OpenAI’s text-davinci-003 model, once the most capable model available through the API, costs $0.02 per 1,000 tokens. A token is a chunk of text, often part of a word, and 1,000 tokens represent roughly 750 words.

In a blog post from March 2023, OpenAI said that thanks to a series of system optimizations, it had reduced the cost of ChatGPT by 90% since December 2022, and it was passing those savings on to API users through the newly released gpt-3.5-turbo model.

The gpt-3.5-turbo model is optimized for dialogue and costs $0.002 per 1,000 tokens, one-tenth the price of text-davinci-003.
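To put those prices in perspective, here’s a small Python sketch that estimates a bill from a word count. It assumes the rough 1,000 tokens ≈ 750 words rule of thumb and the March 2023 prices quoted above; real token counts depend on the text, so treat the result as a ballpark figure rather than a quote.

```python
# Prices in USD per 1,000 tokens, as quoted in this article (March 2023).
# Check OpenAI's pricing page for current rates before relying on them.
PRICE_PER_1K_TOKENS = {
    "text-davinci-003": 0.02,
    "gpt-3.5-turbo": 0.002,
}

WORDS_PER_1K_TOKENS = 750  # rule of thumb: 1,000 tokens ≈ 750 English words


def estimate_tokens(word_count: int) -> int:
    """Roughly convert a word count into a token count."""
    return round(word_count * 1000 / WORDS_PER_1K_TOKENS)


def estimate_cost(model: str, tokens: int) -> float:
    """Approximate cost in USD for processing `tokens` tokens with `model`."""
    return tokens / 1000 * PRICE_PER_1K_TOKENS[model]


if __name__ == "__main__":
    tokens = estimate_tokens(300_000)  # e.g. 300,000 words of traffic a month
    for model in PRICE_PER_1K_TOKENS:
        print(f"{model}: {tokens} tokens ≈ ${estimate_cost(model, tokens):.2f}")
```

For 300,000 words (about 400,000 tokens), this works out to roughly $8.00 with text-davinci-003 versus $0.80 with gpt-3.5-turbo.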

The cost savings mean developers can comfortably integrate the API into their apps even on a budget. It’s a compelling enough reason to explore ChatGPT’s capabilities!

In the next section, we take a look at the different GPT-3 models you can access using the API.

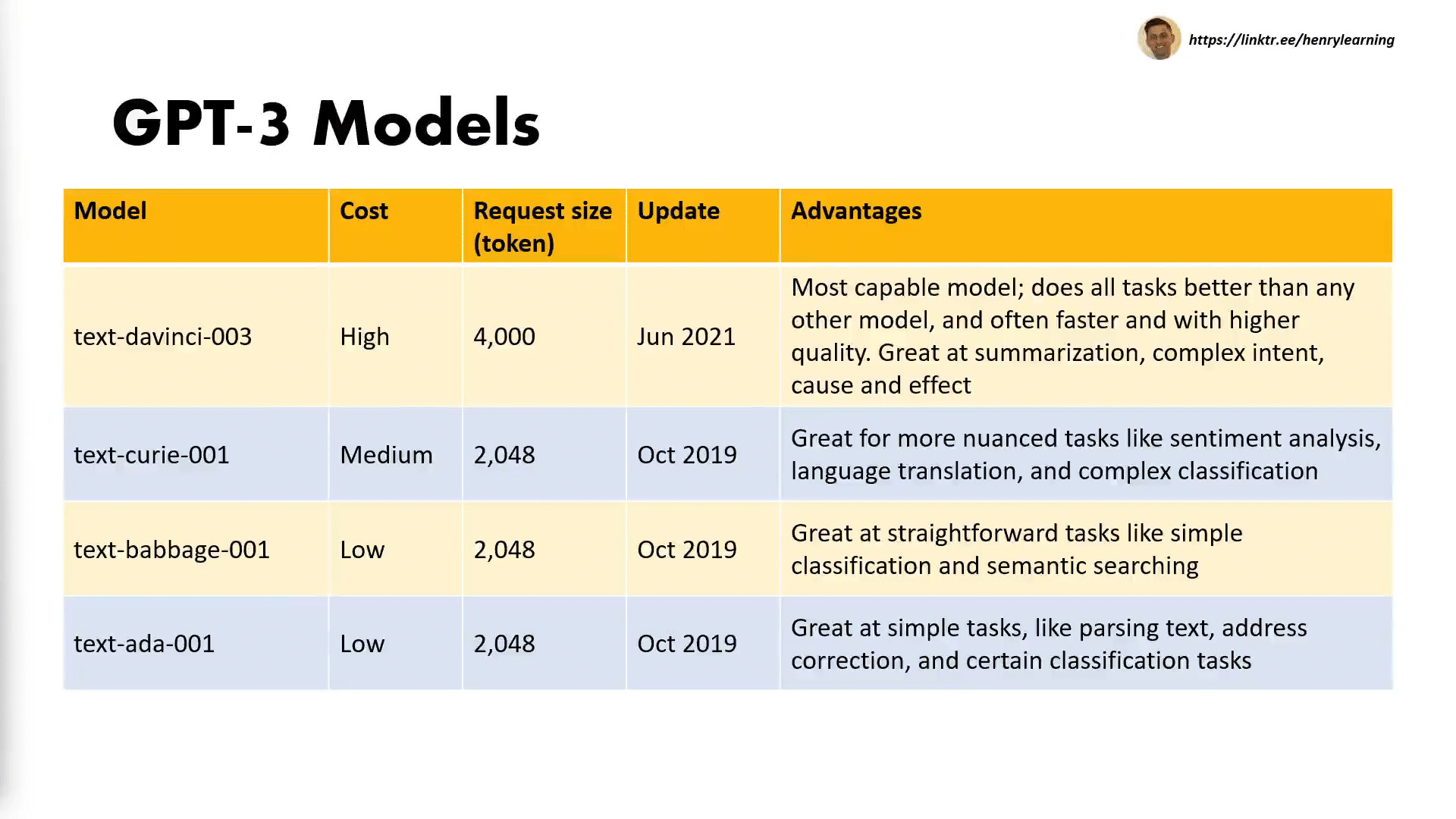

4 GPT-3 Models You Can Access with the OpenAI API

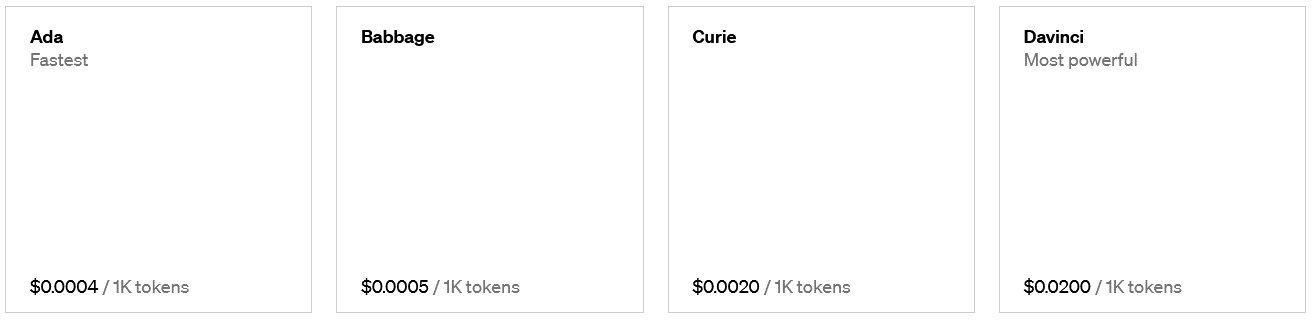

The GPT-3 API is available as four engines (base models): Davinci, Curie, Babbage, and Ada. Each offers a different balance of capability, speed, and price, so you can match the engine to the task.

This section explores the features and strengths of each engine so you can maximize the potential of GPT-3 in your applications.

1. Davinci

The Davinci engine is the most powerful. It’s designed to handle intricate tasks such as:

Crafting imaginative fiction

Composing poetry

Creating screenplays

It can generate highly inventive and subtle responses, making it suitable for tasks demanding an artistic flair, such as marketing content creation.

Best suited for: Tasks like short story writing, poetry composition, or screenplay development.

Cost: $0.02 per 1,000 tokens

2. Curie

The Curie engine is a GPT-3 model that’s very capable, though less powerful than Davinci, and it’s faster and cheaper to run. It’s intended for straightforward and specific tasks like:

Responding to inquiries and user feedback

Delivering customer support

Generating summaries and translations

It excels in offering concise, accurate, and direct answers to questions.

Best suited for: Customer service-related tasks where prompt and precise responses are expected. Curie is also suitable for summarizing text data such as lengthy articles or documents and providing translations.

Cost: $0.002 per 1,000 tokens

3. Babbage

The Babbage engine is a very fast GPT-3 model that’s tailored for straightforward tasks such as:

Simple text classification

Semantic search (ranking how well documents match a search query)

It’s less capable than Curie or Davinci, but its speed and low price make it a good fit for high-volume tasks that don’t need much nuance.

Best suited for: Businesses and developers that need to classify or search large volumes of text quickly and cheaply, such as sorting incoming support tickets by topic.

Cost: $0.0005 per 1,000 tokens

4. Ada

The Ada engine is the fastest and cheapest of the bunch. It’s designed for simple tasks such as:

Parsing text

Simple classification

Address correction and keyword extraction

It handles tasks that don’t require much nuance, and its results can often be improved by giving it more context in the prompt.

Best suited for: High-volume, low-complexity text processing where speed and cost matter more than sophistication.

Cost: $0.0004 per 1,000 tokens

The four GPT-3 models trade capability for speed and price. The Davinci engine excels in more complex tasks requiring creative and nuanced responses, while the Curie engine is a good fit for simpler, direct tasks like answering questions and offering customer support.

The Babbage engine handles straightforward classification and semantic search, while the Ada engine, the fastest and cheapest of the four, is best for simple tasks like parsing text and extracting keywords.
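If you’re choosing between the four engines on price, a quick comparison for the same workload can help. This is a minimal Python sketch using the per-1,000-token prices listed above; the workload numbers (500-token requests, 10,000 per month) are hypothetical.

```python
# Per-1,000-token prices (USD) for the four GPT-3 engines, as listed above.
GPT3_PRICES = {
    "davinci": 0.02,
    "curie": 0.002,
    "babbage": 0.0005,
    "ada": 0.0004,
}


def monthly_cost(tokens_per_request: int, requests_per_month: int) -> dict[str, float]:
    """Estimate the monthly bill for each engine for the same workload."""
    total_tokens = tokens_per_request * requests_per_month
    return {model: total_tokens / 1000 * price for model, price in GPT3_PRICES.items()}


if __name__ == "__main__":
    # Hypothetical workload: 500-token requests, 10,000 times a month.
    costs = monthly_cost(tokens_per_request=500, requests_per_month=10_000)
    for model, cost in sorted(costs.items(), key=lambda item: item[1]):
        print(f"{model:>8}: ${cost:,.2f}")
```

For that workload of 5 million tokens a month, the estimate ranges from $2.00 with Ada to $100.00 with Davinci, so it’s worth testing whether a cheaper engine handles your task well enough.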

Want to see them in action? OpenAI provides a Playground where developers can try different inputs and compare the outputs of different GPT models.

In the next section, we take a look at OpenAI’s most advanced language model yet: GPT-4.

Does GPT-4 Have an API?

Yes, GPT-4 does have an API. GPT-4, released in March 2023, is OpenAI’s most advanced model, and at the time of writing the available API model is gpt-4-0314.

GPT-4 gained a lot of attention because of its enhanced capabilities. The earlier GPT-3 and GPT-3.5 models can sometimes give nonsensical or incorrect answers, and GPT-3 in particular occasionally writes plausible-sounding content that reads like human-generated text but is actually misinformation.

The GPT-4 language model has broader general knowledge and can solve harder problems with greater accuracy. It can also work with much more content at once: up to about 32,000 tokens of context, compared with about 4,000 for gpt-3.5-turbo.

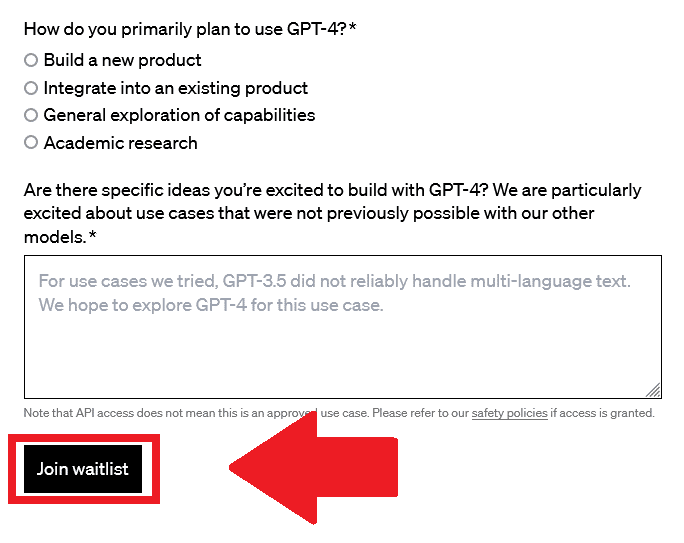

The GPT-4 API is still in a limited beta and isn’t available to the general public. To access the GPT-4 model, you must join a waitlist. Here’s how to do that.

Step 1. Go to the waitlist page at https://openai.com/waitlist/gpt-4-api

Step 2. Explain why you’re interested in GPT-4 and fill in all of the required information accurately

Once you’ve provided all the required information, click the “Join Waitlist” button at the bottom of the page. OpenAI will contact you once your application is approved.

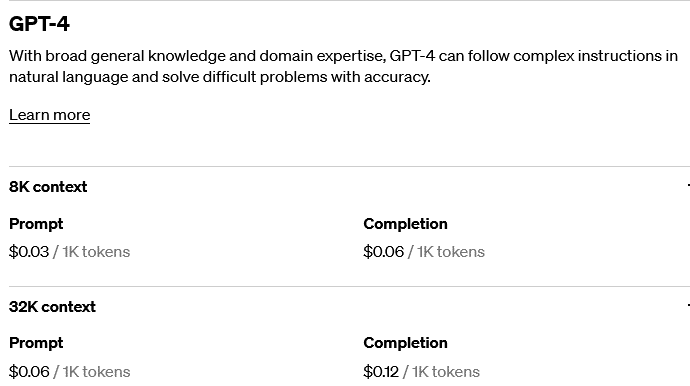

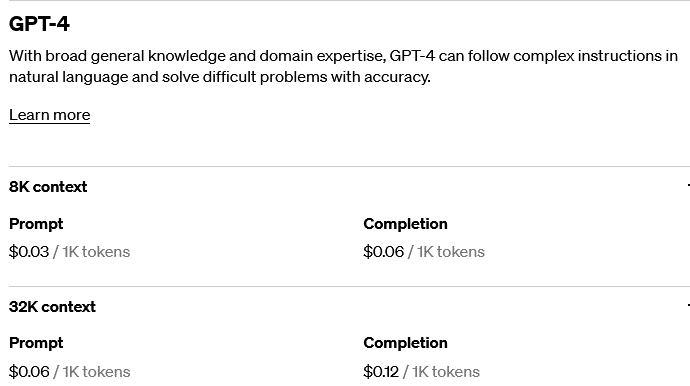

Pricing for the GPT-4 API

The GPT-4 API costs 15 times as much as gpt-3.5-turbo for prompt tokens, and 30 times as much for completion tokens (at the 8K prices below). That makes sense considering GPT-4 is OpenAI’s most advanced model and can handle significantly more context with far more parameters.

Let’s break down what that means!

How Context Size Affects GPT-4 API Pricing

In ChatGPT, the term “context” refers to the previous messages or information that have been exchanged between the model and the user during a conversation.

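ChatGPT relies on the context of the conversation to understand the user’s input and generate relevant responses. Context includes not only the most recent message but also the preceding messages, which help ChatGPT maintain coherence and continuity in the conversation. A model’s context size (or context window) is the maximum number of tokens, covering both the conversation so far and the response, that it can take into account at once.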
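When you use the API rather than the ChatGPT app, the model doesn’t remember earlier requests: your app sends the relevant conversation history with every call, as a list of messages with `system`, `user`, and `assistant` roles. The Python sketch below shows one simple way to keep that history within a token budget by dropping the oldest turns first. It’s an illustration, not an official approach: the `approx_tokens` helper uses the rough 750-words-per-1,000-tokens rule (a real app would count tokens with a tokenizer such as OpenAI’s tiktoken library), and the function names are our own.

```python
import math

# One chat message: {"role": "system" | "user" | "assistant", "content": "..."}
Message = dict[str, str]


def approx_tokens(text: str) -> int:
    """Rough token estimate using the 1,000 tokens ≈ 750 words rule of thumb."""
    return math.ceil(len(text.split()) * 1000 / 750)


def build_context(history: list[Message], new_user_message: str, max_tokens: int) -> list[Message]:
    """Return the system prompt, as many recent turns as fit in max_tokens,
    and the new user message. Assumes history[0] is the system prompt."""
    system, turns = history[0], history[1:]
    new_message = {"role": "user", "content": new_user_message}
    budget = max_tokens - approx_tokens(system["content"]) - approx_tokens(new_user_message)

    kept: list[Message] = []
    for message in reversed(turns):  # walk newest first, so recent turns are kept
        cost = approx_tokens(message["content"])
        if cost > budget:
            break  # stop at the first (oldest remaining) turn that doesn't fit
        kept.append(message)
        budget -= cost
    # kept is newest-first; reverse it to restore chronological order
    return [system, *reversed(kept), new_message]
```

Keeping the system prompt and the newest turns usually preserves the most useful context; more elaborate apps may summarize older turns instead of discarding them.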

For gpt-4-0314, the cost per 1,000 tokens with 8K context (about 8,000 tokens, or roughly 6,000 words) is:

$0.03 for a prompt request (input)

$0.06 for a completion response (output)

The cost per 1,000 tokens with 32K context (about 32,000 tokens, or roughly 24,000 words) is:

$0.06 for a prompt request (input)

$0.12 for a completion response (output)

The 32K version is the right choice for a service that has to handle a lot of text in a single conversation or request, like a platform for generating blog posts.

However, if your service or product is simpler, like an AI assistant that is expected to give short, concise responses, then the 8K version is the better deal.
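Because prompt and completion tokens are priced differently, and both have to fit inside the context window, it can help to estimate a request’s cost before picking a version. Here’s a minimal Python sketch using the prices above. The exact context limits (8,192 and 32,768 tokens) and the 32K model name `gpt-4-32k-0314` aren’t stated in this article; they’re our additions based on OpenAI’s model listings at the time, so check the current documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpt4Variant:
    name: str
    context_tokens: int      # prompt + completion must fit in this window
    prompt_price: float      # USD per 1,000 prompt (input) tokens
    completion_price: float  # USD per 1,000 completion (output) tokens


# Prices from this article; context sizes and the 32K name are assumptions.
VARIANTS = [
    Gpt4Variant("gpt-4-0314", 8_192, 0.03, 0.06),
    Gpt4Variant("gpt-4-32k-0314", 32_768, 0.06, 0.12),
]


def request_cost(variant: Gpt4Variant, prompt_tokens: int, completion_tokens: int) -> float:
    """Cost in USD of one request, billing input and output at their own rates."""
    return (prompt_tokens * variant.prompt_price + completion_tokens * variant.completion_price) / 1000


def cheapest_fit(prompt_tokens: int, completion_tokens: int) -> Gpt4Variant:
    """Pick the cheapest variant whose context window holds prompt + completion."""
    needed = prompt_tokens + completion_tokens
    fitting = [v for v in VARIANTS if v.context_tokens >= needed]
    if not fitting:
        raise ValueError(f"{needed} tokens exceeds every available context window")
    return min(fitting, key=lambda v: request_cost(v, prompt_tokens, completion_tokens))
```

For example, a 1,000-token prompt with a 500-token reply fits in 8K and costs about $0.06, while a 12,000-token prompt with a 2,000-token reply needs the 32K version and costs about $0.96.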

GPT-4 Has More Parameters

In this context, the number of “parameters” reflects the size and complexity of the artificial neural network that underlies a GPT model.

Parameters are the adjustable elements of the neural network that enable it to learn patterns and generate human-like text. They are numerical values that are adjusted during the training process as the model learns to generate text based on the input data it receives.
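To make the idea concrete, here’s a toy Python example that counts the parameters of a tiny fully connected network: each connection between neurons has a weight, each neuron has a bias, and training adjusts all of them. GPT models use a transformer architecture rather than this simple network, so this only illustrates what is being counted, not how GPT-4 is built.

```python
def dense_layer_parameters(inputs: int, outputs: int) -> int:
    """A fully connected layer has one weight per input-output connection
    plus one bias per output neuron; training adjusts all of them."""
    return inputs * outputs + outputs


def network_parameters(layer_sizes: list[int]) -> int:
    """Total trainable parameters in a stack of fully connected layers."""
    return sum(dense_layer_parameters(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


# Toy network: 4 inputs -> 8 hidden neurons -> 2 outputs
print(network_parameters([4, 8, 2]))  # 58 = (4*8 + 8) + (8*2 + 2)
```

Stack many far wider layers (plus the attention and embedding weights a transformer adds) and the count quickly climbs into the billions.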

The more parameters a model has, the more complex and powerful it tends to be. GPT-3, for example, has 175 billion parameters and is capable of learning and representing a vast amount of information.

OpenAI hasn’t disclosed how many parameters GPT-4 has, but it is rumored to have around 1 trillion.

A larger number of parameters generally leads to better performance and more accurate text generation, as the model has more capacity to learn and represent complex relationships in the data.

However, as the number of parameters increases, so do the computational requirements for training and running the model, making it more resource-intensive. That’s reflected in the GPT-4 API’s base price, which is 15 times that of gpt-3.5-turbo.

Now that we’ve covered the various API models available from OpenAI, let’s take a look at how you can generate a ChatGPT API key in the next section.

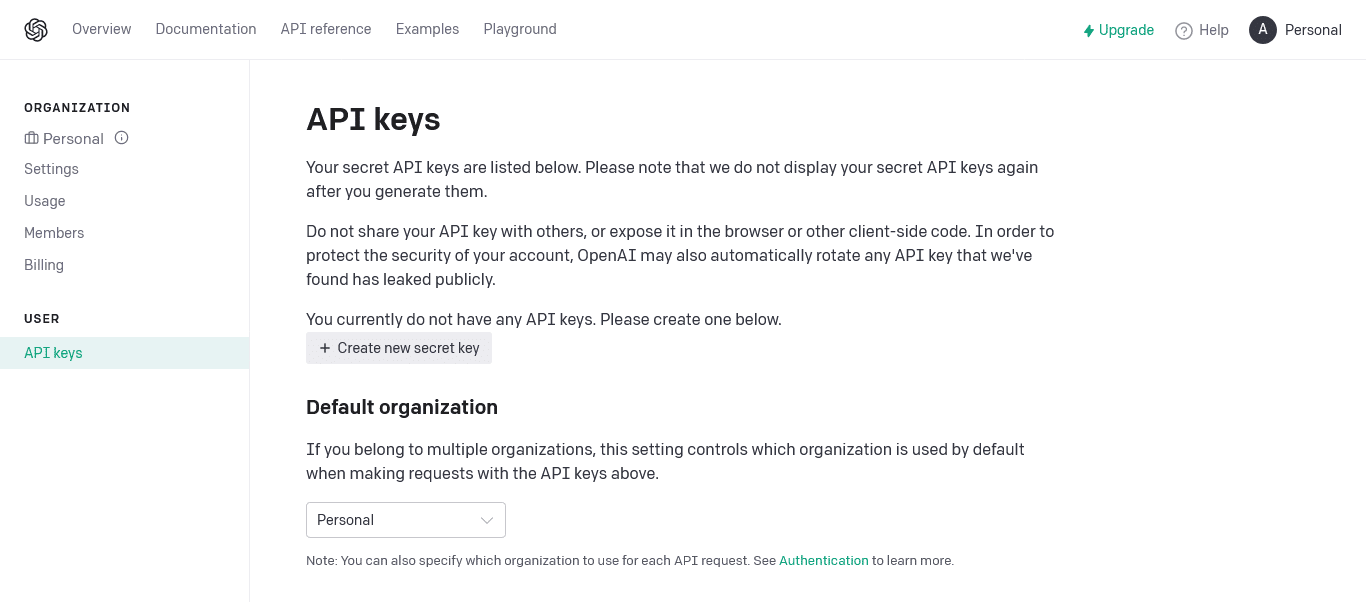

How Can I Get an API Key for ChatGPT?

In this section, you’ll learn how to get started with OpenAI’s API. Follow the steps carefully to ensure seamless integration of ChatGPT into your application.

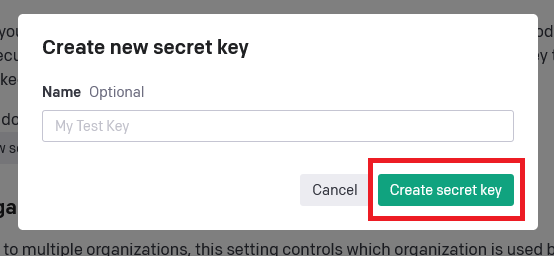

Step 1. Sign in to your OpenAI account, go to the API keys page, and click the “Create new secret key” button

Step 2. In the dialog window, enter a name for your new key, then click “Create secret key”

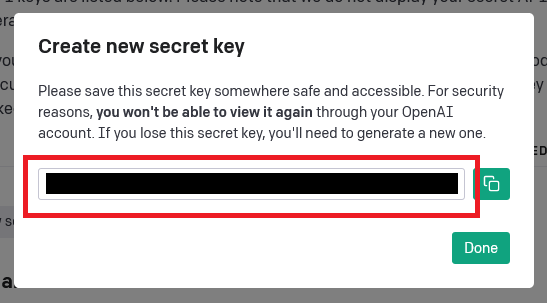

Step 3. Copy and save the generated API key somewhere secure

Note: Once generated, you won’t be able to view your API key again through your OpenAI account, so make sure to save it somewhere safe and secure.

The API key is what your app or service uses to authenticate its requests to OpenAI’s servers. If you’d like to learn more about how to use the ChatGPT API, check out the Basics of the ChatGPT API course on Enterprise DNA.
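Here’s a minimal sketch of how an app might use the key, written in Python with only the standard library. It sends a request to OpenAI’s Chat Completions endpoint (`https://api.openai.com/v1/chat/completions`) with the key in an `Authorization: Bearer` header. Reading the key from an `OPENAI_API_KEY` environment variable is a common convention rather than a requirement, and the system prompt and model choice are illustrative; OpenAI’s official client libraries wrap the same request for you.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, user_message: str, model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Prepare (but don't send) a Chat Completions request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_message},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # the secret key from Step 3
        },
        method="POST",
    )


def ask(user_message: str) -> str:
    """Send the request and return the assistant's reply text."""
    api_key = os.environ["OPENAI_API_KEY"]  # keep the key out of your source code
    with urllib.request.urlopen(build_chat_request(api_key, user_message)) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

With `OPENAI_API_KEY` set in your environment, calling `ask("Suggest a name for a coffee shop.")` returns the model’s reply as a string. Keeping request building separate from sending makes the request easy to inspect or test without spending tokens.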

Next, we’re going to take a look at some popular use cases for the ChatGPT API.

Top 4 Use Cases of the ChatGPT API

The ChatGPT API offers a wide range of use cases across various industries. Here are a few examples of how businesses and developers can leverage this technology:

Customer Support: Enhance your helpdesk or customer service platform by integrating the ChatGPT API to create chatbot applications. Your AI assistant can understand and respond to user queries, reducing response times and increasing customer satisfaction.

Content Generation: Use the API to draft blog posts, social media content, and other written materials. It can help you create engaging content by offering suggestions or completing sentences in a human-like manner.

Entity Extraction: Streamline data processing tasks with the API, as it can recognize and extract entities from text. This feature can help you organize and categorize information more efficiently.

Natural Language Interfaces: Enhance your applications, services, or websites with a conversational interface powered by the ChatGPT API. Users can interact with your product using natural language and receive model-written suggestions that make their experience more intuitive and enjoyable.
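As an example of the entity extraction use case, one common pattern is to ask the model to reply in JSON and then validate what comes back, since the model may not always follow the requested format. In this Python sketch, the prompt wording, the JSON keys, and the function names are our own illustrative choices; the messages would be sent in a Chat Completions request like any other.

```python
import json

ENTITY_KEYS = ("people", "organizations", "locations")

EXTRACTION_PROMPT = (
    "Extract every person, organization, and location from the text below. "
    'Reply with JSON only, in the form {"people": [], "organizations": [], "locations": []}.\n\n'
    "Text: "
)


def build_extraction_messages(text: str) -> list[dict[str, str]]:
    """Messages for a Chat Completions request that asks for entities as JSON."""
    return [{"role": "user", "content": EXTRACTION_PROMPT + text}]


def parse_entities(reply: str) -> dict[str, list[str]]:
    """Parse the model's reply, falling back to empty lists if it isn't valid JSON."""
    empty = {key: [] for key in ENTITY_KEYS}
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return empty
    if not isinstance(data, dict):
        return empty
    result = {}
    for key in ENTITY_KEYS:
        values = data.get(key, [])
        # Keep only list values; anything else is treated as "no entities found".
        result[key] = [str(v) for v in values] if isinstance(values, list) else []
    return result
```

Validating the reply this way keeps a malformed answer from breaking the rest of your data pipeline.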

Don’t let the examples above limit you! You’re free to explore other innovative ways to use the ChatGPT API in your specific industry or application.

The Bottom Line on the ChatGPT API

The ChatGPT API is a powerful tool that has the potential to transform the way you build and integrate AI-powered language processing into your applications and AI systems.

By wielding the power of ChatGPT, you can create more advanced and sophisticated solutions across a wide range of use cases, from chatbots and virtual assistants to sentiment analysis and content generation. The API is a versatile tool for interacting with ChatGPT’s various models.

With its different models and flexible pricing options, the API is accessible to developers of all levels and industries. You can get started right away by acquiring an API key from your OpenAI account.

Whether you are a seasoned developer or just starting out, the ChatGPT API has something to offer. So go ahead, unleash your creativity, and let ChatGPT do the heavy lifting for you!

If you’d like to learn more about how to integrate ChatGPT into applications, check out our latest course, Basics of the ChatGPT API.