Are you still copying and pasting when you need to bring data from a website to Excel?

Well, you don’t have to do that anymore!

This article will show you easy and accurate methods for importing website data into Excel.

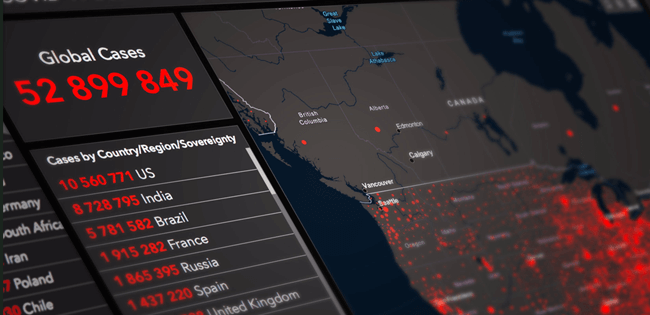

Yes, Excel can pull data from a website. The easiest way is to use Power Query, a tool built into Excel 2016 and later that lets you connect to many kinds of data sources, such as websites, databases, and online services. Once you’re connected, you can pull the external data into your workbook.

Beyond Power Query, there are third-party tools such as Octoparse that pull data from websites into Excel without any coding.

Alternatively, if you’re comfortable with coding, you can write VBA code to scrape the data.

Whichever method you choose, importing data from websites into Excel can be a real time-saver, freeing you to concentrate on analyzing the data and making informed decisions.

Now, let’s run through the process.

How to Load Website Data into Excel?

To load data from a web page into Excel, you can use the web data extraction methods below.

Using Power Query

Using Excel VBA

Using Web Scraping Tools

Now, let’s explore how to use the above methods for scraping data from web pages.

1. Using Power Query to Import Data

In this section, we’ll discuss the steps to use the Power Query tool in Excel.

Step 1

Open a new Microsoft Excel workbook on your computer.

Step 2

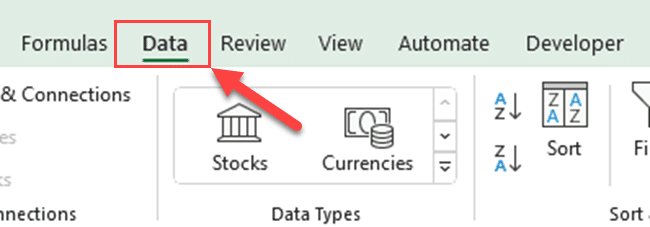

Go to the “Data” tab.

Step 3

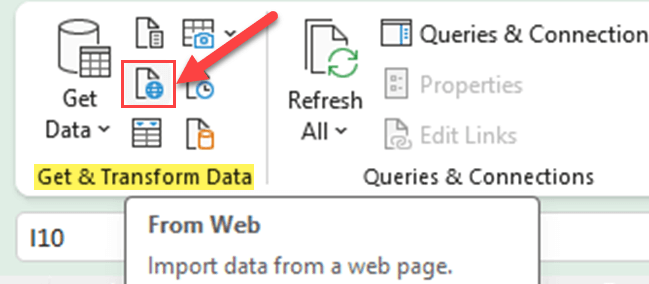

Go to the “Get & Transform Data” group and click “From Web”.

Step 4

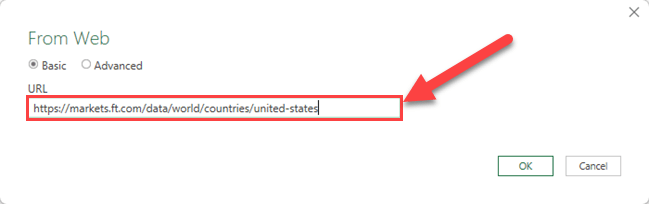

A new dialog box will appear, asking you to enter the web address (URL) of the website you’d like to extract data from.

Type or paste the relevant URL into the text field, and then click “OK”.

Step 5

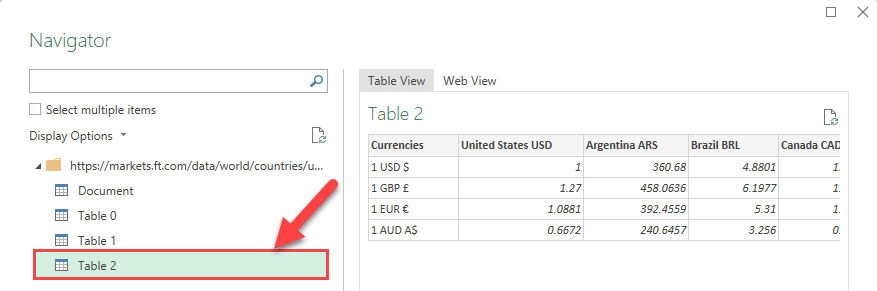

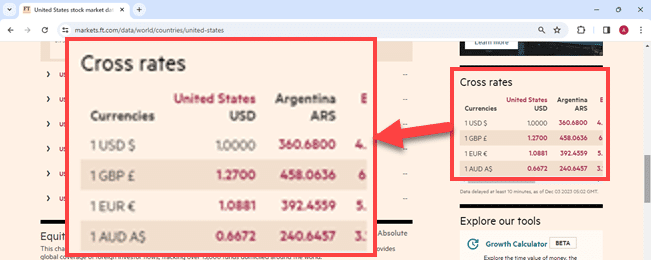

Excel opens the Navigator window, which lists the tables and other elements it has identified on the specified web page.

Go through the available tables and elements, selecting the ones you’d like to load into Excel.

Step 6

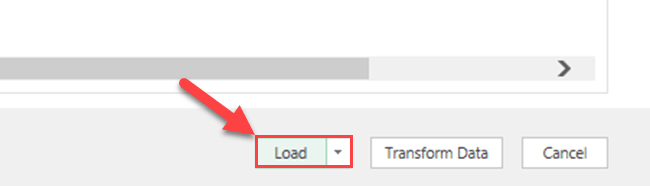

Once you select the desired table, click “Load”.

Excel then loads the selected table from the web page into your workbook.

By following these steps, you can load website data into your Excel workbook.

This method allows you to present and analyze web data more efficiently, enhancing the productivity of your overall data analysis.
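Behind the scenes, Excel has to find the tables in the page’s HTML before it can offer them to you. The Python sketch below only illustrates that idea; it is not how Power Query is actually implemented. It uses the standard library’s `html.parser` to collect the text of every `<table>` into rows of cells. The `extract_tables` function and the sample HTML are hypothetical, and the parser assumes well-formed markup with explicit closing tags and no nested tables.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect the text of every <table> on a page as a list of rows."""

    def __init__(self):
        super().__init__()
        self.tables = []   # one entry per <table>, each a list of rows
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None and self._row is not None:
            # Join the cell's text fragments and trim surrounding whitespace.
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None
            self._cell = None

    def handle_data(self, data):
        # Only text inside a <td> or <th> is kept.
        if self._cell is not None:
            self._cell.append(data)


def extract_tables(html):
    """Return every table in `html` as a list of rows (lists of cell strings)."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    return parser.tables


if __name__ == "__main__":
    sample = """
    <table>
      <tr><th>Product</th><th>Price</th></tr>
      <tr><td>Widget</td><td>9.99</td></tr>
    </table>
    """
    for number, table in enumerate(extract_tables(sample), start=1):
        print(f"Table {number}: {len(table)} rows")
        for row in table:
            print(row)
```

Each inner list corresponds to one row of a table, which is roughly what you see previewed when you click a table in the Navigator.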

Regular Updating of Web Data

Keeping web data up to date in your Excel worksheets is crucial for tracking and analyzing the information effectively. Fortunately, Excel offers several ways to update data from websites regularly without repeating the whole import process.

To keep the data current, you can use either of the methods below.

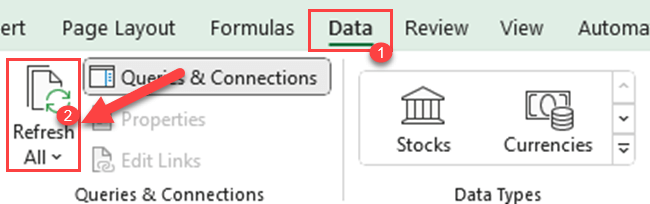

Use the “Refresh All” command

Set automatic updates within fixed time intervals

Use the “Refresh All” Command

This command can be accessed through the Data tab by clicking on the “Refresh All” button in the “Queries & Connections” group, or by using the keyboard shortcut Ctrl + Alt + F5.

Set Automatic Updates Within Fixed Time Intervals

You can also have Excel refresh a query automatically at a fixed interval.

To do this, follow these steps:

Step 1

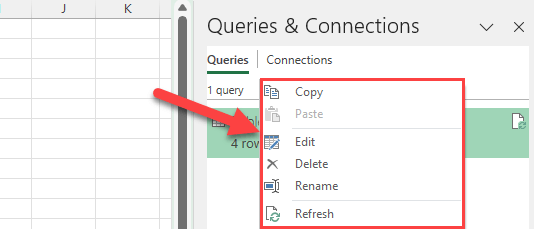

Right-click the query in the Queries & Connections pane (labeled Workbook Queries in some older versions of Excel).

Step 2

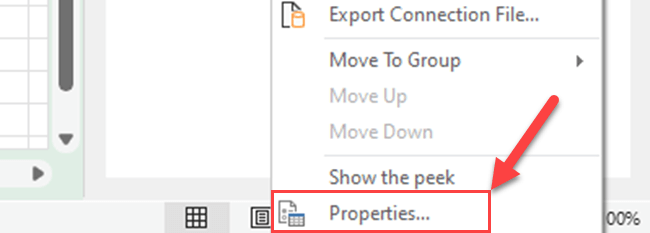

Choose “Properties” from the context menu.

Step 3

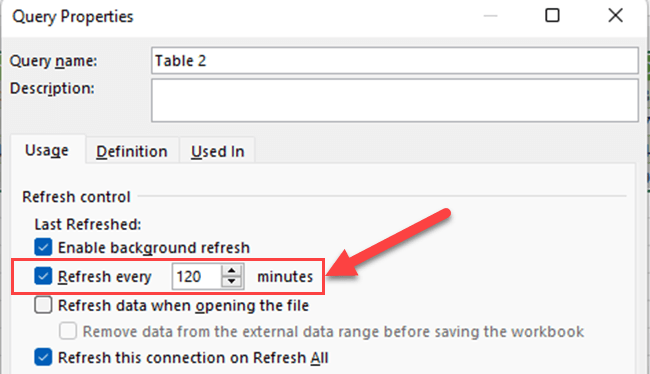

In the “Query Properties” dialog box, check the box that says “Refresh every”.

Then, set the desired time interval (in minutes) for automatic updates.

Note that timed refreshes only run while the workbook is open in Excel. As long as it stays open, the data updates at the chosen interval without any manual intervention.

By implementing these updating methods, you ensure your Excel worksheet stays current with the latest web data, enabling you to make informed decisions and manage the information efficiently.

Working with Secure Websites

When working with secure websites, you need to consider the authentication process before you can pull data into Excel. Many websites require login credentials or use other security measures to protect their data.

The good news is that Excel has tools and features to help you access and extract data from such sites.

If the website requires authentication, you’ll need to provide your login credentials to proceed.

If the From Web feature has difficulty accessing a password-protected site, you can use the legacy “From Web” import instead, which can pull data without asking for credentials each time. This involves enabling the legacy data import wizards (File > Options > Data) and saving a macro-enabled .xlsm template for easy reuse.

Remember, when extracting data from secure websites, respecting privacy and data protection laws is important. Ensure you have the appropriate permissions to access and use the data in question.

With these points in mind, you can confidently and ethically extract data from secure websites directly into Excel.
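To make “providing your login credentials” a little more concrete: if a site uses HTTP Basic authentication (one of the credential types Excel’s web connector can prompt for), the user name and password travel in a single `Authorization` header. The Python sketch below, assuming a site that accepts Basic authentication, only builds such a request with the standard library’s `urllib.request` and deliberately does not send it. The URL, user name, password, and the `build_basic_auth_request` name are placeholders. Basic credentials are only encoded, not encrypted, so they should only ever be sent over HTTPS; sites with form-based or token-based logins work differently and are not covered here.

```python
import base64
import urllib.request


def build_basic_auth_request(url, username, password):
    """Build (but do not send) a GET request carrying HTTP Basic credentials."""
    # Basic auth is just "username:password", base64-encoded, in one header.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(url)
    request.add_header("Authorization", f"Basic {token}")
    return request


if __name__ == "__main__":
    # Placeholder URL and credentials for illustration only.
    req = build_basic_auth_request("https://example.com/report", "alice", "s3cret")
    print(req.get_method(), req.full_url)
    print(req.get_header("Authorization"))
    # Sending it would be: urllib.request.urlopen(req).read()
```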

Now, let’s look at how we could use VBA.

2. Using Excel VBA

Visual Basic for Applications (VBA) allows you to pull data from a website directly into Excel. It is an integral part of the Microsoft Office suite and offers a helpful means of automating various tasks, such as data extraction from websites.

To retrieve data from a website using VBA, you can use either of two approaches:

Web queries

Browser automation techniques

Web queries are a built-in Excel feature that allows you to import data from a website’s table into your spreadsheet. This method, however, has limitations when it comes to handling sites with multiple pages, complex structures, or the need to enter login credentials.

On the other hand, browser automation techniques give you more power and flexibility.

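By leveraging VBA’s ability to control a web browser (such as Internet Explorer), you can navigate websites, interact with page elements, and extract the data you need. Note that Microsoft has retired the Internet Explorer desktop app, so IE-based automation may not work on newer Windows setups.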

This approach is beneficial when working with dynamic websites that involve login procedures or require user interaction.

Here’s a step-by-step guide on how you can use VBA to pull data from a website:

Step 1

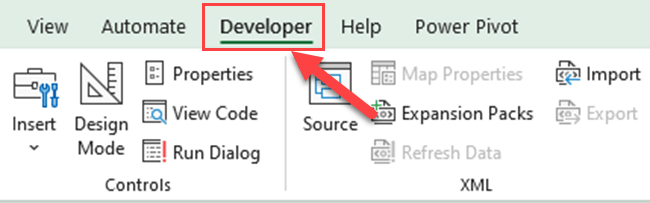

Go to the Developer tab in Excel. If it isn’t visible on your Ribbon, enable it first under File > Options > Customize Ribbon.

Step 2

Open the VBA editor. Press ALT + F11 to open the Visual Basic for Applications editor.

Step 3

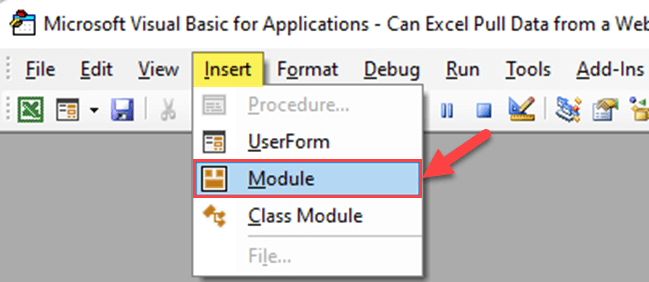

Click “Insert” in the menu, and choose “Module” to insert a new module.

Step 4

Write the VBA code that automates the browser and extracts the website data. This typically includes creating an instance of Internet Explorer, navigating to the target website (the data source), logging in if needed, and then locating and scraping the required data.

Step 5

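Set up the environment your VBA code depends on. In practice, it’s best to do this before writing the code in Step 4, because object types such as InternetExplorer and HTMLDocument come from these libraries.

If the Visual Basic window isn’t already open, press ALT + F11.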

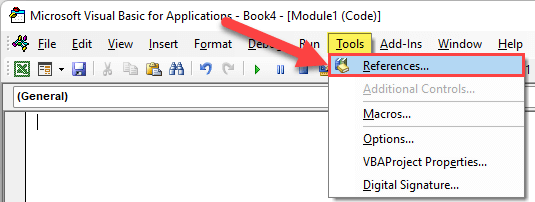

Go to the toolbar at the top and click on Tools > References.

In the “References – VBAProject” dialog box, enable the necessary libraries for web scraping, such as Microsoft HTML Object Library and Microsoft Internet Controls.

Step 6

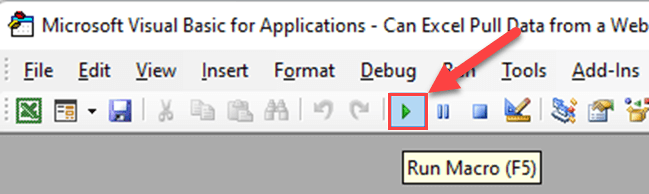

Once your code is ready, press F5 or click on the “Run” button to execute the VBA script.

VBA lets you harness automation for effective data extraction from a wide range of websites.
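Step 4 is where the actual extraction happens. With the Microsoft HTML Object Library, that often means asking the loaded page for elements with a particular class (for example via `getElementsByClassName`) and reading each element’s text. As a language-neutral illustration of that idea, and not VBA or the article’s own code, here is a Python sketch using the standard library’s `html.parser`. The `price` class, the sample markup, and the `texts_by_class` helper are hypothetical, and the sketch assumes well-formed HTML with properly closed elements.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element whose class attribute contains `target_class`."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.results = []
        self._depth = 0    # > 0 while we are inside a matching element
        self._buffer = []  # text fragments of the current match

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._depth:
            # A child of the matching element: track nesting so we know when it ends.
            self._depth += 1
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:
            # Collapse runs of whitespace, roughly like reading an element's visible text.
            self.results.append(" ".join("".join(self._buffer).split()))

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def texts_by_class(html, class_name):
    """Return the text of each element in `html` that has the given class."""
    parser = ClassTextExtractor(class_name)
    parser.feed(html)
    parser.close()
    return parser.results


if __name__ == "__main__":
    page = ('<ul><li class="price">$9.99</li>'
            '<li class="price sale">$4.<b>50</b></li>'
            '<li>n/a</li></ul>')
    print(texts_by_class(page, "price"))  # prints ['$9.99', '$4.50']
```

Matching on one class name out of a space-separated list mirrors how class lookups behave in the browser, so an element with `class="price sale"` is still found.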

3. Using Web Scraping Tools

Web scraping tools offer another way to extract data from websites and bring it into Excel.

These tools automate most of the scraping process, so you can pull the information you need with little or no coding.

Octoparse, ParseHub, and Scraper API are examples of tools that simplify extracting data and exporting it to Excel.

By using these tools, you can efficiently pull data from websites without the need for manual copying and pasting. This saves you time and effort, allowing you to focus on analyzing the data and making informed decisions.

Final Thoughts

Now that you’re familiar with the methods to extract data from websites and set up scheduled scraping in your Excel sheet, you can carry out your data scraping projects more accurately.

Apply these techniques to streamline your data-gathering process and enhance the precision of your projects. Good luck with your data-scraping endeavors!


Frequently Asked Questions

How to use Excel web query for data extraction?

To use Excel web query for data extraction, follow these steps:

Open Excel and go to the “Data” tab.

Click “From Web” in the “Get & Transform Data” group.

Paste the URL of the website you want to extract data from into the URL field.

Click “OK” to let Excel read the data available on the webpage.

In the Navigator window, select the tables or elements you want to import (tick “Select multiple items” to choose more than one).

Click “Load” to import the selected data into your Excel spreadsheet.

What are the best free tools for website data extraction to Excel?

Some popular tools for extracting website data to Excel (several offer free plans or trials) include:

Octoparse

Import.io

ParseHub

WebHarvy

Data Miner (Chrome Extension)

These tools come with user-friendly interfaces and support multiple export formats, including Excel.

How to extract data from a website on Excel for Mac users?

For Mac users, follow these steps to use Excel web query for data extraction:

Open Excel and go to the “Data” tab.

Click “New Query” and choose “From Other Sources” followed by “From Web”.

Paste the URL of the website you want to extract data from and click “OK”.

Choose the required data or tables and click “Load” to import them into your spreadsheet.

Can Google Sheets pull data from a website like Excel?

Yes, Google Sheets can pull data from a website using the IMPORTHTML, IMPORTXML, and IMPORTDATA functions. These functions allow you to import data from websites directly into your Google Sheets document.

What is the role of Chrome extensions in website data extraction to Excel?

Chrome extensions, such as Data Miner and Web Scraper, simplify the process of extracting data from websites. They allow users to collect and organize data by selecting elements on a webpage. The extracted data can then be downloaded in various formats, including Excel, for further analysis or sharing.

How does Python help in extracting data from websites to Excel?

Python, with its libraries like Beautiful Soup, Requests, and Pandas, can efficiently extract and manipulate data from websites. The extracted data can be structured and processed in Python and then exported to an Excel file using the Pandas library. This method offers advanced customization and automation in data extraction, giving you the ability to scrape and organize large amounts of data from websites.
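The export step can be as simple as writing a CSV file that Excel opens directly. The sketch below swaps in Python’s standard-library `csv` module in place of pandas, so it runs without extra installs. `write_rows_for_excel` is a hypothetical helper, and the rows would come from whatever parser (Beautiful Soup or otherwise) you used to extract them.

```python
import csv
from pathlib import Path


def write_rows_for_excel(rows, path):
    """Write rows (lists of cell values) to a CSV file that Excel can open directly."""
    path = Path(path)
    # "utf-8-sig" adds a byte-order mark so Excel recognises the file as UTF-8,
    # which keeps accented characters intact; newline="" lets csv manage line endings.
    with path.open("w", encoding="utf-8-sig", newline="") as handle:
        csv.writer(handle).writerows(rows)
    return path
```

For example, `write_rows_for_excel([["Product", "Price"], ["Widget", "9.99"]], "scraped.csv")` creates a file you can open in Excel or bring in with Data > From Text/CSV. If pandas and the openpyxl package are installed, `DataFrame.to_excel("scraped.xlsx", index=False)` writes a native .xlsx file instead.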