Ever since its inception in the 1940s, the concept of artificial neural networks has captured the imagination of scientists, engineers, and tech enthusiasts alike. These networks, modeled after the human brain, have evolved over the years to become a fundamental building block of modern artificial intelligence.

A neural network is a computational model loosely inspired by the way the human brain works. It is composed of a large number of highly interconnected processing elements, called neurons, working in unison to solve specific problems. Just as the human brain learns from experience, neural networks can be trained to learn from data.

There are different types of neural networks, each tailored to specific tasks, such as image recognition, language processing, or financial forecasting. These networks have revolutionized many industries, including healthcare, finance, and transportation.

This article will dive into the fascinating world of neural networks, explore how they work, and examine their practical applications in the real world.

Let’s get into it!

Understanding The Basics Of A Neural Network

Before we can begin to comprehend the inner workings of a neural network, we must first understand the fundamental building blocks that make it up.

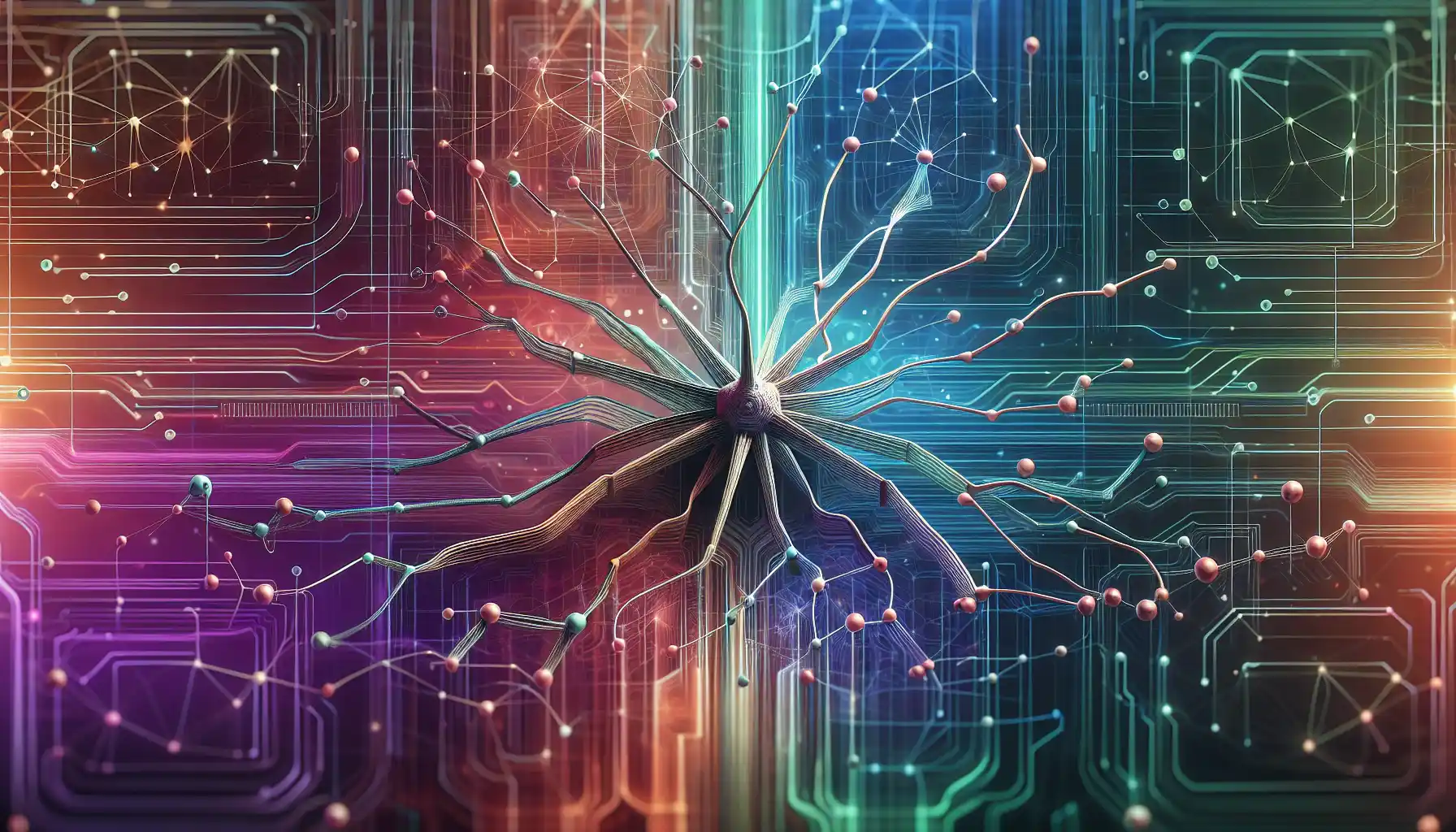

The neural network is composed of basic units called neurons. These artificial neurons are organized into layers, with each layer responsible for specific computational tasks.

A simple neural network is shown in the image below:

Layers of a Neural Network

There are typically three types of layers in a neural network:

- Input Layer: This is the first layer of the network, where data is fed into the system. It could be an image, a piece of text, or any other form of data. The number of neurons in the input layer depends on the number of features in the input data.

- Hidden Layers: These are intermediate layers between the input and output layers. Hidden layers are where the magic happens in a neural network. They perform complex computations to extract patterns and features from the input data. The number of hidden layers and the number of neurons in each hidden layer can vary, depending on the complexity of the problem.

- Output Layer: This is the final layer of the network, where the results are obtained. The number of neurons in the output layer depends on the type of problem the network is solving. For example, if it’s a binary classification problem, the output layer will have one neuron (representing the probability of one class), and if it’s a multi-class classification problem, the output layer will have multiple neurons (each representing the probability of a different class).
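To make the three layer types concrete, here is a minimal sketch of data flowing through them in plain Python. The layer sizes (4 input features, 5 hidden neurons, 1 output neuron), the random initialization, and the helper names `dense` and `init_layer` are all assumptions chosen for illustration, not something prescribed by this article.

```python
import math
import random

def dense(inputs, weights, biases):
    """One fully connected layer: each neuron computes a weighted sum plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (an illustrative initialization)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

rng = random.Random(0)
n_features, n_hidden, n_outputs = 4, 5, 1  # hypothetical sizes

w_hidden, b_hidden = init_layer(n_features, n_hidden, rng)
w_out, b_out = init_layer(n_hidden, n_outputs, rng)

sample = [0.2, -1.0, 0.5, 3.0]                    # input layer: one value per feature
hidden = relu(dense(sample, w_hidden, b_hidden))  # hidden layer: 5 activations
logit = dense(hidden, w_out, b_out)[0]            # output layer: a single neuron
probability = sigmoid(logit)                      # read as the probability of one class
print(len(sample), len(hidden), probability)
```

The weights here are untrained, so the printed probability carries no meaning yet. The point is only the shape of the computation: the input size matches the number of features, and a single output neuron suits a binary classification problem.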

Neurons in a Neural Network

Each neuron in a neural network has the following components:

- Input: Each neuron receives input from multiple neurons in the previous layer. Each input is associated with a weight, which represents the strength of the connection.

- Weights: These are the parameters that the neural network learns during the training process. They determine the importance of each feature in making a prediction. The network adjusts these weights to minimize the error in its predictions.

- Bias: The bias is an additional learned parameter in each neuron that shifts the weighted sum before the activation function is applied. It can be thought of as the y-intercept in a linear equation.

- Activation Function: The weighted sum of the inputs, plus the bias, is passed through an activation function, which determines how strongly the neuron activates. Activation functions can be linear or non-linear, but non-linear functions are what allow the network to learn complex patterns and relationships in the data.

- Output: The result of the activation function is the output of the neuron, which is passed as input to the neurons in the next layer.
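The components above fit into a few lines of code. The following is a small sketch of a single neuron, assuming a sigmoid activation. The function name `neuron_output` and the example values are made up for illustration.

```python
import math

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs plus the bias, passed through a sigmoid activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

inputs = [1.0, 2.0, 3.0]
weights = [0.5, -0.25, 0.0]   # hypothetical connection strengths
bias = 0.0

# z = 0.5*1.0 + (-0.25)*2.0 + 0.0*3.0 + 0.0 = 0.0, and sigmoid(0) = 0.5
activation = neuron_output(inputs, weights, bias)
print(activation)  # 0.5
```

Note that the third input has a weight of zero, so it has no influence on the output. Training would adjust exactly these weights and the bias.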

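The goal of training a neural network is to learn the weights and biases that make its predictions accurate. This is achieved through a process called backpropagation, paired with an optimization algorithm such as gradient descent.

In backpropagation, the network compares its predictions with the actual target values, and the resulting error is propagated backward through the layers to work out how much each weight and bias contributed to it. The weights and biases are then adjusted to reduce the error. This process is repeated iteratively until the network achieves the desired level of accuracy.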

Types of Neural Networks

The artificial neural network is a complex and versatile tool that has been adapted to a wide range of applications, resulting in the development of various types of neural networks.

Each type is tailored to specific tasks, such as image recognition, language processing, or financial forecasting. Below are some of the most common types of neural networks:

- Feedforward Neural Networks: These are the simplest form of neural networks, where the data moves in one direction, from the input layer through the hidden layers to the output layer. They are used for tasks such as classification and regression.

- Convolutional Neural Networks (CNNs): CNNs are designed for image recognition and processing. They use a specialized architecture to process visual data efficiently. CNNs are widely used in applications such as object detection, facial recognition, and image classification.

- Recurrent Neural Networks (RNNs): RNNs are designed for sequential data, where the order of the data points is important. They have connections that loop back, allowing them to maintain a memory of previous inputs. RNNs are used in applications such as natural language processing, speech recognition, and time series prediction.

- Long Short-Term Memory (LSTM) Networks: LSTMs are a specialized type of RNN that are particularly good at learning long-term dependencies in data. They are widely used in tasks such as language translation, speech recognition, and handwriting recognition.

- Generative Adversarial Networks (GANs): GANs are a type of neural network architecture used to generate new data samples that are similar to a given dataset. They consist of two networks, a generator and a discriminator, that are trained together in a competitive setting.

- Autoencoders: Autoencoders are a type of neural network that learns to encode input data into a compact representation and then decode it back into the original data. They are used for tasks such as data compression, denoising, and feature learning.

- Recursive Neural Networks (RecNNs): RecNNs are designed to operate on structured data, such as trees or graphs. They can be used in tasks such as parsing, semantic role labeling, and sentiment analysis.

- Spiking Neural Networks (SNNs): SNNs are a type of neural network that closely mimics the behavior of biological neurons. They use a different computational model based on the timing of spikes, or action potentials, in the neurons. SNNs are used in applications such as neuromorphic computing and spiking neural control systems.

- Radial Basis Function Networks (RBFNs): RBFNs are a type of feedforward neural network that use radial basis functions as activation functions. They are particularly well-suited for tasks such as function approximation, classification, and time series prediction.

The above are some of the most popular types of neural networks that are in use today. In practice, neural networks are often combined or modified to create hybrid architectures that can address the specific requirements of a given task.
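As one illustration of how these types differ in practice, the sketch below shows the looping connection that gives a recurrent network its memory. It uses a single recurrent unit with a tanh activation. The weights `w_x` and `w_h` and the function names are hypothetical values picked for the example.

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: the new state mixes the current input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the unit one element at a time and record each state."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

states_a = run_rnn([1.0, 0.0, 0.0])
states_b = run_rnn([0.0, 0.0, 0.0])
print(states_a)
print(states_b)
```

Both sequences end with the same input, yet the final states differ, because the first sequence still carries a fading trace of its initial `1.0`. A feedforward network, which has no such loop, would treat the two final inputs identically.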

How Do Neural Networks Learn?

Artificial neural networks are a class of machine learning models that can learn from data. Learning in neural networks is a two-step process:

- Forward Pass: During the forward pass, the input data is fed into the network, and the network makes a prediction. The prediction is compared to the actual target value, and the error is calculated.

- Backward Pass (Backpropagation): During the backward pass, the network uses the error to update its weights and biases. This is done using an optimization algorithm, such as gradient descent, which aims to minimize the error. The weights and biases are adjusted in the direction that reduces the error.

This process is repeated for many iterations, and the network continues to learn and improve its predictions.
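The forward and backward passes can be sketched as a short training loop. This example trains a single sigmoid neuron with gradient descent on a tiny, made-up dataset (the logical OR of two inputs). The learning rate and the number of epochs are assumptions, and a real network would repeat the same idea across many neurons and layers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, weights, bias):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Tiny illustrative dataset: the logical OR of two inputs.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

def mean_loss(weights, bias):
    """Average binary cross-entropy over the dataset."""
    total = 0.0
    for x, y in data:
        p = forward(x, weights, bias)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(data)

weights, bias = [0.0, 0.0], 0.0
learning_rate = 0.5  # hypothetical value
initial_loss = mean_loss(weights, bias)

for epoch in range(1000):
    grad_w, grad_b = [0.0, 0.0], 0.0
    for x, y in data:
        p = forward(x, weights, bias)   # forward pass: make a prediction
        error = p - y                   # backward pass: dLoss/dz for sigmoid + cross-entropy
        for i, xi in enumerate(x):
            grad_w[i] += error * xi / len(data)
        grad_b += error / len(data)
    # Gradient descent: move each parameter against its gradient.
    weights = [w - learning_rate * g for w, g in zip(weights, grad_w)]
    bias -= learning_rate * grad_b

final_loss = mean_loss(weights, bias)
predictions = [round(forward(x, weights, bias)) for x, _ in data]
print(initial_loss, final_loss, predictions)
```

With a single neuron there are no hidden layers to propagate the error through, so the backward pass reduces to one line. In a deeper network, backpropagation applies the chain rule to carry that error back layer by layer.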

One of the most important aspects of neural network learning is the choice of a suitable loss function. This function measures the difference between the predicted output and the actual output. The goal is to minimize this difference by adjusting the network’s parameters.

There are different types of loss functions for different tasks, such as:

- Mean Squared Error (MSE): Commonly used for regression problems.

- Binary Cross-Entropy: Used for binary classification problems.

- Categorical Cross-Entropy: Used for multi-class classification problems.

The loss function plays a crucial role in training the network and achieving optimal performance.
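For reference, the three loss functions listed above can be written in a few lines each. This is a plain-Python sketch. The function names and the small clipping constant `eps` (used to avoid taking the logarithm of zero) are assumptions, not part of any particular library.

```python
import math

def mean_squared_error(targets, predictions):
    """Average of the squared differences; suited to regression."""
    return sum((t - p) ** 2 for t, p in zip(targets, predictions)) / len(targets)

def binary_cross_entropy(targets, probabilities, eps=1e-12):
    """Targets are 0 or 1; probabilities are the predicted chance of class 1."""
    total = 0.0
    for t, p in zip(targets, probabilities):
        p = min(max(p, eps), 1.0 - eps)  # keep p away from exactly 0 or 1
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(targets)

def categorical_cross_entropy(one_hot_targets, probability_rows, eps=1e-12):
    """Each target row is one-hot; each probability row sums to 1."""
    total = 0.0
    for target_row, prob_row in zip(one_hot_targets, probability_rows):
        total += -sum(t * math.log(max(p, eps)) for t, p in zip(target_row, prob_row))
    return total / len(one_hot_targets)

mse = mean_squared_error([1.0, 2.0], [1.0, 4.0])                   # (0 + 4) / 2 = 2.0
bce = binary_cross_entropy([1, 0], [0.5, 0.5])                     # ln 2
cce = categorical_cross_entropy([[0, 1, 0]], [[0.25, 0.5, 0.25]])  # ln 2
print(mse, bce, cce)
```

In both cross-entropy cases the model assigns a probability of 0.5 to the correct class, so the loss comes out as the natural logarithm of 2, roughly 0.693.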

What Is The Architecture of A Neural Network?

The architecture of a neural network refers to its structure and organization. It includes the number of layers, the number of neurons in each layer, and the connections between the neurons.

The architecture of a neural network depends on the specific task it is designed to solve. Common architectures include:

- Shallow Neural Networks: These networks have a small number of layers, typically an input layer, one or two hidden layers, and an output layer. Shallow networks are used for simple tasks and can be trained quickly.

- Deep Neural Networks: These networks have a large number of hidden layers, which allows them to learn complex patterns and relationships in the data. Deep networks are used for tasks such as image and speech recognition, natural language processing, and game playing.

- Fully Connected Networks: In these networks, each neuron in a layer is connected to every neuron in the previous and next layers. Fully connected networks are used in tasks such as classification and regression.

- Convolutional Networks: These networks are designed for processing grid-like data, such as images. They use a special type of layer called a convolutional layer, which allows them to learn spatial hierarchies of features.

- Recurrent Networks: These networks have connections that form cycles, allowing them to process sequential data. They are used in tasks such as language modeling, speech recognition, and time series prediction.

The architecture of a neural network is an important factor in its performance. A well-designed architecture can lead to faster training and better generalization to new data. However, designing an optimal architecture often requires a combination of domain knowledge and experimentation.
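One practical way to compare architectures is to count their trainable parameters. The sketch below does this for fully connected networks, assuming every neuron has one weight per input plus one bias. The function name and the layer sizes are hypothetical.

```python
def count_parameters(layer_sizes):
    """Total weights and biases in a fully connected network with these layer sizes."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # one weight per connection, one bias per neuron
    return total

shallow = count_parameters([4, 8, 1])        # 4*8 + 8 + 8*1 + 1 = 49
deep = count_parameters([4, 8, 8, 8, 1])     # 40 + 72 + 72 + 9 = 193
print(shallow, deep)
```

Adding two hidden layers of the same width roughly quadruples the parameter count here, which is one reason deeper networks tend to need more data and more training time.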

What Are The Layers of A Neural Network?

A neural network is composed of multiple layers, each with a specific function. The most common layers in a neural network are:

- Input Layer: The first layer of the network that receives the input data. Each neuron in this layer represents a feature of the input. The number of neurons in the input layer is determined by the number of input features.

- Hidden Layers: Intermediate layers between the input and output layers. They are called “hidden” because their values are not directly observed in the training data. Hidden layers are where the network learns to extract and represent features from the input data.

- Output Layer: The final layer of the network that produces the output. The number of neurons in the output layer depends on the type of task the network is designed to solve. For example, in a binary classification task, there will be one output neuron representing the probability of one class. In a multi-class classification task, there will be multiple output neurons, each representing the probability of a different class.

Types of Activation Functions

In neural networks, the activation function of a neuron defines the output of that neuron for a given input. There are several types of activation functions, some of which are listed below:

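- Sigmoid Function: Maps any input to a value between 0 and 1. This function was commonly used in hidden layers in the past but has fallen out of favor there due to the “vanishing gradient” problem, where gradients shrink toward zero for large positive or negative inputs. It is still common in the output layer of binary classifiers.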

- Tanh Function: Similar to the sigmoid function but maps inputs to a value between -1 and 1. The tanh function is also prone to the vanishing gradient problem.

- Rectified Linear Unit (ReLU): The ReLU function returns 0 for negative inputs and the input value for positive inputs. It has become the most popular activation function in deep learning due to its simplicity and efficiency.

- Leaky ReLU: An extension of the ReLU function that allows a small, non-zero gradient for negative inputs. This addresses the “dying ReLU” problem, where some neurons can get stuck in a state where they never activate.

- Softmax Function: Used in the output layer of multi-class classification networks. It takes a vector of arbitrary real-valued scores and squashes them into a normalized probability distribution.

The activation function plays a crucial role in determining the capabilities and limitations of the neural network.
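The activation functions listed above are short enough to write out directly. This is a minimal sketch in plain Python. The default `negative_slope` of 0.01 for Leaky ReLU is a common choice but is an assumption here, and the input values are arbitrary.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def tanh(x):
    return math.tanh(x)

def relu(x):
    return max(0.0, x)

def leaky_relu(x, negative_slope=0.01):
    return x if x > 0 else negative_slope * x

def softmax(scores):
    """Turn a list of real-valued scores into probabilities that sum to 1."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
print(sigmoid(0.0), tanh(0.0), relu(-3.0), leaky_relu(-2.0))
print(probs, sum(probs))
```

Softmax differs from the others in that it works on a whole vector at once, which is why it appears in the output layer rather than inside individual hidden neurons.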

Regularization Techniques in Neural Networks

Regularization is a technique used to prevent overfitting in neural networks. Overfitting occurs when a model learns the training data too well, to the point that it negatively impacts its performance on new, unseen data.

Some common regularization techniques include:

- L1 and L2 Regularization: These techniques add a penalty term to the loss function, which encourages the network to keep the weights small. L1 regularization penalizes the absolute values of the weights and can drive some of them to exactly zero, producing sparse weights, while L2 regularization penalizes their squares and shrinks all weights toward zero without eliminating them.

- Dropout: This technique randomly drops a certain percentage of neurons during training, which helps prevent the network from relying too much on specific features.

- Data Augmentation: This involves creating new training data by making small perturbations to the existing data. For example, rotating, flipping, or scaling images.

- Early Stopping: This technique involves stopping the training process once the validation loss starts increasing, indicating that the model is starting to overfit.

- Batch Normalization: This technique normalizes the inputs to a layer over each mini-batch, which can speed up training and reduce the need for other regularization techniques.

By applying these techniques, you can build neural networks that are better at generalizing to new data and are more robust in real-world scenarios.
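Three of these techniques are simple enough to sketch directly. The code below shows an L2 penalty term, dropout, and an early-stopping check. It makes several assumptions: the dropout variant is "inverted" dropout (surviving activations are rescaled so their expected value is unchanged), and early stopping uses a hypothetical `patience` parameter that allows a few non-improving epochs before stopping.

```python
import random

def l2_penalty(weights, strength):
    """Penalty added to the loss: strength times the sum of squared weights."""
    return strength * sum(w * w for w in weights)

def dropout(activations, drop_rate, rng):
    """Inverted dropout: zero each activation with probability drop_rate, rescale the rest."""
    keep = 1.0 - drop_rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

def early_stopping_epoch(validation_losses, patience):
    """Index of the epoch where training stops after `patience` epochs without improvement."""
    best, best_epoch = float("inf"), 0
    for epoch, loss in enumerate(validation_losses):
        if loss < best:
            best, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch
    return len(validation_losses) - 1

penalty = l2_penalty([1.0, -2.0], strength=0.1)   # 0.1 * (1 + 4) = 0.5
dropped = dropout([1.0] * 10, drop_rate=0.5, rng=random.Random(0))
stop_at = early_stopping_epoch([0.9, 0.7, 0.6, 0.65, 0.7, 0.8], patience=2)
print(penalty, dropped, stop_at)
```

In the early-stopping example the validation loss bottoms out at epoch 2, so with a patience of 2 training stops at epoch 4. Dropout is applied only during training. At prediction time all neurons are kept.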

What Are The Challenges Of Neural Networks?

As powerful as neural networks are, they also come with their own set of challenges and limitations.

Some of the most common challenges include:

- Training Time: Training neural networks can be computationally expensive and time-consuming, especially for large networks with many layers and parameters. This can be a significant barrier, particularly in real-time applications where rapid decision-making is required.

- Overfitting: Overfitting occurs when a network learns the training data too well and fails to generalize to new, unseen data. It can be mitigated through techniques like dropout, early stopping, and data augmentation.

- Data Quantity and Quality: Neural networks require a large amount of labeled data to learn effectively. Obtaining high-quality data can be challenging, especially in domains where data collection is expensive or time-consuming.

- Hyperparameter Tuning: Neural networks have numerous hyperparameters, such as learning rate, batch size, and network architecture, that need to be carefully tuned to achieve optimal performance. Finding the right combination of hyperparameters can be a time-consuming process.

- Interpretability: Neural networks are often considered “black boxes” because it can be challenging to understand how they arrive at their predictions. This lack of interpretability can be a significant hurdle, especially in applications where decision-making processes need to be transparent and easily understood.

- Resource Requirements: In addition to the training time, neural networks also require substantial computational resources, including high-performance GPUs or TPUs. This can be a barrier for individuals or organizations with limited access to such hardware.

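- Learning from Small Datasets: Although neural networks have shown great success in various tasks, they often struggle with learning from small datasets. This issue is particularly challenging in domains with limited data availability, such as healthcare or certain scientific disciplines. Transfer learning, where a network pretrained on a large dataset is adapted to the smaller one, is a common way to reduce the problem.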

- Adversarial Attacks: Neural networks can be vulnerable to adversarial attacks, where small, carefully crafted perturbations to the input data can cause the network to make incorrect predictions. This can be a significant concern in security-critical applications, such as autonomous vehicles or cybersecurity.
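To show how small an adversarial perturbation can be, here is a sketch of one well-known attack, the fast gradient sign method, applied to a single sigmoid neuron. The article does not name a specific attack, so this choice is an assumption, as are the "already trained" weights, the input, and the step size `epsilon`.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(x, weights, bias):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def sign(value):
    return (value > 0) - (value < 0)

def fgsm_perturb(x, y, weights, bias, epsilon):
    """Shift each feature by epsilon in the direction that increases the loss."""
    p = predict(x, weights, bias)
    # For a sigmoid output with cross-entropy loss, dLoss/dx_i = (p - y) * w_i
    gradient = [(p - y) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, gradient)]

weights, bias = [2.0, -3.0], 0.0   # hypothetical, already-trained model
x, y = [0.3, 0.1], 1.0             # an input the model classifies correctly
x_adv = fgsm_perturb(x, y, weights, bias, epsilon=0.2)

clean_p = predict(x, weights, bias)
adv_p = predict(x_adv, weights, bias)
print(clean_p, adv_p)
```

Each feature moves by only 0.2, yet the predicted probability of the correct class drops from above 0.5 to below it, so the classification flips. Real attacks on deep networks follow the same principle, with the gradient computed through all layers.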

These challenges are the focus of ongoing research and development efforts, and many of them are actively being addressed by the machine learning community. As the field continues to advance, it is expected that these challenges will be mitigated, allowing for the broader and more effective application of neural networks in a wide range of domains.

How Are Neural Networks Applied in Real-World Scenarios?

Neural networks have found numerous applications across a wide range of domains, from image and speech recognition to natural language processing and healthcare.

Some of the most notable applications include:

- Image and Object Recognition: Convolutional neural networks (CNNs) have revolutionized image and object recognition tasks. They are used in applications such as self-driving cars, facial recognition, and medical imaging.

- Speech and Audio Recognition: Recurrent neural networks (RNNs) and their variants, such as long short-term memory (LSTM) networks, are widely used in speech and audio recognition. They have been used in automatic speech recognition systems, including those behind virtual assistants like Siri and Alexa.

- Natural Language Processing: Neural networks have made significant advancements in natural language processing (NLP). They are used in tasks such as language translation, sentiment analysis, and chatbots. Transformers, a type of neural network, have greatly improved the state-of-the-art in NLP.

- Healthcare: Neural networks are used in various healthcare applications, including disease diagnosis, medical imaging analysis, and personalized medicine. They can help identify patterns and anomalies in large medical datasets.

- Finance: Neural networks are used in finance for tasks such as fraud detection, stock market prediction, and algorithmic trading. They can analyze large amounts of financial data and identify complex patterns that may not be apparent to human analysts.

- Gaming: Neural networks have been used in gaming to create intelligent agents that can play games at a high level. For example, AlphaGo, a system developed by DeepMind that combines deep neural networks with tree search, defeated world champion Lee Sedol at the game of Go in 2016.

- Automotive Industry: Neural networks are used in the automotive industry for autonomous driving. They can process sensor data from cameras, radar, and lidar to make real-time decisions, such as steering, braking, and acceleration.

- Aerospace and Defense: Neural networks are used in aerospace and defense for tasks such as autonomous drones, target recognition, and missile guidance systems.

- Social Media and Advertising: Neural networks are used by social media platforms and advertisers for tasks such as content recommendation, user profiling, and targeted advertising.

- Energy and Utilities: Neural networks are used in the energy and utilities sector for tasks such as energy load forecasting, predictive maintenance, and grid optimization.

These are just a few examples of the many real-world applications of neural networks. As the field of artificial intelligence continues to advance, it is expected that neural networks will play an increasingly important role in a wide range of industries and domains.

Final Thoughts

Neural networks are the building blocks of modern artificial intelligence. They have revolutionized the way we solve complex problems, enabling machines to learn from data and make intelligent decisions.

As we have seen in this article, neural networks are inspired by the human brain and are composed of interconnected neurons that work together to process information. They are capable of tackling a wide range of tasks, from image and speech recognition to language processing and healthcare applications.

While neural networks have made significant advancements, there are still many challenges to overcome. These challenges are the focus of ongoing research and development, and it is expected that the field of artificial intelligence will continue to evolve, pushing the boundaries of what is possible with neural networks.

As we look to the future, it is clear that neural networks will play a central role in shaping the next generation of intelligent systems. With their ability to learn from data and adapt to new situations, neural networks hold the potential to solve some of the most pressing problems facing humanity.