Imagine a world where computers can see and understand images as well as humans do. This is the power of Convolutional Neural Networks (CNNs). They have revolutionized the field of computer vision, making significant advancements in image recognition, object detection, and image generation.

Convolutional Neural Networks (CNNs) are a type of deep learning model specifically designed for processing and analyzing visual data. Inspired by the human visual system, CNNs use a technique called convolution to automatically and adaptively learn spatial hierarchies of features from the input images. This makes them extremely effective at tasks such as image classification, object detection, and image generation.

This article walks you through the key concepts of CNNs, shows how to build one with Keras, and surveys well-known architectures, common applications such as object detection, and related techniques such as transfer learning.

By the end of it, you’ll have a solid understanding of how CNNs work and how to leverage their power to solve complex real-world problems.

Let’s get into it!

What is a Convolutional Neural Network?

CNNs have become a staple of computer vision, with applications ranging from image classification and object detection to image generation.

CNNs are inspired by the structure and function of the human visual cortex, where neurons respond to stimuli in overlapping regions of the visual field. This concept of local receptive fields is the basis for the convolutional layers in CNNs.

The basic architecture of a CNN consists of three main types of layers:

- Convolutional layers

- Pooling layers

- Fully connected layers

1. Convolutional Layers

The convolutional layers are the heart of CNNs. They apply a series of convolutional filters to the input image, which allows the network to automatically and adaptively learn the spatial hierarchies of features from the data.

These filters are small matrices of learnable weights that respond to features such as edges, textures, and patterns. Each filter is slid across every spatial location of its input, and because later layers operate on the outputs of earlier ones, deeper layers learn progressively more complex and abstract features.

2. Pooling Layers

Pooling layers reduce the spatial dimensions of the feature maps generated by the convolutional layers. This lowers the amount of computation, shrinks the input to later layers (and therefore their parameter count), and makes the learned features more robust to small shifts in the input.

The most common type of pooling is max pooling, where the maximum value within a sliding window is selected as the output. Other pooling methods like average pooling can also be used.

3. Fully Connected Layers

Fully connected layers are used at the end of the network to classify the extracted features. These layers take the high-level filtered information from the previous layers and use it to classify the input into various classes (in the case of image classification tasks).

In these layers, each neuron is connected to every neuron in the previous layer, hence the name “fully connected.” For multi-class classification, the final layer typically uses a softmax activation function to output a probability distribution over the classes.

The image above shows the architecture of a simple CNN.
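To make the softmax step of the final layer concrete, here is a small NumPy sketch. The scores are made-up values for three hypothetical classes, not the output of a real network.

```python
import numpy as np

def softmax(scores):
    """Turn raw class scores (logits) into probabilities that sum to 1."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exps = np.exp(shifted)
    return exps / np.sum(exps)

# Made-up scores from a final fully connected layer with three classes.
scores = np.array([2.0, 1.0, 0.1])
probs = softmax(scores)

# The predicted class is the index with the highest probability.
predicted_class = int(np.argmax(probs))
print(probs, predicted_class)
```

The largest score always gets the largest probability, so softmax changes how confident the output looks but not which class wins.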

How Do Convolutional Neural Networks Work?

CNNs are complex models, but their basic operation can be broken down into three main steps:

- Convolution

- Non-linearity (ReLU)

- Pooling

1. Convolution

The key to understanding CNNs is understanding how the convolution operation works.

In the context of neural networks, the convolution operation is used to extract features from the input data. The operation involves combining the input data with a small, learnable kernel that is then moved across the input data to apply the operation at different locations.

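The image above illustrates the convolution process. In the figure, the 3×3 grid is the input data, and the 2×2 grid is the kernel. At each position, the kernel is multiplied element-wise with the patch of input it covers, and the products are summed into a single output value. The kernel then moves one step to the right; the size of that step is called the stride.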

In a CNN, the learnable kernel represents the weights that are learned during the training process. These weights are adjusted to detect specific patterns or features in the input data.

The kernel is then applied to different parts of the input data, effectively scanning the entire input to detect these features. The result of this operation is a feature map, which represents the presence of the learned features at different locations in the input data.

In the figure above, the feature map is the 2×2 grid that represents the output of the convolution operation.
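The same 3×3 input and 2×2 kernel setup can be reproduced in a few lines of NumPy. This is a minimal sketch with illustrative values (the numbers are assumptions, not taken from the figure), and `conv2d` is a hypothetical helper name. With no padding, an input of size n, a kernel of size k, and a stride s give an output of size (n - k) / s + 1, which is 2 here.

```python
import numpy as np

def conv2d(image, kernel, stride=1):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            r, c = i * stride, j * stride
            patch = image[r:r + kh, c:c + kw]   # region under the kernel
            out[i, j] = np.sum(patch * kernel)  # element-wise multiply, then sum
    return out

# Illustrative 3x3 input and 2x2 kernel.
image = np.array([[1, 2, 3],
                  [4, 5, 6],
                  [7, 8, 9]], dtype=float)
kernel = np.array([[1, 0],
                   [0, -1]], dtype=float)

feature_map = conv2d(image, kernel)
print(feature_map)  # 2x2 feature map; every entry is -4.0 for these values
```

In a real CNN the kernel values are not chosen by hand; they are the weights the network learns during training.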

2. Non-Linearity (ReLU)

The next step in the CNN process is to apply a non-linear activation function to the feature maps.

In a CNN, the most common activation function used is the Rectified Linear Unit (ReLU) function.

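The ReLU function is a simple non-linear function that sets all negative values in the feature map to zero and leaves positive values unchanged. Without a non-linearity, stacked convolutions would collapse into a single linear operation; ReLU is what lets the network learn more complex and abstract features.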
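In code, ReLU is a one-liner. The sketch below applies it to a small feature map with made-up values.

```python
import numpy as np

def relu(x):
    """Replace negative values with zero; keep positive values as they are."""
    return np.maximum(0, x)

# Made-up feature map containing both negative and positive responses.
feature_map = np.array([[-4.0, 2.5],
                        [0.0, -1.5]])
activated = relu(feature_map)
print(activated)  # [[0.  2.5]
                  #  [0.  0. ]]
```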

3. Pooling

Pooling is a downsampling operation that reduces the spatial size of the feature maps. Pooling itself has no learnable parameters, but it cuts the computation in later layers and the number of parameters in any fully connected layers that follow, making the network more efficient.

There are several types of pooling, but the most common is max pooling. In max pooling, the feature map is divided into non-overlapping regions, and the maximum value from each region is selected to form the pooled feature map.

The image above shows the max pooling operation. The original feature map is divided into 2×2 non-overlapping regions, and the maximum value from each region is selected to form the 2×2 pooled feature map.
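The 2×2 max pooling described here can be sketched with a NumPy reshape. The 4×4 values are illustrative, `max_pool_2x2` is a hypothetical helper name, and the sketch assumes the height and width are both even.

```python
import numpy as np

def max_pool_2x2(feature_map):
    """Max pooling over non-overlapping 2x2 regions (even height and width assumed)."""
    h, w = feature_map.shape
    # Group the map into (h/2, 2, w/2, 2) blocks, then take the max of each block.
    blocks = feature_map.reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))

# Illustrative 4x4 feature map.
feature_map = np.array([[1, 3, 2, 4],
                        [5, 6, 1, 0],
                        [7, 2, 9, 8],
                        [3, 4, 6, 5]])
pooled = max_pool_2x2(feature_map)
print(pooled)  # [[6 4]
               #  [7 9]]
```

Swapping `max` for `mean` in the last line of the helper would give average pooling instead.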

By combining these three operations (convolution, non-linearity, and pooling), CNNs are able to learn increasingly complex and abstract features from the input data. This makes them highly effective at a wide range of visual tasks, such as image classification, object detection, and more.

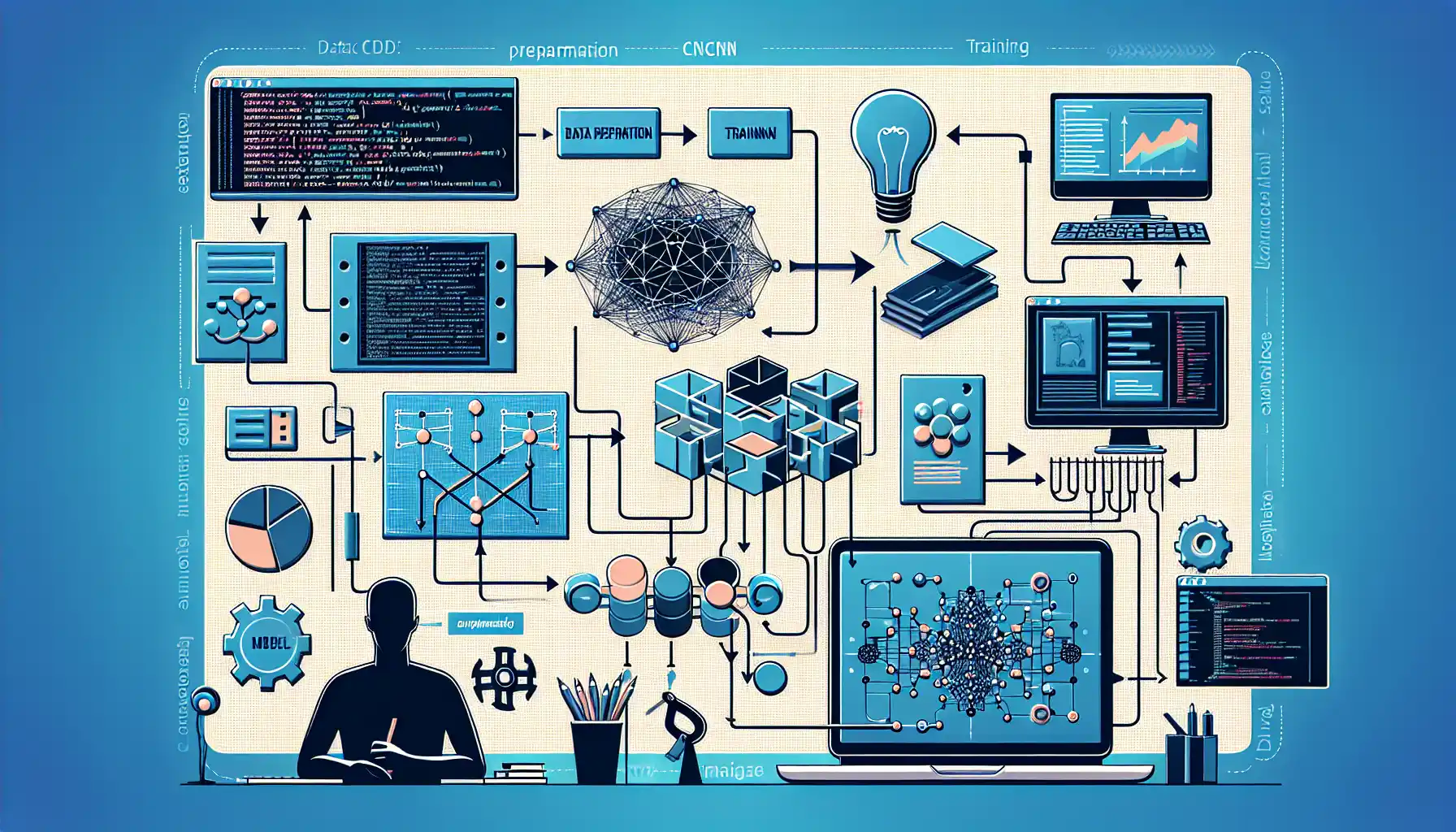

How to Build a Convolutional Neural Network?

Now that you have a basic understanding of CNNs, let’s take a look at how to build one using the Keras API. Keras is a popular deep learning library that makes building and training neural networks simple and intuitive.

Here’s a step-by-step guide to building a simple CNN for image classification using Keras:

1. Load the Data

The first step is to load the data. In this example, we’ll use the Fashion-MNIST dataset, which consists of 28×28 grayscale images in 10 clothing categories, split into 60,000 training images and 10,000 test images. Keras provides a convenient API to load this dataset.

Here’s how you can load the Fashion-MNIST dataset:
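A minimal sketch, assuming TensorFlow is installed and Keras is imported from it. The variable names are a common convention rather than anything required by the API.

```python
from tensorflow import keras

# Downloads the dataset on first use and caches it locally.
(x_train, y_train), (x_test, y_test) = keras.datasets.fashion_mnist.load_data()

print(x_train.shape, y_train.shape)  # (60000, 28, 28) (60000,)
print(x_test.shape, y_test.shape)    # (10000, 28, 28) (10000,)
```

The images arrive as unsigned 8-bit integers in the range 0 to 255, and the labels are integers from 0 to 9.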

2. Preprocess the Data

After loading the data, you need to preprocess it before feeding it to the CNN. Preprocessing steps typically include normalizing the pixel values and reshaping the input data to the correct format.

Here’s how you can preprocess the data:
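One possible version of these two steps is sketched below. To stay self-contained, it runs on a random stand-in batch with the same shape and dtype as Fashion-MNIST images; `preprocess` is a hypothetical helper name.

```python
import numpy as np

def preprocess(images):
    """Scale uint8 pixels to [0, 1] and add a trailing channel axis."""
    scaled = images.astype("float32") / 255.0
    return scaled[..., np.newaxis]  # (N, 28, 28) -> (N, 28, 28, 1)

# Random stand-in for a batch of Fashion-MNIST images (assumption for this sketch).
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(8, 28, 28), dtype=np.uint8)

x = preprocess(fake_images)
print(x.shape, x.dtype)  # (8, 28, 28, 1) float32
```

With the real dataset, the same function would be applied to both the training and the test images. The extra axis is there because Keras convolutional layers expect a channel dimension, even for grayscale data.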

3. Build the Model

Now, let’s build the CNN model. The model will consist of several convolutional and pooling layers followed by fully connected layers at the end. We’ll also add ReLU activation functions to introduce non-linearity.

Here’s how you can build the model:
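The layer sizes below are illustrative choices, not tuned values; this is one reasonable small architecture for 28×28 grayscale inputs rather than a definitive one. `build_model` is a hypothetical helper name.

```python
from tensorflow import keras
from tensorflow.keras import layers

def build_model(num_classes=10):
    """Two conv/pool stages followed by two fully connected layers."""
    return keras.Sequential([
        keras.Input(shape=(28, 28, 1)),
        layers.Conv2D(32, kernel_size=3, activation="relu"),
        layers.MaxPooling2D(pool_size=2),
        layers.Conv2D(64, kernel_size=3, activation="relu"),
        layers.MaxPooling2D(pool_size=2),
        layers.Flatten(),                                 # feature maps -> vector
        layers.Dense(64, activation="relu"),
        layers.Dense(num_classes, activation="softmax"),  # class probabilities
    ])

model = build_model()
model.summary()
```

Calling `model.summary()` prints the output shape and parameter count of each layer, which is a quick way to confirm that the pooling layers shrink the feature maps as expected.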

4. Compile the Model

After building the model, you need to compile it. This involves specifying the loss function, the optimizer, and the metrics that you want to track during training.

Here’s how you can compile the model:
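A minimal sketch of the compile step. To keep the block runnable on its own, it uses a compact stand-in model (an assumption of this sketch) in place of the full model from the previous step. Adam and sparse categorical cross-entropy are common defaults, not the only valid choices.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Compact stand-in for the model built in the previous step.
model = keras.Sequential([
    keras.Input(shape=(28, 28, 1)),
    layers.Conv2D(32, 3, activation="relu"),
    layers.MaxPooling2D(2),
    layers.Flatten(),
    layers.Dense(10, activation="softmax"),
])

model.compile(
    optimizer="adam",                        # adaptive gradient-based optimizer
    loss="sparse_categorical_crossentropy",  # labels are integers 0-9
    metrics=["accuracy"],                    # tracked during training and evaluation
)
```

The sparse variant of the loss fits here because Fashion-MNIST labels are plain integers; with one-hot encoded labels, `categorical_crossentropy` would be the matching choice.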

5. Train the Model

The next step is to train the model. You can do this using the fit method, which takes the input data, the target data, the number of epochs, and the batch size as parameters.

Here’s how you can train the model:
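The sketch below shows the `fit` call on random stand-in data with a compact stand-in model, so that it runs quickly and without a download. Both stand-ins are assumptions of this sketch; the epoch count, batch size, and validation split are illustrative.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Random stand-in data shaped like preprocessed Fashion-MNIST.
rng = np.random.default_rng(0)
x_train = rng.random((256, 28, 28, 1), dtype=np.float32)
y_train = rng.integers(0, 10, size=256)

# Compact stand-in for the compiled model from the previous steps.
model = keras.Sequential([
    keras.Input(shape=(28, 28, 1)),
    layers.Conv2D(16, 3, activation="relu"),
    layers.MaxPooling2D(2),
    layers.Flatten(),
    layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

history = model.fit(
    x_train, y_train,
    epochs=2,              # passes over the training data
    batch_size=64,         # samples per gradient update
    validation_split=0.1,  # hold out 10% of the data for validation
    verbose=0,
)
print(history.history["loss"])
```

On the real dataset you would pass the preprocessed Fashion-MNIST arrays and train for more epochs. The returned `history` object records the loss and metrics per epoch, which is useful for spotting overfitting.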

After training the model, you can evaluate its performance on the test data.

You can make predictions using the model:
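Evaluation and prediction can be sketched together. As an assumption to keep the block self-contained, the model here is a compact, untrained stand-in and the test data is random, so the reported accuracy is meaningless; only the calls and the output shapes carry over to the real workflow.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Random stand-in for the preprocessed test set.
rng = np.random.default_rng(0)
x_test = rng.random((32, 28, 28, 1), dtype=np.float32)
y_test = rng.integers(0, 10, size=32)

# Compact, untrained stand-in for the trained model.
model = keras.Sequential([
    keras.Input(shape=(28, 28, 1)),
    layers.Conv2D(16, 3, activation="relu"),
    layers.MaxPooling2D(2),
    layers.Flatten(),
    layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# evaluate returns the loss followed by each compiled metric.
test_loss, test_acc = model.evaluate(x_test, y_test, verbose=0)

# predict returns one probability per class for each input image.
probs = model.predict(x_test[:5], verbose=0)  # shape (5, 10)
predicted_labels = np.argmax(probs, axis=1)   # most likely class per image
print(test_loss, test_acc, predicted_labels)
```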

And that’s it! You’ve successfully built a simple CNN for image classification using Keras.

5 Types of Convolutional Neural Networks

1. LeNet-5

LeNet-5 is one of the pioneering CNNs, designed for handwritten digit recognition. It was introduced by Yann LeCun and colleagues in 1998 and is still widely used as an introductory example and a simple baseline.

LeNet-5 consists of 7 layers, including 3 convolutional layers, 2 pooling layers, and 2 fully connected layers.

2. AlexNet

AlexNet is a landmark CNN architecture that significantly outperformed traditional methods on the ImageNet Large Scale Visual Recognition Challenge in 2012. It was developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton and was one of the first CNNs to use a deep architecture.

AlexNet consists of 5 convolutional layers followed by 3 fully connected layers. It also popularized the ReLU activation function in deep CNNs, which has since become a standard choice.

3. VGG-16

VGG-16 is a CNN architecture developed by the Visual Geometry Group at the University of Oxford. It was entered in the 2014 ImageNet competition and is known for its simplicity and depth.

VGG-16 has 13 convolutional layers followed by 3 fully connected layers. It uses small 3×3 filters for convolution and 2×2 filters for pooling.

4. Inception-v3

Inception-v3 is part of the Inception architecture series, which was developed by Google. It is known for its use of inception modules, which are parallel subnetworks that learn different features from the input data and are then combined.

Inception-v3 is usually described as 42 to 48 layers deep, depending on which layers are counted (the original paper reports 42). It uses batch normalization and was trained with the RMSprop optimizer.

5. ResNet-50

ResNet-50 is a CNN architecture developed by Kaiming He and a team at Microsoft Research. It is known for its use of residual connections (hence the name “ResNet,” short for residual network), which add a block's input to its output and make very deep networks practical to train.

ResNet-50 has 50 weight layers along its main path: 49 convolutional layers and 1 fully connected layer. It uses batch normalization and the ReLU activation function.
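The idea of a residual connection can be sketched in Keras as follows. Note that this is the basic two-convolution block, used here for brevity; ResNet-50 itself uses a three-layer bottleneck variant. The function name and sizes are illustrative assumptions.

```python
from tensorflow import keras
from tensorflow.keras import layers

def residual_block(x, filters):
    """Basic residual block: output = ReLU(F(x) + x)."""
    shortcut = x  # identity path that skips the convolutions
    y = layers.Conv2D(filters, 3, padding="same")(x)
    y = layers.BatchNormalization()(y)
    y = layers.ReLU()(y)
    y = layers.Conv2D(filters, 3, padding="same")(y)
    y = layers.BatchNormalization()(y)
    y = layers.Add()([shortcut, y])  # the residual connection
    return layers.ReLU()(y)

# The input must already have `filters` channels so the addition is valid.
inputs = keras.Input(shape=(32, 32, 16))
outputs = residual_block(inputs, filters=16)
block = keras.Model(inputs, outputs)
print(block.output_shape)  # (None, 32, 32, 16)
```

Because the shortcut passes the input through unchanged, gradients have a direct path back to earlier layers, which is what makes stacking many such blocks workable.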

Applications of Convolutional Neural Networks

CNNs have a wide range of applications in various fields. Let’s take a look at some of the key applications of CNNs:

1. Image Recognition

CNNs are widely used for image recognition tasks, such as identifying objects, animals, and people in images. They have been used to achieve state-of-the-art performance on large-scale image datasets like ImageNet.

2. Object Detection

CNNs can also be used for object detection, where the goal is to locate and classify objects within an image. This is a critical task in fields like autonomous vehicles, surveillance, and robotics.

3. Facial Recognition

CNNs are used in facial recognition systems to identify and verify individuals in images and videos. They can be found in security systems, social media platforms, and mobile devices.

4. Medical Imaging

CNNs have made significant advancements in medical imaging, aiding in the detection and diagnosis of various diseases. They are used in tasks such as tumor detection, organ segmentation, and pathology analysis.

5. Natural Language Processing

While not traditionally an image-related task, CNNs have been successfully applied to natural language processing tasks, such as text classification and sentiment analysis.

They can be used to extract features from text data, which can then be fed into other models for further processing.

6. Autonomous Vehicles

CNNs are a key component of the computer vision systems used in autonomous vehicles. They enable vehicles to detect and classify objects on the road, such as pedestrians, other vehicles, and road signs.

This allows the vehicles to make informed decisions in real-time, enhancing safety and efficiency.

7. Style Transfer

CNNs can be used for creative applications such as style transfer, where the artistic style of one image can be applied to another. This technique has gained popularity in the field of digital art.

CNNs are a powerful tool for processing and analyzing visual data, and their applications continue to expand as the field of deep learning advances.

Limitations and Challenges of Convolutional Neural Networks

While CNNs have made significant advancements in computer vision, they are not without limitations and challenges. Understanding these limitations is crucial for developing effective solutions and pushing the boundaries of the field. Some of the key limitations and challenges include:

- Limited Receptive Field: Each convolutional unit sees only a local region of its input, and although the receptive field grows with depth, the effective receptive field often remains smaller than the full image. This can be a problem for tasks that require an understanding of global context.

- Parameter Size: CNNs can have a large number of parameters, which can make them computationally expensive and memory-intensive. This can make training and inference slow, especially on resource-constrained devices.

- Data Efficiency: CNNs require a large amount of labeled data to learn effectively. This can be a challenge in domains where labeled data is scarce or expensive to obtain.

- Adversarial Attacks: CNNs are susceptible to adversarial attacks, where small, carefully crafted perturbations to the input data can cause the model to make incorrect predictions. This is a significant security concern, especially in applications like autonomous vehicles and security systems.

- Lack of Interpretability: CNNs are often referred to as “black box” models, meaning that it can be challenging to understand how they arrive at their predictions. This lack of interpretability can be a barrier to their adoption in fields where transparency is essential, such as healthcare and law enforcement.

- Invariance and Equivariance: Convolution is equivariant to translation, and pooling adds a degree of translation invariance, but standard CNNs are not inherently invariant to other transformations such as rotation or scaling. Robustness to those usually has to be learned through data augmentation or built in with specialized architectures, and striking the right balance for a given task can require careful design and tuning.

- Overfitting: CNNs can be prone to overfitting, where they learn to perform well on the training data but fail to generalize to new, unseen data. Regularization techniques and careful hyperparameter tuning are required to mitigate this issue.

- Transfer Learning and Fine-Tuning: Transfer learning and fine-tuning are common ways to address the limited data efficiency of CNNs. They start from a model pre-trained on a large dataset and adapt it to a new task with a smaller dataset. However, they work best when the pre-trained model's source data is reasonably close to the new task, and they can still suffer from the challenges listed above.
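The transfer learning item in the list above can be sketched with Keras Applications. This is a minimal sketch under stated assumptions: VGG16 as the base, a 64×64 RGB input, five target classes, and the hypothetical helper name `build_transfer_model`. Input preprocessing for the base model is omitted for brevity.

```python
from tensorflow import keras
from tensorflow.keras import layers

def build_transfer_model(num_classes, weights="imagenet"):
    """Frozen convolutional base with a new classification head on top."""
    base = keras.applications.VGG16(
        include_top=False,        # drop the original ImageNet classifier
        weights=weights,
        input_shape=(64, 64, 3),
    )
    base.trainable = False        # freeze the pre-trained features
    inputs = keras.Input(shape=(64, 64, 3))
    x = base(inputs, training=False)
    x = layers.GlobalAveragePooling2D()(x)
    outputs = layers.Dense(num_classes, activation="softmax")(x)
    return keras.Model(inputs, outputs)

# weights=None only checks the structure without a download;
# pass weights="imagenet" to actually reuse pre-trained features.
model = build_transfer_model(num_classes=5, weights=None)
print(model.output_shape)  # (None, 5)
```

Only the new dense layer is trainable at first. Fine-tuning would then unfreeze some of the top layers of the base and continue training with a low learning rate.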

While CNNs have revolutionized the field of computer vision and continue to be a driving force behind many cutting-edge applications, it is important to recognize their limitations and challenges.

Researchers and practitioners are actively working on addressing these issues, and the field of CNNs continues to evolve rapidly.

Final Thoughts

In this article, you’ve learned about Convolutional Neural Networks (CNNs), one of the most influential areas of machine learning.

CNNs have revolutionized the field of computer vision and have become a staple in image processing and pattern recognition tasks.

We started by discussing the basic architecture of CNNs, focusing on the three main types of layers: convolutional layers, pooling layers, and fully connected layers.

We also delved into the inner workings of CNNs, explaining how the convolution, ReLU activation, and pooling operations work together to extract features from input data.

Then, we moved on to building a simple CNN using Keras and explored the five key CNN architectures: LeNet-5, AlexNet, VGG-16, Inception-v3, and ResNet-50.

We also delved into the applications of CNNs, from image recognition to object detection, and even touched on some of the limitations and challenges that CNNs face.

CNNs have opened up a world of possibilities for the field of computer vision, and as technology continues to advance, we can expect to see even more exciting developments in this area.

Frequently Asked Questions

How are CNNs different from traditional neural networks?

CNNs are specifically designed to process and analyze visual data, whereas traditional neural networks are more general-purpose and can be used for a wide range of tasks.

The key difference lies in the architecture and the types of layers used. CNNs use convolutional layers, pooling layers, and fully connected layers, while traditional neural networks typically consist of fully connected layers only.

What are the main components of a CNN?

The main components of a CNN are:

- Convolutional layers: These layers apply a series of learnable filters to the input data, extracting features from the data.

- Pooling layers: These layers reduce the spatial dimensions of the feature maps, making the features more robust to small shifts in the input.

- Fully connected layers: These layers take the high-level filtered information from the previous layers and use it to classify the input data.

What is the training process for a CNN?

The training process for a CNN involves:

- Initializing the weights and biases.

- Forward propagation: Passing the input data through the network to obtain predictions.

- Calculating the loss: Comparing the predictions with the actual labels.

- Backpropagation: Computing the gradient of the loss with respect to each weight and bias, then updating them with an optimizer to reduce the loss.

- Repeating steps 2-4 for multiple epochs until the model converges.
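The steps above can be sketched as a hand-written training loop. To keep the gradients short enough to write by hand, this sketch trains a single-layer logistic classifier on made-up 2D data instead of a CNN; that substitution is an assumption of the example. The loop structure is the same one a framework runs for a CNN, with backpropagation computing the gradients through every layer.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Made-up, linearly separable toy data (not images).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

# Step 1: initialize the weights and bias.
w = np.zeros(2)
b = 0.0
learning_rate = 0.5

losses = []
for epoch in range(100):
    # Step 2: forward propagation.
    p = sigmoid(X @ w + b)
    # Step 3: binary cross-entropy loss (small epsilon avoids log(0)).
    loss = -np.mean(y * np.log(p + 1e-12) + (1 - y) * np.log(1 - p + 1e-12))
    losses.append(loss)
    # Step 4: gradients of the loss, then a gradient descent update.
    grad_w = X.T @ (p - y) / len(y)
    grad_b = np.mean(p - y)
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

accuracy = np.mean((sigmoid(X @ w + b) > 0.5) == (y == 1))
print(losses[0], losses[-1], accuracy)
```

In Keras, this entire loop is what a single call to `fit` performs for you.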

What are some popular CNN architectures?

Some popular CNN architectures include:

- LeNet-5: One of the first CNN architectures developed by Yann LeCun for handwritten digit recognition.

- AlexNet: A landmark CNN that significantly outperformed traditional methods on the ImageNet Large Scale Visual Recognition Challenge in 2012.

- VGG-16: A CNN architecture with 16 layers developed by the Visual Geometry Group at the University of Oxford.

- Inception-v3: A CNN architecture with 48 layers developed by Google that is known for its use of inception modules.

- ResNet-50: A CNN architecture with 50 layers developed by Microsoft Research that is known for its use of residual connections.

How can I implement a CNN in TensorFlow or Keras?

To implement a CNN in TensorFlow or Keras, you can follow these steps:

- Load and preprocess the data.

- Define the CNN architecture by specifying the layers and activation functions.

- Compile the model by specifying the optimizer and loss function.

- Train the model using the fit method.

- Evaluate the model on a test set using the evaluate method.

- Make predictions on new data using the predict method.

What are some common applications of CNNs?

CNNs are widely used in applications such as:

- Image recognition and classification.

- Object detection and localization.

- Facial recognition and biometric authentication.

- Medical image analysis and diagnosis.

- Autonomous vehicles for scene understanding and navigation.

- Natural language processing for tasks like text classification and sentiment analysis.