Going for a job as a data engineer? Need to nail your Python proficiency? Well, you’re in the right place!

We’ve narrowed things down to the dirty 30: the questions you need to know before a data engineer interview.

Here are some Python-related data engineer interview questions to get you ready:

Explain the difference between a list and a tuple in Python. When would you choose one over the other in a data engineering task?

What is the purpose of the SELECT statement in SQL, and how would you use it to fetch specific columns from a table in a relational database?

In Pandas, what method would you use to drop rows with missing values from a DataFrame? Provide a brief example of its usage.

Why is JSON commonly used in NoSQL databases, and how would you query nested data structures in a MongoDB collection using Python?

But wait, there’s more. Many more.

Read on.

Remember, by being prepared for these and other questions and topics, you can confidently demonstrate your expertise in Python during a data engineer interview.

Let’s dive in!

7 Reasons Why Python is Important for Data Engineers

Firstly, why even learn Python? Why is it important?

Well, Python has emerged as a powerhouse in the realm of data engineering due to the following 7 reasons:

Versatility: Adaptable for diverse tasks, from data cleaning to advanced analytics.

Libraries and Frameworks: Robust tools like Pandas and PySpark enhance data manipulation and processing capabilities.

Community Support: A vibrant community accelerates learning and problem-solving.

Integration Flexibility: Seamless integration with databases, storage solutions, and cloud platforms.

Ease of Adoption: Beginner-friendly syntax enables quick onboarding and increased productivity.

Scalability: Handles large-scale projects and distributed computing effectively.

Interdisciplinary Applicability: Used across various data-related domains, fostering collaboration and a holistic approach.

Python is indispensable in modern data engineering, and testing your Python abilities is a crucial part of the data engineer interview process.

Now let’s get into what Python concepts you need to know and some data engineer interview questions and answers you can expect.

We will break this into core topics.

Topic 1: Core Python Concepts for Data Engineers

Data engineers working with Python need a solid understanding of core concepts to excel in interviews and tackle real-world challenges.

Here are critical concepts along with potential interview questions:

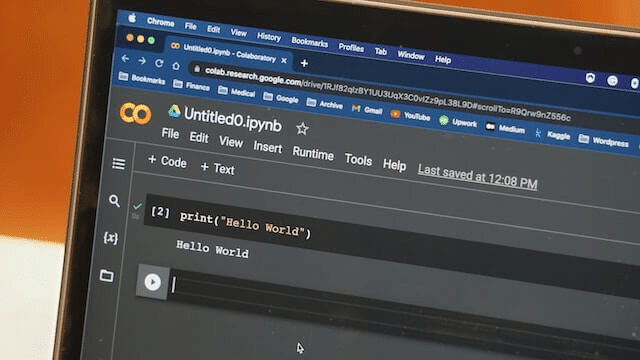

1. Fundamental Syntax

Python is known for its clean and readable syntax, which plays a significant role in the language’s popularity. When learning Python, you should be comfortable with:

Function and variable declarations

If-else statements

For and while loops, and other basic code constructs.

For example, you can work with f-strings to easily format string values, like so:

name = "John"
age = 30
print(f"My name is {name} and I am {age} years old")

Interview Question Sample 1: Function and Variable Declarations

Explain the difference between a function and a variable in Python. Provide an example of each and illustrate how you would use them in a data engineering context.

Interview Question Sample 2: If-Else Statements and Loops

How would you use if-else statements to handle a specific condition in a data processing script? Additionally, demonstrate the use of a for loop to iterate over a dataset and perform a basic operation on each element.
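One possible answer, sketched with made-up sensor records (the field names and the `-999` missing-value sentinel are hypothetical): a `for` loop walks the dataset while an `if`/`elif`/`else` chain routes each record.

```python
# Hypothetical sensor readings; -999 is an assumed "missing value" sentinel.
readings = [
    {"sensor": "a", "temp_c": 20.0},
    {"sensor": "b", "temp_c": -999},
    {"sensor": "c", "temp_c": 85.0},
]

clean, flagged = [], []
for record in readings:
    temp = record["temp_c"]
    if temp == -999:
        # Skip records carrying the missing-value sentinel
        continue
    elif temp > 80:
        # Set out-of-range values aside for review instead of silently dropping them
        flagged.append(record)
    else:
        # Basic per-element operation: add a Fahrenheit conversion
        clean.append({**record, "temp_f": temp * 9 / 5 + 32})

print(clean)
print(flagged)
```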

Interview Question Sample 3: Basic Code Constructs in Python

Discuss the significance of for and while loops in Python. Provide an example of when you’d choose a for loop over a while loop in a data engineering task, elucidating the advantages of your choice.
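A `for` loop suits a collection whose contents are already known, whereas a `while` loop fits work that ends on a condition, such as paging through an API until it returns nothing. The sketch below fakes the API with a hypothetical `fetch_page` function; it is not a real client library.

```python
# Hypothetical stand-in for a paginated API: returns up to `size` items per page.
DATA = list(range(1, 8))

def fetch_page(page: int, size: int = 3) -> list[int]:
    start = page * size
    return DATA[start:start + size]

# while: the number of pages is unknown, so loop until an empty page comes back.
collected = []
page = 0
while True:
    batch = fetch_page(page)
    if not batch:
        break
    collected.extend(batch)
    page += 1

# for: once the data is in memory, iterate over it directly.
total = 0
for value in collected:
    total += value

print(page, total)
```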

2. Data Structures

Python provides various built-in data structures that simplify data manipulation tasks. Understanding how to use a data structure efficiently is crucial for a data engineer.

Some of the most commonly used data structures are:

Lists: Mutable sequences of elements.

Tuples: Immutable sequences of elements.

Sets: Unordered collections of unique elements.

Dictionaries: Mappings of unique keys to values.

Here is an example of declaring and working with tuples:

example_tuple = (1, 2, 3)
print(example_tuple[0])  # Prints 1

Interview Question Sample 4: Lists and Tuples

Compare and contrast lists and tuples in Python. When would you choose to use a list over a tuple, and vice versa, in a data engineering scenario? Provide an example demonstrating the practical use of both.
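A short illustration of one common answer (the sales records are made up): tuples work well for fixed-shape, hashable records such as composite keys, while lists suit collections that grow or change during processing.

```python
# Tuples: fixed-shape and hashable, so they can serve as dictionary keys.
# Here (region, year) is a hypothetical composite key for aggregating sales.
sales = [("eu", 2023, 100), ("us", 2023, 250), ("eu", 2023, 50), ("eu", 2024, 80)]

totals: dict[tuple[str, int], int] = {}
for region, year, amount in sales:
    key = (region, year)
    totals[key] = totals.get(key, 0) + amount

# Lists: mutable, so they fit buffers that grow while a job runs.
rows_2024 = []
for row in sales:
    if row[1] == 2024:
        rows_2024.append(row)

# Modifying a tuple raises TypeError; a list could be changed in place.
try:
    sales[0][2] = 999
except TypeError as exc:
    print("tuples are immutable:", exc)
```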

Interview Question Sample 5: Dictionaries and Sets

Explain the fundamental differences between dictionaries and sets in Python. Share a use case where using a dictionary is more suitable than using a set in a data engineering context.
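A sketch of the use case the question asks for, with invented user data: a set answers "have I seen this?", while a dictionary is needed as soon as each key carries associated data, such as a lookup table used to enrich records.

```python
# Hypothetical incoming events, possibly containing repeated user IDs.
events = [
    {"user_id": 1, "action": "login"},
    {"user_id": 2, "action": "login"},
    {"user_id": 1, "action": "purchase"},
]

# Set: only membership matters, e.g. "which users were active?"
active_users = {e["user_id"] for e in events}

# Dict: each key maps to a value, e.g. a lookup table used to enrich records.
user_names = {1: "Ada", 2: "Grace"}
enriched = [{**e, "name": user_names.get(e["user_id"], "unknown")} for e in events]

print(sorted(active_users))  # [1, 2]
print(enriched[2]["name"])   # Ada
```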

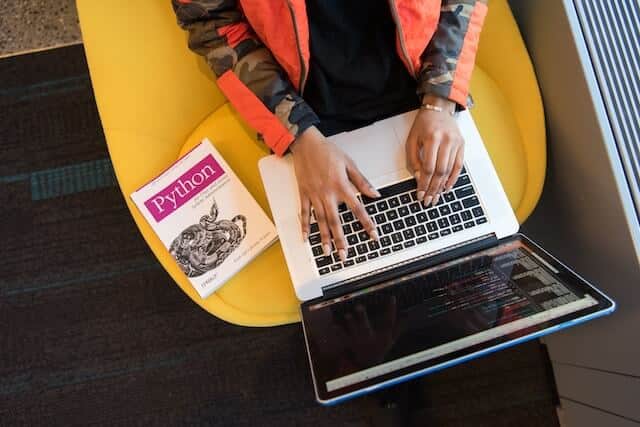

3. Python Libraries

There are numerous Python libraries that you’ll frequently use in your data engineering tasks. Some of the most important libraries for data processing and analysis include:

NumPy: A library for fast numerical computing on multi-dimensional arrays, with a broad set of mathematical functions.

Pandas: A library for data analysis with easy-to-use data structures like DataFrames.

SQLAlchemy: A popular SQL toolkit and Object Relational Mapper (ORM) for working with SQL databases.

Matplotlib: This library is essential for data visualization. It allows you to create various types of graphs, such as bar charts, line charts, and scatter plots.

These libraries, along with many others, will help you in your day-to-day tasks as a data engineer. When working with any new library, make sure to explore its documentation thoroughly and understand its capabilities.

Interview Question Sample 6: NumPy and Pandas

Explain the primary differences between NumPy and Pandas. When would you choose to use NumPy over Pandas or vice versa in a data engineering project? Provide a specific example showcasing the application of each library.

Interview Question Sample 7: SQLAlchemy and Database Interaction

Discuss the role of SQLAlchemy in data engineering, particularly concerning its use as an Object Relational Mapper (ORM). How would you utilize SQLAlchemy to interact with a SQL database in a data processing task? Provide a brief example to illustrate your answer.

4. Functions and Modules

There are various Python concepts that you’ll frequently use in your data engineering tasks. Some of the most crucial concepts for structuring and organizing your code include:

Functions: Defined blocks of reusable code that perform a specific task. Functions enhance code modularity and maintainability.

Modules: Python files containing reusable code, including variables, functions, and classes. Modules facilitate code organization and reusability across projects.

Understanding how to effectively use functions and modules is vital for streamlining code development and maintenance in data engineering projects.

Interview Question Sample 8: Functions in Python

Explain the purpose of functions in Python and how they contribute to code modularity. Provide an example of a scenario in data engineering where using a function would improve code readability and reusability.
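One possible scenario: the same email-cleaning rule is needed for several data sources. Wrapping it in a function (here a hypothetical `normalize_email` helper) gives one place to test and change the rule.

```python
from typing import Optional

def normalize_email(raw: Optional[str]) -> Optional[str]:
    """Hypothetical helper: trim and lowercase an email; None for blank input."""
    if raw is None:
        return None
    cleaned = raw.strip().lower()
    return cleaned or None  # an empty string after stripping becomes None

# The same rule applied to two different (invented) sources.
crm_emails = ["  Ada@Example.com", "", None]
web_emails = ["GRACE@example.com  "]

crm_clean = [normalize_email(e) for e in crm_emails]
web_clean = [normalize_email(e) for e in web_emails]
print(crm_clean)  # ['ada@example.com', None, None]
print(web_clean)  # ['grace@example.com']
```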

Interview Question Sample 9: Modules and Code Organization

Discuss the role of modules in Python and how they aid in code organization. When would you choose to create a separate module for a set of functions in a data engineering project? Provide an example illustrating the benefits of using modules for code management and maintenance.

Remember, mastering Python programming basics is essential for any data engineer. Keep practicing to strengthen your knowledge, and always stay up-to-date with new Python features and libraries. Now let’s look at some data science concepts you need to be familiar with and some interview questions you can expect.

Topic 2: Data Science Concepts

In the realm of data science, a solid understanding of core Python concepts is fundamental for success. Aspiring data engineers need to be well-versed in the following areas to effectively tackle data-related challenges.

1. Handling Structured and Unstructured Data

In your data engineer role, you will often work with both structured and unstructured data. Structured data refers to data that follows a fixed format, such as data stored in relational databases.

Unstructured data includes different formats like text, images, or audio files. Python, along with its libraries, is an excellent tool for handling both types of data.

Pandas is a powerful library for handling structured data. It provides DataFrames, which are used for organizing and manipulating tabular data efficiently. In contrast, for unstructured data, you can rely on libraries like BeautifulSoup and NLTK for web scraping and natural language processing, respectively.

Interview Question Sample 10: Pandas and DataFrames (Structured Data)

Explain the role of Pandas in handling structured data. How does it use DataFrames? Provide a specific example of a data engineering task where Pandas and DataFrames would be beneficial, and walk through the steps you would take.

Interview Question Sample 11: BeautifulSoup and NLTK (Unstructured Data)

Discuss the significance of libraries like BeautifulSoup and NLTK in handling unstructured data. Provide an example scenario where you would use BeautifulSoup for web scraping and NLTK for natural language processing in a data engineering project. Outline the steps involved in processing unstructured data using these libraries.

2. Data Preprocessing

Cleaning data is vital in data science, and Python, with Pandas and NumPy, makes it easy. Pandas has methods like dropna() for removing missing data and drop_duplicates() for removing duplicate rows, while NumPy handles fast numerical operations.
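A minimal sketch of the two Pandas methods mentioned above, on a small invented DataFrame (it assumes pandas and NumPy are installed).

```python
import numpy as np
import pandas as pd

# Made-up orders: row 2 duplicates row 1, and row 3 has a missing amount.
df = pd.DataFrame(
    {
        "order_id": [1, 2, 2, 3],
        "amount": [10.0, 20.0, 20.0, np.nan],
    }
)

# drop_duplicates() removes rows that are identical across all columns.
deduped = df.drop_duplicates()

# dropna() removes rows that contain missing values (NaN/None) by default.
cleaned = deduped.dropna()

# NumPy handles the numeric work, e.g. a log transform of the amounts.
cleaned = cleaned.assign(log_amount=np.log(cleaned["amount"]))
print(cleaned)
```

Both methods also accept a `subset=` argument to consider only certain columns, e.g. `df.drop_duplicates(subset=["order_id"])`.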

For dealing with categorical data, scikit-learn offers LabelEncoder and OneHotEncoder. To split data into training and test sets, use scikit-learn’s train_test_split(). Python has you covered for all your data prep needs.

Interview Question Sample 12: Data Cleaning with Pandas and NumPy

Explain the role of Pandas in data cleaning. Provide examples of situations where you would use dropna() and drop_duplicates() functions. Additionally, discuss how NumPy complements Pandas in data engineering tasks, particularly in handling numerical operations.

Interview Question Sample 13: Handling Categorical Data and Data Splitting

Describe the purpose of LabelEncoder and OneHotEncoder in handling categorical data. How do these tools contribute to data preprocessing in Python, and can you provide an example scenario where you would use them? Furthermore, explain the significance of train_test_split() in preparing data for machine learning models.

3. Database Types

In a Python interview for a data engineer role, you might encounter questions about different database types. Understanding these distinctions is crucial for effective data handling.

The two primary types are:

A. Relational Databases

These databases organize data into tables with rows and columns, following a predefined schema.

Relational databases include:

PostgreSQL

MySQL

Microsoft SQL Server

Python connects to relational databases using libraries like psycopg2 and mysql-connector. Object-Relational Mappers (ORMs) like SQLAlchemy provide a Pythonic way to interact with relational databases.
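psycopg2 and mysql-connector both follow Python’s DB-API 2.0 pattern: open a connection, get a cursor, execute parameterized SQL, commit, and close. So that the sketch runs without a database server, it uses the standard library’s `sqlite3` module, which follows the same pattern; the `customers` table is hypothetical. One difference to keep in mind is the placeholder style: `sqlite3` uses `?`, while psycopg2 and mysql-connector use `%s`.

```python
import sqlite3

# In-memory database as a stand-in for a PostgreSQL/MySQL server.
conn = sqlite3.connect(":memory:")
try:
    cur = conn.cursor()
    cur.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, country TEXT)")

    # Parameterized inserts: the driver handles quoting, which prevents SQL injection.
    rows = [(1, "Ada", "UK"), (2, "Grace", "US"), (3, "Linus", "FI")]
    cur.executemany("INSERT INTO customers VALUES (?, ?, ?)", rows)
    conn.commit()

    # SELECT a specific column with a filter, then fetch the results.
    cur.execute("SELECT name FROM customers WHERE country = ? ORDER BY name", ("US",))
    us_names = [row[0] for row in cur.fetchall()]
    print(us_names)  # ['Grace']
finally:
    conn.close()
```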

Interview Question Sample 14: Python Connectivity to Relational Databases

Explain how psycopg2 and mysql-connector connect Python to databases like PostgreSQL and MySQL. Mention briefly how SQLAlchemy enhances this interaction. Provide a concise example using either psycopg2 or mysql-connector in a data engineering task.

Interview Question Sample 15: Relational Database Basics

Explain the core structure of a relational database—how it organizes data with tables, rows, and columns. Give an example scenario where a relational database is the preferred choice for data storage.

B. NoSQL Databases

NoSQL databases are more flexible, accommodating various data structures like key-value pairs, graphs, or documents. They don’t rely on a fixed schema.

NoSQL databases include:

MongoDB

Cassandra

Redis

Python interfaces with NoSQL databases through libraries like pymongo for MongoDB. NoSQL databases are especially useful when dealing with large volumes of unstructured or semi-structured data.

Interview Question Sample 16: NoSQL Database Flexibility

Explain the key characteristic that distinguishes NoSQL databases from relational databases. Why are NoSQL databases considered more flexible, and how does this flexibility cater to different data structures?

Interview Question Sample 17: Python and NoSQL Connectivity

Name a Python library for connecting to MongoDB, a NoSQL database. Explain how this library, like pymongo, helps Python work with MongoDB. Give a brief example of using pymongo in a data engineering task with unstructured or semi-structured data.

You will almost always be asked about SQL in a data engineering interview, so here are the concepts you need to know and some interview questions to help you prepare.

Topic 3: SQL Concepts

1. SQL Queries

In the realm of data engineering interviews, a robust grasp of SQL queries is indispensable. These queries serve as the foundation for fetching and manipulating data within relational databases.

Key SQL commands crucial for a data engineer include:

SELECT: Essential for retrieving data from the database.

INSERT: Used to add new data to the database.

UPDATE: Employed to modify existing data in the database.

DELETE: Utilized for removing data from the database.

Moreover, data engineers need to be adept at advanced SQL functionality. Proficiency with clauses like JOIN and GROUP BY, along with aggregate functions such as COUNT(), SUM(), and AVG(), is crucial for writing efficient, well-refined queries in a Python-centric data engineering environment.
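A small sketch of JOIN, GROUP BY, and aggregate functions working together, run through Python’s built-in `sqlite3` module so it needs no server (the schema and rows are invented). Pushing the aggregation into the database means Python receives one summary row per region instead of every order.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'EU'), (2, 'US'), (3, 'EU');
    INSERT INTO orders VALUES (1, 1, 100), (2, 1, 50), (3, 2, 75), (4, 3, 25);
    """
)

# JOIN links each order to its customer; GROUP BY + aggregates summarize per region.
query = """
    SELECT c.region, COUNT(o.id) AS n_orders, SUM(o.amount) AS revenue
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    GROUP BY c.region
    ORDER BY revenue DESC
"""
results = conn.execute(query).fetchall()
conn.close()
print(results)
```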

Interview Question Sample 18: Duplicate Data Points

How would you handle duplicate data points in an SQL query?
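One way to answer, sketched against an in-memory SQLite table through Python’s `sqlite3` module (the `signups` table and its data are hypothetical): first find the duplicates, then either filter them out at read time or delete them.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE signups (email TEXT, signup_date TEXT)")
conn.executemany(
    "INSERT INTO signups VALUES (?, ?)",
    [("a@x.com", "2024-01-01"), ("b@x.com", "2024-01-02"), ("a@x.com", "2024-01-01")],
)

# 1. Inspect: which values appear more than once?
dupes = conn.execute(
    "SELECT email, COUNT(*) FROM signups GROUP BY email HAVING COUNT(*) > 1"
).fetchall()

# 2. Read without duplicates: DISTINCT collapses identical rows in the result.
unique_rows = conn.execute(
    "SELECT DISTINCT email, signup_date FROM signups ORDER BY email"
).fetchall()

# 3. Remove duplicates permanently, keeping the first stored row per email.
#    rowid is SQLite-specific; other databases would use a primary key or ROW_NUMBER().
conn.execute(
    "DELETE FROM signups WHERE rowid NOT IN (SELECT MIN(rowid) FROM signups GROUP BY email)"
)
remaining = conn.execute("SELECT COUNT(*) FROM signups").fetchone()[0]
conn.close()
```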

Interview Question Sample 19: Advanced SQL Functionalities

Why is proficiency in advanced SQL functionality, such as JOIN and GROUP BY, important for a data engineer? Can you illustrate a situation where using these clauses and aggregate functions would optimize a query in a Python-centric data engineering task?

2. JSON and NoSQL

Many NoSQL databases store data as JSON or JSON-like documents, and this often comes up in Python data engineering interviews. JSON is lightweight, human-readable, and easy to parse.

Document formats built on JSON, such as MongoDB’s BSON (a binary encoding of JSON-like documents), accommodate complex, nested data structures. As a data engineer, you should be comfortable querying these documents (e.g., with MongoDB’s find() and aggregate() methods) and creating indexes to improve query performance.
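To make the nested-query idea concrete without a running MongoDB server, the sketch below parses invented JSON documents with the standard `json` module and filters on a nested field. With pymongo, the equivalent query would be written as `collection.find({"address.city": "London"})`, using MongoDB’s dot notation for nested fields, where `collection` is a hypothetical pymongo collection object.

```python
import json

# Hypothetical documents as they might come back from a document store.
raw = """
[
  {"_id": 1, "name": "Ada",   "address": {"city": "London", "zip": "N1"}},
  {"_id": 2, "name": "Grace", "address": {"city": "New York"}},
  {"_id": 3, "name": "Linus", "tags": ["kernel"]}
]
"""
docs = json.loads(raw)

# Plain-Python equivalent of the MongoDB filter {"address.city": "London"}.
# .get() with a default avoids KeyError when a document lacks the nested field.
london = [d for d in docs if d.get("address", {}).get("city") == "London"]

print([d["name"] for d in london])  # ['Ada']
```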

Interview Question Sample 20: Importance of JSON in NoSQL

Explain the significance of using JSON in NoSQL databases for a data engineer. Why is it preferred, and how does it contribute to the flexibility of data storage?

Interview Question Sample 21: JSON Querying in MongoDB

In the context of MongoDB, why is mastering JSON querying important for a data engineer? Provide a brief explanation of the find() and aggregate() methods and their role in extracting information from JSON documents.

Next let’s look at some of the data engineering tools and frameworks concepts and questions you should be familiar with.

Topic 4: Data Engineering Tools and Frameworks

In anticipation of a Python data engineering interview, gaining proficiency in essential tools and frameworks is paramount. Let’s explore key categories crucial for data engineers and how to prepare for them:

1. ETL and Data Modeling

ETL Process: Understand the Extract, Transform, Load (ETL) process, involving tools like Apache NiFi, Talend, and Microsoft SQL Server Integration Services (SSIS). Familiarize yourself with transforming data from diverse sources to fit specific requirements.

Data Modeling Techniques: Grasp data modeling concepts such as the star schema and snowflake schema. Gain insight into data warehousing principles, including the difference between OLAP (Online Analytical Processing), which data warehouses are built for, and OLTP (Online Transactional Processing), which serves day-to-day operational systems.

Interview Question Sample 22: ETL Proficiency

Explain the Extract, Transform, Load (ETL) process and its significance in data engineering. Can you provide an example of how you’ve used ETL tools like Apache NiFi, Talend, or Microsoft SQL Server Integration Services (SSIS) to transform data from diverse sources for a specific project?
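Tools like NiFi, Talend, and SSIS orchestrate ETL at scale, but the three stages themselves can be shown in a few lines of plain Python. The sketch below is a toy pipeline under simple assumptions (an inline CSV source, an in-memory SQLite target, invented columns), not a substitute for those tools.

```python
import csv
import io
import sqlite3

# Extract: a CSV source (inlined here; in practice a file, an API, or object storage).
source = io.StringIO(
    "order_id,amount,currency\n"
    "1,10.50,usd\n"
    "2,,usd\n"
    "3,7.25,EUR\n"
)
rows = list(csv.DictReader(source))

# Transform: drop rows missing an amount, cast types, normalize currency codes.
transformed = [
    (int(r["order_id"]), float(r["amount"]), r["currency"].upper())
    for r in rows
    if r["amount"]
]

# Load: write the cleaned rows into a target table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL, currency TEXT)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", transformed)
conn.commit()
loaded = conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
conn.close()
```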

Interview Question Sample 23: Data Modeling Techniques

Describe the difference between star schema and snowflake schema in data modeling. How do these techniques contribute to efficient data management, and in what scenarios would you choose one over the other?
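A minimal star-schema sketch (table names and data are invented), expressed as SQLite DDL through Python’s `sqlite3` module: one central fact table references flat dimension tables. In a snowflake schema, a dimension such as `dim_product` would itself be normalized further, for example by moving `category` into its own table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimension tables hold descriptive attributes.
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
    CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);

    -- The fact table holds measures plus foreign keys to each dimension.
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product(product_key),
        date_key INTEGER REFERENCES dim_date(date_key),
        units INTEGER,
        revenue REAL
    );

    INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
    INSERT INTO dim_date VALUES (20240101, 2024, 1), (20240201, 2024, 2);
    INSERT INTO fact_sales VALUES
        (1, 20240101, 2, 2000), (2, 20240101, 1, 300), (1, 20240201, 1, 1000);
    """
)

# A typical OLAP-style query: join the fact table to its dimensions, then aggregate.
rows = conn.execute(
    """
    SELECT p.category, d.month, SUM(f.revenue)
    FROM fact_sales f
    JOIN dim_product p ON p.product_key = f.product_key
    JOIN dim_date d ON d.date_key = f.date_key
    GROUP BY p.category, d.month
    ORDER BY p.category, d.month
    """
).fetchall()
conn.close()
```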

2. Apache Hadoop and Spark

Apache Hadoop: Explore Apache Hadoop, an open-source framework for distributed storage and processing of large datasets. Delve into its core components, the Hadoop Distributed File System (HDFS), MapReduce, and YARN, including how HDFS splits each data file into blocks that are replicated across nodes.

Also consider how a data warehouse schema can be applied within the Hadoop ecosystem to optimize querying and analysis while staying compatible with relational databases.

Apache Spark: Develop a strong understanding of Apache Spark, a robust distributed computing engine with fast data processing capabilities. Recognize its advantages over Hadoop’s MapReduce, particularly in terms of in-memory processing. Hands-on experience with both Hadoop and Spark is valuable.

Interview Question Sample 24: Apache Hadoop Fundamentals

Briefly explain the fundamentals of Apache Hadoop and its role in distributed storage and processing. Name and describe two key components of Hadoop, highlighting their significance in effective data management.

Interview Question Sample 25: Apache Spark Advantages

Outline the advantages of Apache Spark over Hadoop’s MapReduce, with a focus on in-memory processing. How does Spark enhance data processing speed, and in what scenarios would you choose Apache Spark over Apache Hadoop for a data engineering task?

To round out your interview preparation, here are some advanced Python concepts to refamiliarize yourself with.

Topic 5: Advanced Python Topics for Data Engineers

In the dynamic field of data engineering, a strong command of advanced Python topics is essential. Here are key areas that data engineers should master, along with interview questions to assess their proficiency:

1. Concurrency and Parallelism

Understand the concepts of concurrency and parallelism in Python to optimize data processing tasks by efficiently utilizing system resources.

Interview Question Sample 26:

Can you explain the difference between concurrency and parallelism in Python? How would you implement concurrent or parallel processing to enhance the performance of a data processing script?
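A minimal sketch, assuming an I/O-bound workload simulated with `time.sleep` (the `fetch` function is hypothetical). Threads give concurrency: in standard CPython builds the global interpreter lock (GIL) lets only one thread run Python bytecode at a time, but a thread blocked on I/O releases it, so the waits overlap. For CPU-bound work, `concurrent.futures.ProcessPoolExecutor` offers true parallelism across cores through the same interface.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fetch(source_id: int) -> str:
    """Hypothetical I/O-bound task, e.g. downloading one file."""
    time.sleep(0.2)  # stands in for network latency
    return f"data-{source_id}"

sources = range(5)

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=5) as pool:
    # map() returns results in input order, even if tasks finish out of order.
    results = list(pool.map(fetch, sources))
elapsed = time.perf_counter() - start

# Five 0.2 s waits overlap, so this takes roughly 0.2 s rather than 1 s.
print(results, f"{elapsed:.2f}s")
```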

2. Decorator Functions

Explore the use of decorator functions to enhance code modularity and readability, a crucial aspect of maintaining scalable and efficient data engineering projects.

Interview Question Sample 27:

What are decorator functions in Python, and how can they be beneficial in the context of data engineering? Provide an example of how you would use a decorator function to improve the functionality of a data processing task.
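One common data engineering use is cross-cutting behavior such as retrying flaky network calls. The `retry` decorator below is a hypothetical sketch written for illustration, not a library API.

```python
import functools
import time

def retry(times: int = 3, delay: float = 0.0, exceptions=(Exception,)):
    """Hypothetical decorator factory: re-run the wrapped function on failure."""
    def decorator(func):
        @functools.wraps(func)  # keep the original function's name and docstring
        def wrapper(*args, **kwargs):
            for attempt in range(1, times + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions:
                    if attempt == times:
                        raise  # give up after the last attempt
                    time.sleep(delay)
        return wrapper
    return decorator

calls = {"n": 0}

@retry(times=3)
def flaky_extract():
    """Simulated extract step that fails twice before succeeding."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary outage")
    return ["row1", "row2"]

data = flaky_extract()
print(data, calls["n"])
```

The decorated function keeps its business logic free of retry plumbing, and the same decorator can wrap any other extract or load step.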

3. Memory Management

Dive into advanced memory management techniques in Python to optimize memory usage in data-intensive applications.

Interview Question Sample 28:

How does Python manage memory, and what strategies would you employ to optimize memory usage in a data engineering project dealing with large datasets?
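CPython frees most objects through reference counting, with a cyclic garbage collector (exposed through the `gc` module) to reclaim reference cycles. Two widely used optimization techniques are sketched below with invented data: generators avoid holding a whole dataset in memory, and `__slots__` trims per-object overhead when many small records are created. Exact byte counts vary by Python version and platform, so the sketch prints them rather than assuming values.

```python
import sys

# 1. Generators: produce values on demand instead of materializing them all.
squares_list = [n * n for n in range(1_000_000)]  # every value held in memory
squares_gen = (n * n for n in range(1_000_000))   # values computed lazily
print(sys.getsizeof(squares_list), sys.getsizeof(squares_gen))
# sys.getsizeof counts only the container itself, not the ints it references,
# yet the list is already megabytes while the generator object stays tiny.

# 2. __slots__: fixed attribute storage and no per-instance __dict__.
class Reading:
    __slots__ = ("sensor_id", "ts", "value")

    def __init__(self, sensor_id: int, ts: int, value: float):
        self.sensor_id = sensor_id
        self.ts = ts
        self.value = value

r = Reading(1, 1700000000, 21.5)
print(hasattr(r, "__dict__"))  # False
```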

4. Metaprogramming

Explore metaprogramming concepts to dynamically alter the behavior of Python code, enabling more flexible and adaptable data engineering solutions.

Interview Question Sample 29:

What is metaprogramming in Python, and in what scenarios would you consider using metaclasses to modify class behavior in a data engineering project?
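A sketch of one scenario where a metaclass can help: automatically registering pipeline step classes so they can be looked up by name from configuration. The class names and the `run()` contract are hypothetical. In many codebases, `__init_subclass__` or a class decorator achieves the same result with less machinery.

```python
class PipelineStepMeta(type):
    """Hypothetical metaclass that registers every step class by lowercase name."""
    registry: dict[str, type] = {}

    def __new__(mcls, name, bases, namespace):
        cls = super().__new__(mcls, name, bases, namespace)
        if bases:  # skip the base class itself, register only subclasses
            if "run" not in namespace:
                raise TypeError(f"{name} must define run()")
            mcls.registry[name.lower()] = cls
        return cls


class PipelineStep(metaclass=PipelineStepMeta):
    """Base class; subclasses are registered automatically."""


class Deduplicate(PipelineStep):
    def run(self, rows):
        return list(dict.fromkeys(rows))  # keeps first occurrence, preserves order


class Uppercase(PipelineStep):
    def run(self, rows):
        return [r.upper() for r in rows]


# Build a pipeline from configuration strings (e.g. loaded from a config file).
config = ["deduplicate", "uppercase"]
rows = ["a", "b", "a"]
for step_name in config:
    rows = PipelineStepMeta.registry[step_name]().run(rows)
print(rows)  # ['A', 'B']
```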

5. Asynchronous Programming

Embrace asynchronous programming to handle concurrent tasks efficiently, particularly useful in scenarios where data retrieval or processing involves waiting.

Interview Question Sample 30:

How does asynchronous programming differ from synchronous programming in Python? Provide an example of a data engineering task where asynchronous programming would offer performance benefits.
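A minimal sketch of such a task: pulling from several APIs where most of the time is spent waiting on the network. The endpoint names and latencies are invented, and `asyncio.sleep` stands in for a real asynchronous HTTP client.

```python
import asyncio
import time

async def fetch(endpoint: str, latency: float) -> dict:
    """Hypothetical async API call; asyncio.sleep stands in for network I/O."""
    await asyncio.sleep(latency)
    return {"endpoint": endpoint, "status": "ok"}

async def main() -> list[dict]:
    endpoints = {"orders": 0.3, "customers": 0.2, "products": 0.1}
    # gather() runs the coroutines concurrently on a single thread and
    # returns results in the order the coroutines were passed in.
    return await asyncio.gather(*(fetch(name, t) for name, t in endpoints.items()))

start = time.perf_counter()
results = asyncio.run(main())
elapsed = time.perf_counter() - start

# Total time is close to the slowest call (~0.3 s), not the sum (~0.6 s).
print([r["endpoint"] for r in results], f"{elapsed:.2f}s")
```

A synchronous version would call each endpoint in turn and wait for the sum of all latencies.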

These advanced Python topics not only showcase a data engineer’s depth of knowledge but also their ability to implement sophisticated solutions to tackle the challenges of modern data processing.

Now that you have a good grasp of the topics you might encounter, let’s talk about how to ace the interview.

How to Prepare for a Python Interview

Preparing for a Python interview as a data engineer requires a combination of technical expertise and soft skills. Here’s a guide to ensure you’re well-equipped for the challenges:

Technical Skills Refinement

Review Core Python Concepts: Ensure a solid understanding of core Python concepts such as data structures, loops, and functions. Practice coding exercises to reinforce your proficiency.

Deepen SQL Knowledge: Brush up on SQL queries, especially those related to data manipulation and retrieval. Be prepared to showcase your ability to work with relational databases.

Explore Python Libraries: Familiarize yourself with key Python libraries relevant to data engineering tasks, such as Pandas, NumPy, and SQLAlchemy. Practice implementing solutions using these tools.

Advanced Python Topics: Delve into advanced Python topics, including concurrency, decorator functions, memory management, metaprogramming, and asynchronous programming. Understand how these concepts apply to data engineering scenarios.

Beyond technical skills, there are also soft skills you should practice.

Soft Skills for Data Engineers

Problem-Solving Skills: Demonstrate your ability to analyze business problems and identify their root causes. Showcase innovative and efficient problem-solving approaches. Highlight instances where you adapted your approach when faced with unexpected challenges.

Business Acumen: Understand the industry and the specific business environment. Establish connections between data and decision-making processes to align data engineering efforts with organizational needs. Illustrate experiences collaborating with business analysts and stakeholders to deliver outcomes that address business objectives.

Remember that the learning journey never ends with Python. Here is how to stay ahead of the curve.

Continuous Learning and Preparation

Stay Updated on Industry Trends: Keep abreast of the latest developments in the data engineering field, including emerging technologies, tools, and best practices.

Practice Mock Interviews: Conduct mock interviews to simulate the interview environment. Seek feedback on both technical and soft skills aspects.

Build a Portfolio: Showcase your projects, highlighting the technical challenges you’ve overcome and the business impact of your work.

By combining technical expertise with strong problem-solving and business acumen, you’ll present yourself as a well-rounded data engineer ready to excel in a Python interview.

Final Thoughts

In the fast-evolving landscape of data engineering, a solid grasp of Python is instrumental for success. Remember, it’s not just about syntax proficiency; it’s about showcasing your ability to apply Python to real-world data challenges.

By combining technical acumen with problem-solving skills and a deep understanding of business context, you’ll not only ace Python interviews but also contribute significantly to the evolving realm of data engineering.

Best of luck on your Python interview journey!

Frequently Asked Questions

What are the key differences between Pandas and NumPy?

While both Pandas and NumPy are essential libraries for data manipulation and analysis in Python, they have some key differences. NumPy primarily focuses on numerical computations using arrays, while Pandas provides powerful data structures such as DataFrames and Series for handling structured data.

With Pandas, you can easily perform operations like filtering, grouping, and joining data, making it a better choice for handling complex data. On the other hand, NumPy excels at performing mathematical operations, such as matrix multiplication and element-wise operations, on large arrays.

How do you handle large datasets with Python?

Handling large datasets in Python can be challenging due to limitations in memory and computational power. However, there are several techniques you can employ to overcome these challenges:

Opt for efficient data structures and libraries, such as Pandas or Dask, for data manipulation.

Use tools like SQLite or other SQL databases to store and efficiently query data.

Process the data in chunks by reading, processing, and writing a smaller subset of the data at a time.

Employ parallel or distributed computing techniques, such as the multiprocessing module or an Apache Spark cluster, to spread the work across multiple CPU cores or machines.
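The chunking technique can be sketched with only the standard library (the file contents are invented, and `read_in_chunks` is a hypothetical helper). With Pandas, `pd.read_csv(..., chunksize=...)` offers the same pattern by returning an iterator of DataFrames.

```python
import csv
import io
from itertools import islice

def read_in_chunks(reader, chunk_size: int):
    """Hypothetical helper: yield lists of up to chunk_size rows, one at a time."""
    while True:
        chunk = list(islice(reader, chunk_size))
        if not chunk:
            return
        yield chunk

# Stand-in for a large file on disk; in practice: open("big.csv", newline="").
big_file = io.StringIO("amount\n" + "\n".join(str(i) for i in range(1, 10_001)))
reader = csv.DictReader(big_file)

running_total = 0
chunks_seen = 0
for chunk in read_in_chunks(reader, chunk_size=1_000):
    # Process one chunk, keep only the aggregate, and let the chunk be freed.
    running_total += sum(int(row["amount"]) for row in chunk)
    chunks_seen += 1

print(chunks_seen, running_total)  # 10 50005000
```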

Can you explain the map-reduce paradigm in Python?

The map-reduce paradigm is a programming technique used to process large datasets in parallel. In Python, the built-in map function applies a given function to every element of an iterable (e.g., a list) and returns a lazy iterator over the results.

The reduce function, provided by the functools module, combines the elements of an iterable using a specified two-argument function, ultimately reducing the iterable to a single accumulated value.

The map-reduce model follows this two-step process:

Map: Apply a function to each element in the input data, generating a set of intermediate results.

Reduce: Combine the intermediate results using a reduction function, producing the final output.

This paradigm provides an efficient way to parallelize and distribute processing tasks, making it ideal for handling large datasets.
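A word-count sketch of the two steps using the built-in `map` and `functools.reduce` (the sample documents are invented). This runs in a single process; frameworks such as Hadoop MapReduce or Spark distribute the same two phases across a cluster.

```python
from collections import Counter
from functools import reduce

documents = [
    "spark and hadoop",
    "hadoop stores data",
    "spark processes data",
]

# Map: turn each document into partial word counts. Each call is independent,
# which is what allows this phase to run on separate workers.
partial_counts = map(lambda doc: Counter(doc.split()), documents)

# Reduce: merge the partial results pairwise into a single total.
word_counts = reduce(lambda acc, c: acc + c, partial_counts, Counter())

print(word_counts)
```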

How do you merge datasets using Python?

In Python, you can use the Pandas library to merge datasets with the help of the merge function. The merge function combines two DataFrames based on a specified key column or index using various types of database-style joins, such as inner, outer, left, and right. You can also control how the data is combined, using parameters like on, left_on, right_on, and how.

For example:

import pandas as pd

df1 = pd.DataFrame(...)
df2 = pd.DataFrame(...)
merged_df = pd.merge(df1, df2, on='key_column', how='inner')

This code snippet demonstrates merging two DataFrames (df1 and df2) using an inner join on the specified key_column.
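A runnable variant, with small invented DataFrames standing in for `df1` and `df2` and a hypothetical `customer_id` key, showing how the `how` parameter changes which rows survive (it assumes pandas is installed).

```python
import pandas as pd

# Invented sample data: df2 has no row for customer 3 and an extra customer 4.
df1 = pd.DataFrame({"customer_id": [1, 2, 3], "name": ["Ada", "Grace", "Linus"]})
df2 = pd.DataFrame({"customer_id": [1, 2, 4], "total": [120.0, 80.0, 55.0]})

inner = pd.merge(df1, df2, on="customer_id", how="inner")  # only keys present in both
left = pd.merge(df1, df2, on="customer_id", how="left")    # every df1 row; NaN if no match

# indicator=True adds a "_merge" column recording where each row came from.
outer = pd.merge(df1, df2, on="customer_id", how="outer", indicator=True)
print(outer)
```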

How do you optimize performance for an ETL pipeline in Python?

To optimize the performance of an ETL (Extract, Transform, Load) pipeline in Python, consider the following best practices:

Use efficient libraries like Pandas or Dask for manipulating and processing data.

Optimize your data transformation steps by using built-in, vectorized functions instead of explicit Python loops.

Make use of parallel processing or multiprocessing libraries when transforming large datasets.

Use in-memory caching or data storage solutions to speed up data retrieval.

Monitor and profile your code to identify bottlenecks and iteratively optimize performance.

Explain the use of generators in Python.

In Python, generators are a type of iterator that produce a sequence of values on the fly, without computing or storing all the values in memory upfront.

Generators are defined using a special type of function that includes the yield keyword, which suspends execution and returns a value to the caller. When a generator function is called, it returns a generator object that can be iterated over using a for loop or the next() function.

Generators are particularly useful when working with large data sets or streaming data, as they allow you to process data one piece at a time, significantly reducing the memory footprint.
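A small sketch of a generator that parses newline-delimited JSON lazily (the record fields are invented, and `parse_records` is a hypothetical helper).

```python
import io
import json

def parse_records(lines):
    """Generator: yield one parsed record at a time, skipping blank lines."""
    for line in lines:
        line = line.strip()
        if line:
            yield json.loads(line)  # execution pauses here until the next value is requested

# Stand-in for a large newline-delimited JSON file opened with open(...).
stream = io.StringIO('{"id": 1, "ok": true}\n\n{"id": 2, "ok": false}\n{"id": 3, "ok": true}\n')

records = parse_records(stream)  # nothing is read yet; this only creates the generator
first = next(records)            # reads and parses just the first record
ok_ids = [r["id"] for r in records if r["ok"]]  # consumes the remaining records lazily

print(first, ok_ids)
```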