Neural networks are a core component of modern artificial intelligence and machine learning. They are loosely inspired by the way the human brain processes information, and they enable machines to learn patterns from data and use those patterns to make predictions or decisions.

Neural networks can be applied to various tasks, such as image recognition, speech processing, and even playing video games. The various types of neural networks are designed to address specific problems and are more effective in certain applications than others.

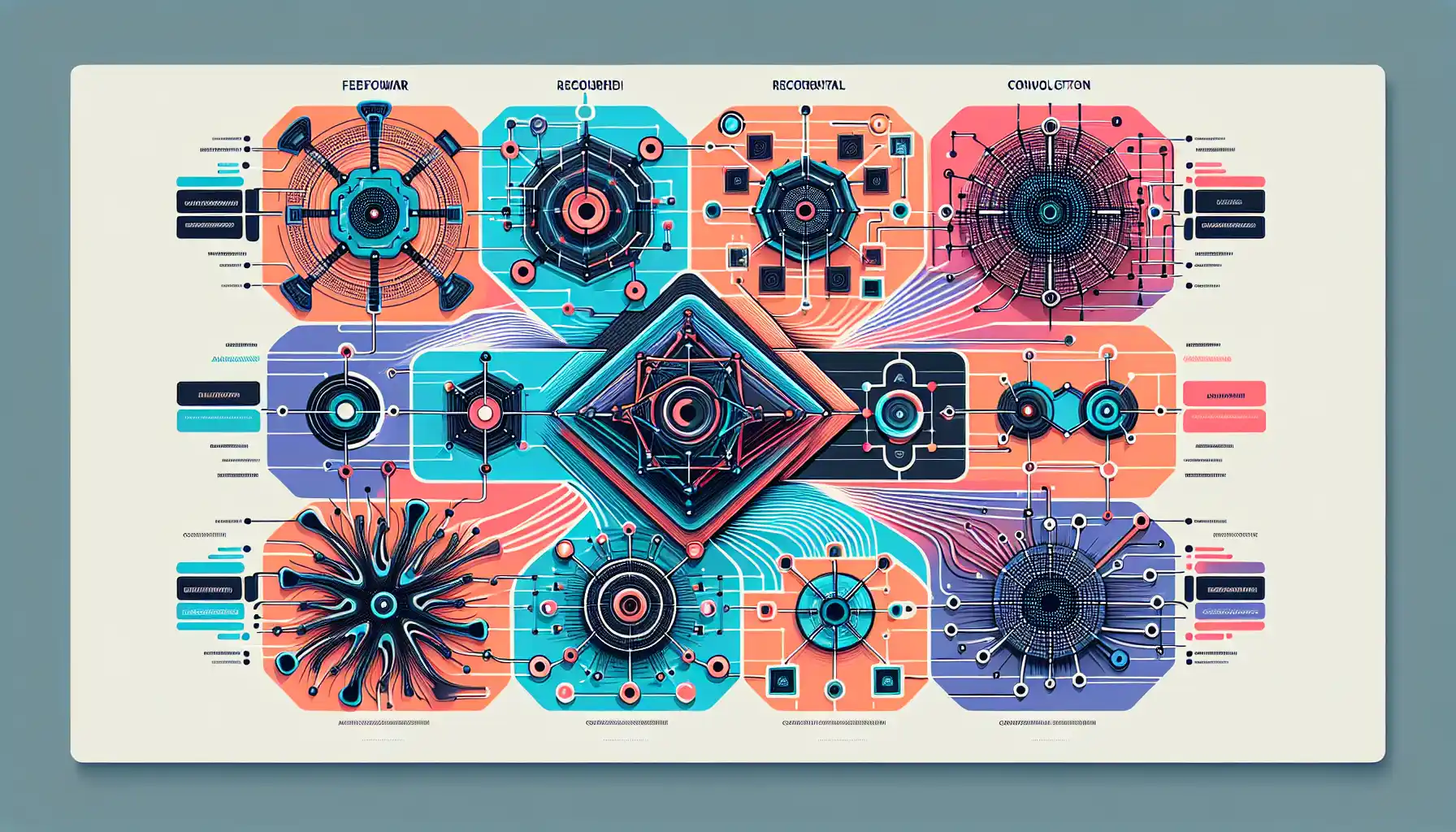

The main types of neural networks are:

- Feedforward Neural Networks

- Convolutional Neural Networks (CNN)

- Recurrent Neural Networks (RNN)

- Long Short-Term Memory Networks (LSTM)

- Gated Recurrent Units (GRU)

- Autoencoder Neural Networks

- Generative Adversarial Networks (GAN)

- Deep Belief Networks (DBN)

- Radial Basis Function Networks (RBF)

- Echo State Networks (ESN)

This article explains what each of these types of neural networks is and what it is used for.

But before we get to the main types of neural networks, let’s go over the basics of neural networks.

Understanding the Basics of Neural Networks

Neural networks, also known as artificial neural networks, are computational models loosely based on the way the human brain processes information.

They consist of a large number of interconnected processing elements, called neurons, which work together to perform complex tasks.

These neurons are organized into layers, and the connections between neurons are represented by weights. The network learns by adjusting these weights based on input data and desired output.

A simple neural network has three kinds of layers:

- Input Layer: This is the layer that receives input data and passes it on to the next layer.

- Hidden Layers: These are intermediate layers between the input and output layers. They are responsible for extracting features and patterns from the input data.

- Output Layer: This is the final layer that produces the output of the network based on the processed information from the hidden layers.

When the input data is fed into the neural network, it undergoes a series of mathematical operations that are collectively called forward propagation. These operations produce an output, which is then compared to the desired output.

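The network learns by minimizing the difference between the actual and desired output. A process called backpropagation works out how much each weight contributed to that difference, and an optimization algorithm such as gradient descent then adjusts the weights to reduce it.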
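As a minimal sketch of the forward propagation and backpropagation loop described above, the following Python trains a single sigmoid neuron on the logical OR function. The dataset, the squared-error loss, the learning rate, and all function names are illustrative assumptions, not the API of any particular library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(weights, bias, inputs):
    """Forward propagation: weighted sum of the inputs, then a sigmoid activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def total_loss(weights, bias, data):
    """Sum of squared errors between the actual and desired outputs."""
    return sum(0.5 * (forward(weights, bias, x) - t) ** 2 for x, t in data)

def train(data, learning_rate=0.5, epochs=1000):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in data:
            output = forward(weights, bias, inputs)
            # Backpropagation via the chain rule:
            # dLoss/dz = (output - target) * sigmoid'(z), with sigmoid'(z) = output * (1 - output)
            delta = (output - target) * output * (1.0 - output)
            # Gradient descent step: move each weight against its gradient.
            weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * delta
    return weights, bias

# Assumed toy dataset: the logical OR of two inputs.
OR_DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

initial_loss = total_loss([0.0, 0.0], 0.0, OR_DATA)
weights, bias = train(OR_DATA)
final_loss = total_loss(weights, bias, OR_DATA)
print(f"loss before training: {initial_loss:.3f}, after: {final_loss:.3f}")
```

With only one neuron the chain rule has a single link. In a multi-layer network, the same calculation is repeated layer by layer from the output back toward the input.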

Now let’s take a look at the different types of neural networks that are used in machine learning applications.

Main Types of Neural Networks

There are several types of neural networks that are used for various applications. We’ll look at some of the most commonly used neural networks.

- Feedforward Neural Networks

- Convolutional Neural Networks

- Recurrent Neural Networks

- Long Short-Term Memory Networks

- Gated Recurrent Units

- Autoencoder Neural Networks

- Generative Adversarial Networks

- Deep Belief Networks

- Radial Basis Function Networks

- Echo State Networks

- Feedforward Neural Networks (FNN)

A feedforward neural network is the simplest and most common type of neural network. Information flows in one direction, from the input layer, through the hidden layers (if any), and finally to the output layer.

There are no loops or cycles in the network, meaning the output of each layer serves as the input to the next layer without any feedback.

These networks are mainly used for supervised learning tasks, where the input data is labeled, and the network is trained to produce the correct output for a given input.
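To make the one-directional flow concrete, here is a small sketch of a feedforward pass through one hidden layer. The weights are hand-picked (not learned) so that the network computes XOR, and the helper names `dense` and `xor_network` are hypothetical.

```python
def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

# Hand-picked (not trained) parameters, chosen so that the output equals XOR.
HIDDEN_W, HIDDEN_B = [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]
OUTPUT_W, OUTPUT_B = [[1.0, -2.0]], [0.0]

def xor_network(x1, x2):
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, relu)       # input layer -> hidden layer
    output = dense(hidden, OUTPUT_W, OUTPUT_B, identity)     # hidden layer -> output layer
    return output[0]

for a in (0.0, 1.0):
    for b in (0.0, 1.0):
        print(a, b, xor_network(a, b))
```

Each layer only ever reads the output of the layer before it, which is what "no loops or cycles" means in practice. In supervised training the weights would be learned from labeled examples instead of written by hand.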

- Convolutional Neural Networks (CNN)

Convolutional neural networks are a specialized type of feedforward network designed for processing and analyzing spatial data, such as images.

They are widely used in computer vision tasks.

CNNs are made up of multiple layers, including convolutional, pooling, and fully connected layers.

The convolutional layers apply filters to the input data to extract local features, and the pooling layers downsample the data to reduce its dimensionality and make it more manageable.

These layers are typically followed by one or more fully connected layers that process the extracted features and produce the final output.

CNNs are capable of automatically learning features from the input data, making them highly effective in tasks such as image recognition and object detection.

They are also used in tasks like video analysis and natural language processing.
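The convolution and pooling steps can be sketched in a few lines of plain Python. This is an illustration under simplifying assumptions: a single-channel image, a single hand-written filter (a real CNN learns its filter values), no padding, and a stride of 1.

```python
def conv2d(image, kernel):
    """'Valid' 2D cross-correlation, the operation deep learning calls convolution."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows or columns that do not fill a window are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [[max(feature_map[i * size + di][j * size + dj]
                 for di in range(size) for dj in range(size))
             for j in range(cols)]
            for i in range(rows)]

# A 5x5 image that is dark (0) on the left and bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# A hand-written vertical-edge filter (an assumption for illustration).
kernel = [[-1, 1],
          [-1, 1]]

features = conv2d(image, kernel)   # 4x4 feature map, large where the edge is
pooled = max_pool(features)        # 2x2 summary after downsampling
print(features)
print(pooled)
```

The feature map responds only at the column where dark meets bright, and pooling keeps that response while shrinking the map from 4x4 to 2x2.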

- Recurrent Neural Networks (RNN)

Recurrent neural networks are a type of neural network architecture designed for sequential data, where the order of the data points matters.

These networks have connections that form directed cycles, allowing them to maintain a memory of previous inputs and process sequences of data.

RNNs are widely used in natural language processing, speech recognition, and other tasks involving sequential data.

They can process sequences of varying length, because the same weights are reused at every time step, and they have performed well on tasks like handwriting recognition and speech synthesis.
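The recurrence behind an RNN can be sketched with a single scalar unit. The update rule `h_t = tanh(w_x * x_t + w_h * h_prev + b)` is the usual vanilla RNN form; the specific weight values and function names below are assumptions chosen for illustration.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One time step of a scalar vanilla RNN."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Apply the same weights at every step and return the hidden state after each one."""
    h = 0.0
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

short = run_rnn([1.0, 0.0])
longer = run_rnn([1.0, 0.0, 0.0, 0.0])
reordered = run_rnn([0.0, 1.0])

print(short)      # the second state is non-zero: the unit still remembers the first input
print(longer)     # the same weights handle a longer sequence
print(reordered)  # same values in a different order give a different final state
```

The hidden state is the network's memory: it is fed back in at every step, which is why both the length and the order of the sequence affect the result.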

One of the main drawbacks of RNNs is that they can have difficulty learning long-range dependencies due to the vanishing gradient problem.

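This issue arises when gradients are backpropagated through many time steps (or, in deep networks, many layers). At each step the gradient is multiplied by another factor, and when those factors are small it shrinks toward zero, making it difficult to learn how early inputs affect later outputs.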

To address this problem, several RNN variants have been developed, such as the Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRU), which are designed to maintain long-term memory and mitigate the vanishing gradient problem.
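The vanishing gradient effect can be observed directly on a scalar RNN. The sketch below assumes the recurrence `h_t = tanh(w_x * x_t + w_h * h_prev)` with a recurrent weight of 0.5 and multiplies the per-step derivatives together, which is what backpropagation through time does; the function name and the input values are hypothetical.

```python
import math

def gradient_through_time(inputs, w_x=1.0, w_h=0.5):
    """Return d h_T / d h_0 for a scalar RNN h_t = tanh(w_x * x_t + w_h * h_prev)."""
    h, grad = 0.0, 1.0
    for x_t in inputs:
        h = math.tanh(w_x * x_t + w_h * h)
        # Chain rule for one step: d h_t / d h_prev = w_h * tanh'(...) = w_h * (1 - h_t^2)
        grad *= w_h * (1.0 - h * h)
    return grad

for steps in (1, 5, 20, 50):
    print(steps, gradient_through_time([0.5] * steps))
```

Every factor in the product is at most 0.5 here, so the gradient shrinks at least geometrically with the number of steps. Gated architectures are designed to give the gradient a path that is not squashed in this way at every step.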

- Long Short-Term Memory Networks (LSTM)

Long Short-Term Memory (LSTM) networks are a type of recurrent neural network architecture that was specifically designed to address the vanishing gradient problem in traditional RNNs.

They have an internal state that can store information over long sequences, making them well-suited for tasks involving long-range dependencies and sequence modeling.

LSTM networks are widely used in various natural language processing tasks, such as language modeling, machine translation, and text generation.

They have also been successfully applied to time series data analysis, speech recognition, and other tasks that require capturing long-term dependencies.

LSTM networks are composed of several specialized units called memory cells, which are responsible for storing and updating the internal state over time.

Each memory cell has four main components:

- Cell State: This represents the internal memory of the cell and is responsible for maintaining information over long sequences.

- Forget Gate: This controls how much of the existing cell state is kept. It decides which stored information should be discarded at each step.

- Input Gate: This controls the flow of new information into the cell state. It decides which information from the current input should be stored in the cell state.

- Output Gate: This controls the flow of information from the cell state to the output of the LSTM. It decides which information from the cell state should be used to produce the output.

These components work together to allow the LSTM to learn when to store information, when to update it, and when to retrieve it, enabling it to effectively capture long-range dependencies.
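A scalar version of one LSTM step shows how the gates interact. This sketch follows the standard formulation, which includes a forget gate alongside the input and output gates. The parameter layout (a dictionary of `(w_x, w_h, bias)` tuples) and the specific values are assumptions made for the example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, p):
    """One step of a scalar LSTM cell. `p` maps each gate name to (w_x, w_h, bias)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x_t + w_h * h_prev + b

    forget = sigmoid(pre("forget"))            # how much of the old cell state to keep
    write = sigmoid(pre("input"))              # input gate: how much new content to store
    candidate = math.tanh(pre("candidate"))    # proposed new content
    expose = sigmoid(pre("output"))            # output gate: how much of the state to emit

    c_t = forget * c_prev + write * candidate  # update the cell state
    h_t = expose * math.tanh(c_t)              # produce the output
    return h_t, c_t

# Hand-picked (untrained) parameters: remember a large input, ignore zeros.
PARAMS = {
    "forget": (0.0, 0.0, 10.0),     # almost always open, so the old state is kept
    "input": (10.0, 0.0, -5.0),     # opens only when the input is large
    "candidate": (5.0, 0.0, 0.0),
    "output": (0.0, 0.0, 10.0),
}

h, c = 0.0, 0.0
for x_t in [1.0] + [0.0] * 20:      # one signal followed by 20 empty steps
    h, c = lstm_step(x_t, h, c, PARAMS)
print(f"cell state after 20 empty steps: {c:.3f}")
```

Because the cell state is updated by addition and scaled only by the forget gate, the value stored at the first step is still almost intact 20 steps later.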

- Gated Recurrent Units (GRU)

Gated Recurrent Units (GRUs) are a type of recurrent neural network architecture that was designed as a simpler alternative to LSTM networks.

They are also specifically designed to address the vanishing gradient problem and are widely used in tasks involving sequential data, such as natural language processing and time series analysis.

GRUs are similar to LSTMs in that they also have an internal state that can store information over time, but they have a more streamlined architecture with two main components:

- Update Gate: This controls how much of the previous state is carried forward and how much is replaced. It decides how the new candidate state is blended with the existing state.

- Reset Gate: This controls how much of the previous state is used when computing the new candidate state. It lets the unit ignore parts of its history that are no longer relevant.

The main advantage of GRUs over LSTMs is their simpler architecture: they have fewer parameters, which typically makes them less computationally expensive and often faster to train.

However, LSTMs are still preferred in some cases, and which of the two performs better tends to depend on the task and the dataset.
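For comparison with the LSTM, here is a scalar sketch of one GRU step. Note that sign conventions for the update gate differ between references; this version assumes that an update gate near 1 means "replace the old state". The parameter layout and values are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def gru_step(x_t, h_prev, p):
    """One step of a scalar GRU. `p` maps each name to (w_x, w_h, bias)."""
    def pre(name, h):
        w_x, w_h, b = p[name]
        return w_x * x_t + w_h * h + b

    update = sigmoid(pre("update", h_prev))    # how much of the state to replace
    reset = sigmoid(pre("reset", h_prev))      # how much history the candidate may use
    candidate = math.tanh(pre("candidate", reset * h_prev))
    # Blend old state and candidate (assumed convention: update = 1 means "overwrite").
    return (1.0 - update) * h_prev + update * candidate

# Hand-picked parameters that force the two extreme behaviours.
KEEP = {"update": (0.0, 0.0, -20.0), "reset": (0.0, 0.0, 0.0), "candidate": (1.0, 1.0, 0.0)}
OVERWRITE = {"update": (0.0, 0.0, 20.0), "reset": (0.0, 0.0, -20.0), "candidate": (1.0, 1.0, 0.0)}

h_keep = gru_step(1.0, 0.7, KEEP)            # update gate closed: state stays near 0.7
h_new = gru_step(1.0, 0.7, OVERWRITE)        # update open, reset closed: history is ignored
print(h_keep, h_new)
```

There is no separate cell state and no output gate, which is where the GRU's smaller parameter count comes from.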

- Autoencoder Neural Networks

Autoencoder neural networks are a type of artificial neural network used for unsupervised learning.

They are designed to learn efficient representations of input data, typically for the purpose of dimensionality reduction or feature extraction.

Autoencoders consist of two main parts: an encoder and a decoder.

The encoder takes the input data and compresses it into a lower-dimensional representation, often called a latent space or code.

The decoder then takes this compressed representation and attempts to reconstruct the original input data.

The objective of an autoencoder is to minimize the difference between the input and the output, which encourages the network to learn a compact and informative representation of the data.

Autoencoders have various applications, including image denoising, anomaly detection, and unsupervised pretraining for other neural network architectures.
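The encoder and decoder idea can be illustrated with a tiny linear example. The sketch assumes two-dimensional data that lies close to the line y = 2x, and uses hand-picked weights (a trained linear autoencoder would have to learn something equivalent). The function names are hypothetical.

```python
def encode(point):
    """Encoder: compress a 2D point into a single number (the latent code)."""
    x, y = point
    return (x + 2.0 * y) / 5.0    # projection onto the direction (1, 2)

def decode(code):
    """Decoder: reconstruct a 2D point from the latent code."""
    return (code, 2.0 * code)

def reconstruction_error(point):
    """Mean squared difference between the input and its reconstruction."""
    rx, ry = decode(encode(point))
    return ((point[0] - rx) ** 2 + (point[1] - ry) ** 2) / 2.0

typical = (1.0, 2.0)    # lies on the line the weights were chosen for
unusual = (1.0, 0.0)    # does not fit the pattern

print(reconstruction_error(typical))
print(reconstruction_error(unusual))
```

Points that follow the pattern are reconstructed almost perfectly from half as many numbers, while points that do not follow it come back with a large error. That gap is the basis for using autoencoders in anomaly detection.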

- Generative Adversarial Networks (GAN)

Generative Adversarial Networks (GANs) are a type of neural network architecture that is used for generative modeling. GANs consist of two neural networks: a generator and a discriminator.

The generator takes random noise as input and tries to generate realistic data, such as images or sounds.

The discriminator, on the other hand, takes both real data and generated data as input and tries to distinguish between them.

The two networks are trained simultaneously, with the generator attempting to produce data that is indistinguishable from real data, and the discriminator trying to improve its ability to differentiate between real and generated data.

This competition between the two networks results in the generator learning to produce increasingly realistic data.

GANs have been used for a wide range of applications, including image generation, image-to-image translation, and video synthesis.
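The competition between the two networks is expressed through their loss functions. The sketch below leaves the networks themselves out and only computes the losses from the discriminator's scores, where a score is its estimated probability that a sample is real. It assumes binary cross-entropy for the discriminator and the commonly used non-saturating form for the generator; other formulations exist.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples score near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Low when the discriminator scores generated samples as real."""
    return -math.log(d_fake)

# Early in training (assumed scores): the discriminator easily spots the fakes.
print(discriminator_loss(d_real=0.9, d_fake=0.1), generator_loss(d_fake=0.1))

# Later (assumed scores): the fakes are good enough that the discriminator is guessing.
print(discriminator_loss(d_real=0.5, d_fake=0.5), generator_loss(d_fake=0.5))
```

The two losses pull in opposite directions: as the generator's loss falls, the discriminator's loss rises, and training alternates between updating one network and then the other.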

- Deep Belief Networks (DBN)

Deep Belief Networks (DBNs) are a type of artificial neural network that consists of multiple layers of latent variables, with connections between variables in adjacent layers.

DBNs are based on a hierarchical, generative model and are typically composed of a layer of visible variables and multiple layers of hidden variables.

These networks are often used for unsupervised learning tasks, such as dimensionality reduction and feature learning.

The hidden variables in DBNs capture correlations in the data, and the connections between variables allow the network to learn complex, hierarchical representations of the input.

DBNs are trained using a technique called “greedy layer-wise pretraining,” which involves training one layer at a time, each on the output of the layer below it, and then fine-tuning the entire network.

This pretraining process helps to initialize the network’s parameters in a way that facilitates learning in the deeper layers.

DBNs have been successfully applied to a variety of tasks, including image and speech recognition, natural language processing, and bioinformatics.
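The layered structure can be sketched as a stack in which each layer's hidden activations become the next layer's input. The code below shows only that upward pass, assuming sigmoid units of the kind used in restricted Boltzmann machines. It does not implement the layer-wise training itself, and the parameters are untrained, hand-written values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def hidden_probabilities(visible, weights, hidden_bias):
    """P(h_j = 1 | v) for one layer; weights[i][j] links visible unit i to hidden unit j."""
    return [sigmoid(b_j + sum(v_i * weights[i][j] for i, v_i in enumerate(visible)))
            for j, b_j in enumerate(hidden_bias)]

def upward_pass(visible, layers):
    """Feed each layer's hidden probabilities to the next layer as its input."""
    activations = visible
    for weights, hidden_bias in layers:
        activations = hidden_probabilities(activations, weights, hidden_bias)
    return activations

# Hypothetical parameters for a 4 -> 3 -> 2 stack.
layer1 = ([[0.5, -0.5, 0.0],
           [0.5, 0.5, 0.0],
           [-0.5, 0.5, 0.0],
           [-0.5, -0.5, 0.0]], [0.0, 0.0, 0.0])
layer2 = ([[1.0, -1.0],
           [1.0, -1.0],
           [0.0, 0.0]], [0.0, 0.0])

top = upward_pass([1, 1, 0, 0], [layer1, layer2])
print(top)
```

In greedy pretraining, `layer1` would be trained first on the raw data, then frozen while `layer2` is trained on the activations that `layer1` produces.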

- Radial Basis Function Networks (RBF)

Radial Basis Function Networks (RBFNs) are a type of artificial neural network that is particularly well-suited for function approximation and classification tasks.

RBFNs are typically composed of three layers: an input layer, a hidden layer with radial basis functions, and an output layer.

The hidden layer of RBFNs contains units that each store a prototype vector (also called a center). Each unit measures the distance between the input data and its prototype.

This distance is then passed through a radial basis function, most commonly a Gaussian, to produce the hidden layer activation, which is close to 1 when the input is near the prototype and close to 0 when it is far away. The output layer computes a weighted sum of these activations.

RBFNs are often trained using a combination of unsupervised and supervised learning methods.

Unsupervised learning is used to determine the prototype vectors in the hidden layer, while supervised learning is used to adjust the connections between the hidden and output layers.

RBFNs have been successfully applied to a wide range of tasks, including function approximation, regression, classification, and time series prediction.

Their ability to model complex, nonlinear relationships makes them well-suited for tasks that require high accuracy and robustness.
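A forward pass through an RBF network is short enough to write out in full. The sketch below assumes Gaussian basis functions with a shared width, and uses two hand-picked prototypes and output weights that happen to solve XOR. In practice the prototypes and weights would be fitted to data.

```python
import math

def gaussian_rbf(x, center, width):
    """Similarity between x and a prototype: 1.0 at the center, decaying with distance."""
    squared_distance = sum((a - c) ** 2 for a, c in zip(x, center))
    return math.exp(-squared_distance / (2.0 * width ** 2))

def rbf_network(x, centers, width, output_weights, bias=0.0):
    hidden = [gaussian_rbf(x, c, width) for c in centers]          # hidden layer
    return sum(w * h for w, h in zip(output_weights, hidden)) + bias   # linear output layer

# Hand-picked (not fitted) prototypes and weights for XOR.
CENTERS = [(0.0, 0.0), (1.0, 1.0)]
WIDTH = math.sqrt(0.5)

def xor_score(x):
    """Positive when exactly one input is 1, negative otherwise."""
    return rbf_network(x, CENTERS, WIDTH, output_weights=[-1.0, -1.0], bias=0.95)

for point in [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]:
    print(point, round(xor_score(point), 3))
```

XOR cannot be separated by a straight line in the original input space, but after the inputs are re-expressed as similarities to the two prototypes, a simple weighted sum is enough.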

- Echo State Networks

Echo State Networks (ESNs) are a type of recurrent neural network architecture that has a fixed, randomly initialized hidden layer with sparse connectivity and a trainable readout layer.

ESNs are typically used for tasks involving temporal data, such as time series prediction and signal processing.

The hidden layer of an ESN contains a large number of units, each with a nonlinear activation function.

The key property of ESNs is that the connections between the hidden layer units have a specific structure: the units are sparsely connected, and the connections are randomly initialized and kept fixed during training.

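This fixed structure allows ESNs to capture the temporal dynamics of the input data. Because the recurrent weights are never trained, no gradients have to be backpropagated through time, which sidesteps the vanishing gradient problem that is often encountered when training traditional RNNs.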

The readout layer of an ESN is trained using a linear regression method to produce the desired output.

During training, the weights of the readout layer are adjusted to minimize the error between the predicted and actual outputs.

The readout layer can be trained using a variety of methods, such as ordinary least squares, ridge regression, or LASSO.

ESNs have been successfully applied to a wide range of tasks, including speech recognition, music composition, and chaotic time series prediction.

Their simple architecture and training procedure make them easy to implement and train, and they are particularly well-suited for tasks involving high-dimensional input data and long time series.
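The following sketch puts the pieces together: a fixed, sparse, random reservoir and a readout trained with ridge regression. Several choices here are assumptions made to keep the example small and dependency-free: the reservoir size, the sparsity, the rescaling rule, the ridge strength, and the sine-wave task. The hand-written linear solver stands in for what a numerical library would normally provide.

```python
import math
import random

def make_reservoir(size, density=0.2, max_row_sum=0.9, seed=0):
    """Sparse random recurrent weights, rescaled once and never trained."""
    rng = random.Random(seed)
    w = [[rng.uniform(-1.0, 1.0) if rng.random() < density else 0.0
          for _ in range(size)] for _ in range(size)]
    # Assumption: keeping the largest absolute row sum below 1 (which also bounds
    # the spectral radius) is used here as a simple way to keep the dynamics stable.
    largest = max(sum(abs(v) for v in row) for row in w) or 1.0
    w = [[v * max_row_sum / largest for v in row] for row in w]
    w_in = [rng.uniform(-0.5, 0.5) for _ in range(size)]
    return w, w_in

def run_reservoir(inputs, w, w_in):
    """Collect the reservoir state after each input; no weights change here."""
    state = [0.0] * len(w)
    states = []
    for u in inputs:
        state = [math.tanh(w_in[i] * u + sum(w_ij * s for w_ij, s in zip(w[i], state)))
                 for i in range(len(w))]
        states.append(state + [1.0])    # the constant 1.0 acts as a bias feature
    return states

def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def train_readout(states, targets, ridge=1e-3):
    """Ridge regression: solve (S^T S + ridge * I) w = S^T y for the readout weights."""
    n = len(states[0])
    gram = [[sum(s[i] * s[j] for s in states) + (ridge if i == j else 0.0)
             for j in range(n)] for i in range(n)]
    rhs = [sum(s[i] * y for s, y in zip(states, targets)) for i in range(n)]
    return solve(gram, rhs)

# Assumed toy task: predict the next value of a sine wave from the current one.
signal = [math.sin(0.2 * t) for t in range(301)]
w, w_in = make_reservoir(size=20)
states = run_reservoir(signal[:-1], w, w_in)
readout = train_readout(states, signal[1:])

predictions = [sum(r * s for r, s in zip(readout, state)) for state in states]
mse = sum((p - y) ** 2 for p, y in zip(predictions, signal[1:])) / len(predictions)
print(f"training mean squared error: {mse:.6f}")
```

The only learned quantities are the entries of `readout`, and they are found by solving one linear system instead of running iterative gradient-based training.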

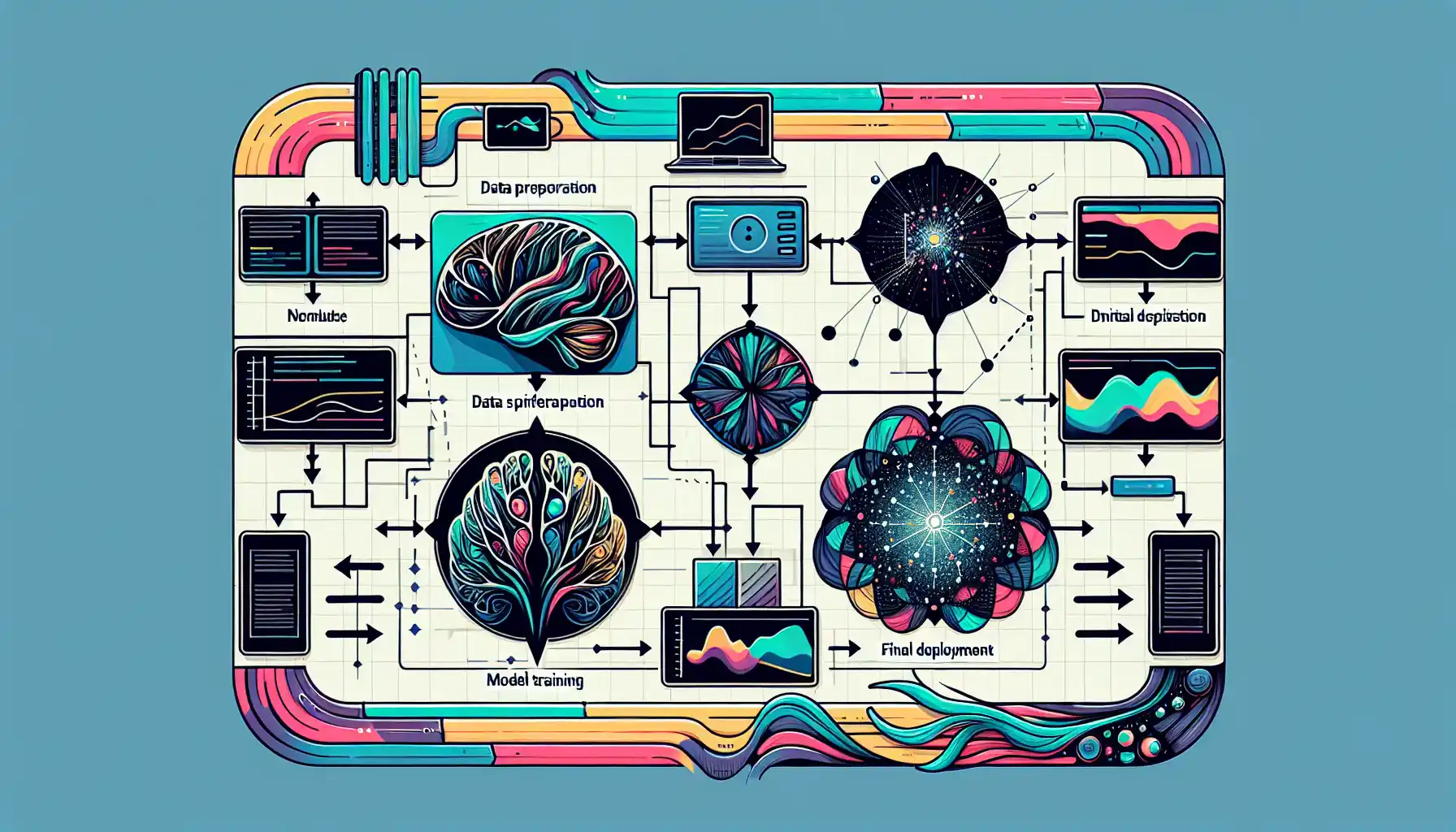

How to Use Neural Networks in Practice

To use neural networks in practice, you will need to follow the steps given below:

- Select a Network Type: Choose the appropriate type of neural network based on your problem, whether it’s a simple feedforward network for basic classification or a more complex architecture like a CNN or RNN for tasks like image recognition or natural language processing.

- Gather and Preprocess Data: Gather a large dataset that is relevant to your problem and preprocess it. This may include tasks like normalization, feature scaling, and splitting the data into training, validation, and test sets.

- Choose an Activation Function: Choose an appropriate activation function for your network, such as ReLU for hidden layers and sigmoid or softmax for output layers depending on the type of problem (binary classification or multi-class classification).

- Select Hyperparameters: Choose the hyperparameters of your network, such as learning rate, number of hidden layers, number of neurons in each layer, and batch size. These hyperparameters can greatly influence the performance of your network.

- Train the Network: Train your network on the training data using an optimization algorithm like gradient descent. This involves feeding the input data forward through the network, computing the error or loss, and then using backpropagation to adjust the weights in the network.

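- Evaluate and Tune: Once the network is trained, evaluate its performance on held-out validation data and tune the hyperparameters if necessary. This may involve adjusting the learning rate, trying different optimization algorithms, or changing the network architecture. Keep the test data for a final evaluation, since tuning against it gives an overly optimistic estimate of performance.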

- Deploy the Model: Once you are satisfied with the performance of your network, you can deploy it to make predictions on new, unseen data.

- Continuously Monitor and Update: Neural networks can be sensitive to changes in the data, so it’s important to continuously monitor their performance and update them as needed.

By following these steps, you can effectively use neural networks in practice and harness their power for a wide range of applications.
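A few of the building blocks named in these steps can be sketched without any library: splitting the data, scaling features, and the ReLU, sigmoid, and softmax activation functions. The split here also sets aside a validation portion for tuning. The 70/15/15 proportions, the function names, and the toy data are arbitrary assumptions.

```python
import math
import random

def split(rows, train_pct=70, validation_pct=15, seed=0):
    """Shuffle a copy of the data, then split it into training, validation, and test sets."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_train = len(rows) * train_pct // 100
    n_val = len(rows) * validation_pct // 100
    return rows[:n_train], rows[n_train:n_train + n_val], rows[n_train + n_val:]

def fit_scaler(values):
    """Mean and standard deviation, computed from the training data only."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values)) or 1.0
    return mean, std

def standardize(values, mean, std):
    return [(v - mean) / std for v in values]

def relu(z):
    """Common choice for hidden layers."""
    return max(0.0, z)

def sigmoid(z):
    """Common choice for a binary classification output."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(scores):
    """Common choice for a multi-class output: turns scores into probabilities."""
    largest = max(scores)
    exps = [math.exp(s - largest) for s in scores]   # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]

data = [float(v) for v in range(100)]                # toy one-feature dataset
train_set, validation_set, test_set = split(data)

mean, std = fit_scaler(train_set)
train_scaled = standardize(train_set, mean, std)
validation_scaled = standardize(validation_set, mean, std)   # reuse the training statistics

probabilities = softmax([1.0, 2.0, 3.0])
print(len(train_set), len(validation_set), len(test_set))
print(probabilities)
```

Fitting the scaler on the training set alone, and reusing its statistics everywhere else, keeps information from the validation and test data from leaking into training.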

Final Thoughts

Neural networks are powerful tools that have revolutionized the field of artificial intelligence and machine learning. Understanding the different types of neural networks allows you to choose the most appropriate architecture for your specific problem.

As you delve deeper into the world of neural networks, you’ll find that different architectures excel in different tasks. Whether it’s the image recognition prowess of CNNs, the sequential data processing capabilities of RNNs, or the unsupervised learning capabilities of autoencoders, each type of neural network has its own strengths and weaknesses.

We hope this article has helped you gain a better understanding of the different types of neural networks and how they can be applied in real-world scenarios.

Frequently Asked Questions

This section answers some frequently asked questions about neural networks.

What are the types of neural networks?

The most common types of neural networks include:

- Feedforward neural networks

- Convolutional neural networks

- Recurrent neural networks

- Long Short-Term Memory networks

- Gated Recurrent Units

- Autoencoder neural networks

- Generative Adversarial Networks

- Deep Belief Networks

- Radial Basis Function Networks

What are the different types of feedforward networks?

Feedforward networks include multi-layer perceptrons (MLPs) and single-layer perceptrons. MLPs consist of an input layer, one or more hidden layers, and an output layer.

What is the most common type of neural network?

The most common type of neural network is the multi-layer perceptron (MLP), a type of feedforward network. MLPs are used in various applications, including classification, regression, and pattern recognition.

What are the three main types of neural networks?

The three main types of neural networks are:

- Feedforward neural networks

- Recurrent neural networks

- Convolutional neural networks

What is the simplest neural network?

The simplest neural network is the single-layer perceptron, which consists of one input layer connected directly to one output layer, with no hidden layers in between.

How many types of neural networks are there?

There are various types of neural networks, including feedforward neural networks, recurrent neural networks, convolutional neural networks, and more.

Each type is designed to solve specific types of problems and has its own unique structure and learning algorithms.