Introduction to Time Series Analysis

Time series analysis is a statistical method that deals with data points collected at constant intervals of time. It is used to predict future events based on past data. It is a powerful tool in the data scientist’s toolbox, as it enables the discovery of trends and patterns within data sets.

To work through a detailed project on this topic, see the R workout below:

R Workout – Time Series Analysis of Stock Market Data

Time series analysis involves multiple steps:

- Visualization: Understanding the data pattern and data quality. The visual presentation of the data provides valuable insights and helps in selecting appropriate forecasting models.

- Modeling: After data visualization, the next step is to build a model using the data. Different models can be used for time series analysis, including ARIMA (AutoRegressive Integrated Moving Average), Exponential Smoothing, and GARCH (Generalized Autoregressive Conditional Heteroskedasticity). The selection of a model depends on the characteristics of the data.

- Evaluation and Validation: The performance of the model is evaluated using statistical measures such as Mean Absolute Error (MAE), Mean Squared Error (MSE), and Root Mean Squared Error (RMSE). The accuracy of the model is determined by comparing the forecasted values with the actual values. If the model is accurate, it can be used for making predictions.

- Prediction: Once the model is built and validated, it can be used to make predictions about future data points. This is the most important part of time series analysis, as it helps in making informed decisions based on historical data.
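The error measures named in the evaluation step are straightforward to compute. Here is a minimal Python sketch; the function name `forecast_errors` and the sample numbers are illustrative only:

```python
import math


def forecast_errors(actual, predicted):
    """Return (MAE, MSE, RMSE) for two equal-length, non-empty sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("actual and predicted must be non-empty and the same length")
    errors = [a - p for a, p in zip(actual, predicted)]
    mae = sum(abs(e) for e in errors) / len(errors)  # average absolute miss
    mse = sum(e * e for e in errors) / len(errors)   # average squared miss
    rmse = math.sqrt(mse)                            # back in the data's units
    return mae, mse, rmse


actual = [100.0, 102.0, 101.0, 105.0]
predicted = [101.0, 101.0, 103.0, 104.0]
mae, mse, rmse = forecast_errors(actual, predicted)
print(f"MAE={mae:.3f} MSE={mse:.3f} RMSE={rmse:.3f}")
```

MAE and RMSE are in the same units as the data; because RMSE squares each error before averaging, it penalizes a few large misses more heavily than MAE does.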

This article introduces the core ideas of time series analysis and then applies them, step by step, to forecasting stock prices with ARIMA models.

Let’s dive in!

Understanding Time Series Data

In this section, we’ll talk about time series data, its components, and why it’s important to understand them.

What is Time Series Data?

Time series data is a sequence of observations collected at regular intervals over time. It appears in a wide range of applications, including economics, finance, and environmental science.

In time series data, observations are typically correlated with earlier ones (autocorrelation). This distinguishes it from cross-sectional data, where observations are usually treated as independent.

Components of Time Series Data

There are four components of time series data: trend, seasonality, cycles, and irregularities. Understanding these components is essential for accurate analysis and prediction.

1. Trend

A trend is a long-term increase or decrease in data over time. It represents the underlying pattern of the data. Trends can be linear, meaning they increase or decrease at a constant rate, or they can be nonlinear, meaning the rate of change varies over time.

Trends help in understanding the overall direction of the data and are crucial for making long-term predictions.

2. Seasonality

Seasonality refers to patterns in the data that occur at regular intervals, typically within a year or less. These patterns can be caused by various factors, such as weather, holidays, or cultural events.

Seasonality is important to identify, as it can cause fluctuations in the data that are not related to the underlying trend. If not accounted for, these fluctuations can lead to inaccurate predictions.

3. Cycles

Cycles are rises and falls that unfold over longer periods, typically several years or more. Unlike seasonality, they do not have a fixed period and are not tied to specific calendar dates.

Economic cycles, for example, can lead to periods of boom and bust in financial data. Identifying cycles is important for understanding the full range of variability in the data and can help in making predictions about future events.

4. Irregularities

Irregularities, also known as noise or residuals, are random fluctuations in the data that are not explained by the other components. They can be caused by a variety of factors, such as random events, measurement error, or unexpected changes in the system being studied.

While irregularities can make it more challenging to analyze and predict the data, they also provide valuable information. By understanding the nature of these fluctuations, one can better assess the reliability of predictions and the potential for future events.
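To make the components concrete, the sketch below builds an artificial series by adding a linear trend, a repeating seasonal wave, and random noise. It is purely illustrative: the function name and parameter values are assumptions, and a cycle component is left out because cycles have no fixed period to simulate simply.

```python
import math
import random


def synthetic_series(n, period=12, slope=0.5, amplitude=10.0, noise_sd=2.0, seed=0):
    """Build an additive series: linear trend + sine-wave seasonality + Gaussian noise."""
    rng = random.Random(seed)  # seeded so the noise is reproducible
    trend = [slope * t for t in range(n)]
    seasonal = [amplitude * math.sin(2 * math.pi * t / period) for t in range(n)]
    noise = [rng.gauss(0.0, noise_sd) for _ in range(n)]
    series = [tr + s + e for tr, s, e in zip(trend, seasonal, noise)]
    return series, trend, seasonal, noise


series, trend, seasonal, noise = synthetic_series(48)
```

Plotting `series` next to `trend` and `seasonal` shows how the noise blurs an otherwise regular pattern.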

Why Are Time Series Components Important?

Identifying and understanding the components of time series data is crucial for several reasons:

- Accurate Analysis: Separating the data into its constituent parts allows for a more accurate analysis. By recognizing the underlying trend, the presence of seasonality, and any other relevant patterns, one can make better-informed decisions.

- Better Predictions: Once the components are identified, it becomes easier to predict future trends. Understanding how the data has behaved in the past allows for more accurate forecasting.

- Intervention Analysis: By identifying the components, one can better understand the impact of external events on the data. For example, if there is a sudden spike in sales, one can determine whether it is due to seasonality or some other factor.

- Anomaly Detection: Recognizing irregularities is essential for detecting anomalies. This can be crucial in various fields, such as finance, where it is essential to identify unusual behavior in the data that may signal potential problems.

In conclusion, understanding the components of time series data is vital for accurate analysis and informed decision-making. It enables better predictions, facilitates intervention analysis, and helps in anomaly detection.

How to Visualize Time Series Data

In this section, we’ll explore the best methods for visualizing time series data. We’ll discuss the advantages of time series plots and box plots, how to use them, and why they’re useful for time series analysis.

What is Time Series Data Visualization?

Time series data visualization is the process of representing the data in a graphical format to help identify patterns, trends, and anomalies. This helps in better understanding the underlying structure of the data.

Why Use Time Series Data Visualization?

Time series data visualization is important because it helps you:

- Identify patterns and trends: Visualizing time series data allows you to see patterns and trends that are not immediately apparent from the raw data.

- Spot anomalies and outliers: Visualizing data helps you identify anomalies and outliers in the data that may require further investigation.

- Make informed decisions: Visualizing time series data helps you make informed decisions based on the data, rather than relying solely on intuition.

Common Visualization Methods

There are several ways to visualize time series data, including line plots, scatter plots, histograms, and box plots. Each type of visualization has its own strengths and weaknesses, and the choice of visualization method will depend on the specific characteristics of the data you are working with.

In this section, we’ll focus on line plots and box plots, which are commonly used for time series data.

1. Line Plots

A line plot is a type of chart that displays information as a series of data points connected by straight line segments. It is particularly useful for visualizing how a single variable changes over time.

To create a line plot, follow these steps:

- Choose an appropriate time interval for your data (e.g., daily, weekly, monthly).

- Plot time on the x-axis and the variable’s values on the y-axis.

- Connect the data points with straight lines.

- Label the x-axis with the time interval you chose in the first step.

- Label the y-axis with the variable you are plotting.

2. Box Plots

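A box plot, also known as a box-and-whisker plot, is a way to visualize the distribution of a dataset and display potential outliers. For time series, drawing one box per period (for example, one per month) is a common way to reveal seasonal variation.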

To create a box plot, follow these steps:

- Calculate the 25th percentile (Q1), median, and 75th percentile (Q3) of your dataset.

- Determine the lower fence (Q1 - 1.5 * IQR) and upper fence (Q3 + 1.5 * IQR), where IQR is the interquartile range (Q3 - Q1).

- Plot a box from Q1 to Q3, with a line at the median.

- Draw whiskers from the box to the most extreme values that lie within the fences.

- Plot any values outside the lower or upper fence as individual points (outliers).

- Label the x-axis with the variable you are plotting.

- Label the y-axis with the unit of measurement for the variable.
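The box-plot quantities above can be computed with Python’s standard library. In this sketch, `statistics.quantiles(..., method="inclusive")` is one of several quartile conventions, so other tools may report slightly different Q1 and Q3 values; the function name and price list are made up for illustration:

```python
import statistics


def box_plot_stats(values):
    """Return quartiles, Tukey fences, whisker ends, and outliers for a box plot."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    lower_fence = q1 - 1.5 * iqr
    upper_fence = q3 + 1.5 * iqr
    inside = [v for v in values if lower_fence <= v <= upper_fence]
    outliers = [v for v in values if v < lower_fence or v > upper_fence]
    return {
        "q1": q1, "median": median, "q3": q3, "iqr": iqr,
        "lower_fence": lower_fence, "upper_fence": upper_fence,
        # whiskers stop at the most extreme points still inside the fences
        "whisker_low": min(inside), "whisker_high": max(inside),
        "outliers": outliers,
    }


prices = [101, 103, 102, 105, 104, 106, 103, 150]
stats = box_plot_stats(prices)
```

Here the single value 150 lies above the upper fence of 109.0 and would be drawn as an individual outlier point.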

What is Time Series Forecasting?

Time series forecasting is the process of predicting future data points based on historical data. It is used in various fields, including finance, weather forecasting, and sales forecasting.

In this section, we’ll discuss the main goals of time series forecasting and its practical applications.

How Does Time Series Forecasting Work?

Time series forecasting works by analyzing past data to identify patterns and trends that can be used to predict future values.

Main Goals of Time Series Forecasting

The main goals of time series forecasting are:

- Predict future values: The primary goal of time series forecasting is to make accurate predictions of future values of the variable of interest. This can be used for planning, decision-making, and risk assessment.

- Understand underlying patterns: Time series forecasting helps in understanding the underlying patterns and trends in the data. This can provide valuable insights into the factors that influence the variable of interest.

- Assess uncertainty: Time series forecasting can quantify the uncertainty associated with the predictions. This helps in assessing the risk and making informed decisions.

Practical Applications of Time Series Forecasting

Time series forecasting has numerous practical applications in various fields, including:

- Finance: Time series forecasting is used in stock price prediction, foreign exchange rate prediction, and credit risk assessment.

- Economics: It is used to forecast economic indicators such as GDP, inflation, and unemployment rates.

- Weather forecasting: Time series forecasting is essential for predicting temperature, precipitation, and other weather variables.

- Sales forecasting: It is used to predict future sales, customer demand, and inventory requirements.

- Healthcare: Time series forecasting is used in disease outbreak prediction, patient admission forecasting, and medical resource allocation.

- Engineering: It is used in predicting equipment failure, energy consumption, and maintenance scheduling.

In conclusion, time series forecasting is a powerful tool for predicting future values of a variable based on historical data. Its main goals are to make accurate predictions, understand underlying patterns, and assess uncertainty. It has numerous practical applications in various fields and is an essential part of data science and machine learning.

How to Conduct Time Series Analysis

In this section, we’ll explore how to conduct time series analysis. We’ll discuss the main steps involved in time series analysis and some popular methods for forecasting and modeling time series data.

Steps in Time Series Analysis

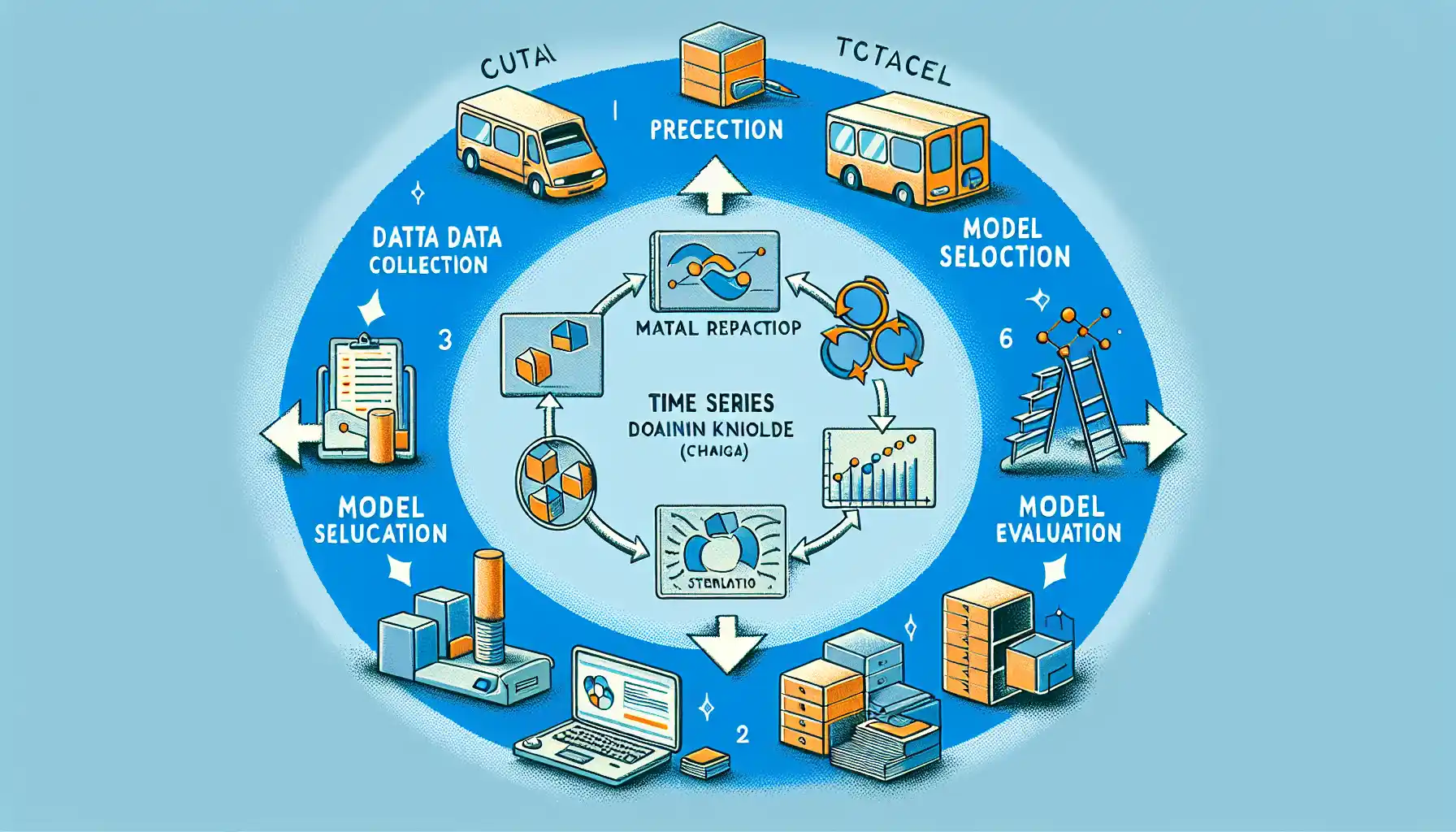

The main steps in time series analysis are:

- Data collection: Gather historical data on the variable of interest, typically collected at regular intervals (e.g., daily, weekly, monthly).

- Data preprocessing: Clean the data, handle missing values, and transform it if necessary (e.g., log transformation, differencing).

- Visualization: Plot the data to identify patterns, trends, and seasonality.

- Model selection: Choose an appropriate forecasting model based on the characteristics of the data.

- Model training: Fit the model to the historical data to learn the underlying patterns.

- Model evaluation: Assess the accuracy of the model by comparing the predictions with the actual values.

- Forecasting: Use the trained model to make predictions about future values of the variable.

- Model refinement: Fine-tune the model if necessary to improve its accuracy.

Common Techniques in Time Series Analysis

Some common techniques in time series analysis include:

- Autoregressive Integrated Moving Average (ARIMA): A popular model for time series forecasting that takes into account the autocorrelation in the data.

- Exponential Smoothing (ES): A simple and widely used method for time series forecasting that assigns exponentially decreasing weights to past observations.

- Seasonal-Trend decomposition using LOESS (STL): A method for decomposing a time series into its trend, seasonal, and residual components.

- Machine Learning Models: Techniques such as regression, decision trees, and neural networks can be used for time series analysis.
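Exponential smoothing is easy to sketch directly. The version below is simple (level-only) exponential smoothing; initializing the level with the first observation is an assumption, and libraries offer other initialization schemes. Unrolling the update shows that an observation k steps in the past receives weight alpha * (1 - alpha)^k, which is the exponential decay described above.

```python
def simple_exponential_smoothing(values, alpha):
    """Return the smoothed level after each observation, for 0 < alpha <= 1."""
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = values[0]  # initialise the level with the first observation
    levels = [level]
    for y in values[1:]:
        # new level = alpha * latest observation + (1 - alpha) * previous level
        level = alpha * y + (1 - alpha) * level
        levels.append(level)
    return levels


prices = [10.0, 12.0, 11.0, 13.0]
levels = simple_exponential_smoothing(prices, alpha=0.5)
forecast = levels[-1]  # simple exponential smoothing forecasts every future step with the last level
```

A larger alpha reacts faster to recent changes; a smaller alpha smooths more aggressively.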

How to Forecast and Model Time Series Data

Forecasting and modeling time series data involves selecting an appropriate model and using it to make predictions about future values of the variable.

The key steps are:

- Selecting a model: Choose a suitable forecasting model based on the characteristics of the data.

- Training the model: Fit the model to the historical data to learn the underlying patterns.

- Model evaluation: Assess the accuracy of the model by comparing its predictions with actual values that were held out from training.

- Model refinement: Fine-tune the model if necessary to improve its accuracy.

- Forecasting: Use the final model to forecast future values of the variable.

Examples of Time Series Analysis in Action

Let’s take a look at a couple of examples of time series analysis in action.

1. Stock Price Forecasting

In stock price forecasting, time series analysis is used to predict future stock prices based on historical data.

2. Sales Forecasting

In sales forecasting, time series analysis is used to predict future sales based on historical data.

Additional Thoughts

Time series analysis is a valuable tool in the data scientist’s toolkit, as it allows us to gain insights and make predictions from data collected over time. Whether you’re working with financial data, weather patterns, or sales figures, time series analysis can help you understand the past and anticipate the future.

From understanding data components to making informed decisions, time series analysis equips you with the tools to extract valuable insights from your data.

Time Series Analysis For Stock Prices

Time series analysis involves understanding, modeling, and forecasting sequential data points collected over time. In the context of stock prices, historical stock data fluctuates over time, making it a prime candidate for time series analysis.

Key Concepts

1. Time Series Components

- Trend: The long-term progression in the data (e.g., an overall increase in stock prices over time).

- Seasonality: The repeating short-term cycle in the data (e.g., monthly or quarterly patterns).

- Noise: Random variations in the data that are not explained by the model.

2. Stationarity

A time series is stationary if its statistical properties do not depend on the time at which the series is observed. In other words, the mean, variance, and autocorrelation structure do not change over time. Many time series models require stationarity; ARIMA handles a non-stationary series by differencing it (the "I" in ARIMA) until it is stationary and then modeling the result.

Practical Setup Instructions

1. Data Collection

Collect historical stock price data. This typically includes columns such as Date, Open, High, Low, Close, and Volume.

Date,Open,High,Low,Close,Volume
2023-01-01,100,110,90,105,1000
2023-01-02,106,115,95,110,1200
...
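If the data arrives as CSV text like the sample above, the standard library is enough to parse it into typed, date-ordered rows. This is a sketch that assumes exactly these column names (the `load_prices` helper is illustrative); with pandas, `pd.read_csv(path, parse_dates=["Date"], index_col="Date")` is a common alternative.

```python
import csv
import io
from datetime import date

SAMPLE = """Date,Open,High,Low,Close,Volume
2023-01-01,100,110,90,105,1000
2023-01-02,106,115,95,110,1200
"""


def load_prices(text):
    """Parse OHLCV rows into dicts with typed values, sorted by date."""
    rows = []
    for row in csv.DictReader(io.StringIO(text)):
        rows.append({
            "Date": date.fromisoformat(row["Date"]),
            "Open": float(row["Open"]),
            "High": float(row["High"]),
            "Low": float(row["Low"]),
            "Close": float(row["Close"]),
            "Volume": int(row["Volume"]),
        })
    rows.sort(key=lambda r: r["Date"])  # time series analysis needs chronological order
    return rows


rows = load_prices(SAMPLE)
closes = [r["Close"] for r in rows]
```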

2. Exploratory Data Analysis (EDA)

Perform EDA to understand the dataset better. The snippets in this section assume time_series_data is a pandas DataFrame indexed by date, with the columns shown above.

Plotting the Time Series: Visualize the data to identify trends and seasonality.

time_series_data['Close'].plot(title="Stock Prices Over Time", xlabel="Date", ylabel="Price")

Summary Statistics: Calculate mean, variance, and other descriptive statistics.

mean = time_series_data['Close'].mean()
variance = time_series_data['Close'].var()
print("Mean:", mean, "Variance:", variance)
print(time_series_data['Close'].describe())  # count, mean, std, min, quartiles, max

3. Stationarity Testing

Use statistical tests, like the Augmented Dickey-Fuller (ADF) test, to check for stationarity.

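from statsmodels.tsa.stattools import adfuller

# adfuller returns (statistic, p-value, lags used, observations used, critical values, best IC)
result = adfuller(time_series_data['Close'])
print("ADF Statistic:", result[0])
print("p-value:", result[1])
if result[1] < 0.05:
    print("Unit root rejected: the data appears stationary")
else:
    print("Unit root not rejected: the data is likely non-stationary, so differencing is needed")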

4. Differencing

If the data is not stationary, apply differencing to make it stationary.

# First-order differencing: y_t - y_(t-1); the first value has no predecessor and is dropped
differenced_data = time_series_data['Close'].diff().dropna()

5. ARIMA Model

Model Identification

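Identify the parameters: d is the number of differences needed to reach stationarity (from the previous step), while the autocorrelation function (ACF) and partial autocorrelation function (PACF) of the differenced series suggest q and p.

from statsmodels.graphics.tsaplots import plot_acf, plot_pacf

# Identifying p and q using ACF and PACF plots
plot_acf(differenced_data)
plot_pacf(differenced_data)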
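What `plot_acf` draws is the sample autocorrelation of the series at each lag. A standard-library sketch of that calculation follows (the `acf` helper here is hand-written, not the statsmodels function of the same name). As a rule of thumb, an ACF that cuts off after lag q suggests an MA(q) term, and a PACF that cuts off after lag p suggests an AR(p) term; for white noise, roughly 95% of the sample autocorrelations should fall within about ±1.96/√n.

```python
def acf(values, max_lag):
    """Sample autocorrelation r_k for k = 1..max_lag."""
    n = len(values)
    mean = sum(values) / n
    dev = [v - mean for v in values]
    denom = sum(d * d for d in dev)  # lag-0 autocovariance (times n)
    return [
        # covariance between the series and itself shifted by k, scaled by the variance
        sum(dev[t] * dev[t + k] for t in range(n - k)) / denom
        for k in range(1, max_lag + 1)
    ]


r = acf([1.0, -1.0, 1.0, -1.0], max_lag=2)
```

The alternating series gives a strongly negative lag-1 value and a positive lag-2 value, the signature of a sign-flipping pattern.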

Model Fitting

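Fit the ARIMA model using the identified parameters. Pass the original (undifferenced) series and let d perform the differencing; fitting differenced_data with d > 0 would difference the series twice.

from statsmodels.tsa.arima.model import ARIMA

arima_model = ARIMA(time_series_data['Close'], order=(p, d, q))
fitted_model = arima_model.fit()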

Model Diagnostics

Evaluate the model to ensure it appropriately fits the data.

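from statsmodels.stats.diagnostic import acorr_ljungbox

# Residual analysis: residuals should look like white noise
fitted_model.resid.plot(title="Residuals")
print(acorr_ljungbox(fitted_model.resid, lags=[10]))  # large p-values suggest no leftover autocorrelation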

6. Forecasting

Use the fitted ARIMA model to make predictions.

forecast_steps = 30  # Number of periods (trading days) to forecast
forecast = fitted_model.forecast(steps=forecast_steps)

# Plot the forecast
forecast.plot(title="Stock Price Forecast", xlabel="Date", ylabel="Price")

Conclusion

By following these steps, you can collect, analyze, and forecast stock prices using historical data and ARIMA models. Time series analysis is a powerful tool for understanding and predicting future trends in stock markets.

Data Import and Preprocessing

Data Import

# Algorithmic steps:

1. Initialize connection to data source (File, API, Database).

2. Load historical stock data (open, close, high, low, volume).

3. Ensure data is in a uniform format (e.g., DataFrame with DateTime index).

# Example in Pseudocode:

initializeConnection(dataSource)

data = loadData(dataSource)

ensureUniformFormat(data)

Preprocessing

Handle Missing Values

1. Identify missing values in the dataset.

2. Decide on a strategy (drop, fill forward, fill backward, interpolate).

3. Apply the chosen strategy.

# Example Pseudocode:

if data.has_missing_values():
    data = data.interpolate()  # Example strategy: linear interpolation
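Linear interpolation fills each gap along a straight line between its nearest known neighbors. A standard-library sketch, assuming missing values are stored as `None` and observations are evenly spaced (the helper name is illustrative). Note that interpolation uses the next known value, so for forecasting work a forward fill, which only looks backward, is sometimes preferred.

```python
def interpolate_linear(values):
    """Fill None gaps by straight-line interpolation between the nearest known neighbours.

    Leading or trailing gaps have only one neighbour and are left as None.
    """
    filled = list(values)
    known = [i for i, v in enumerate(filled) if v is not None]
    for left, right in zip(known, known[1:]):
        # constant increment per step between two known points
        step = (filled[right] - filled[left]) / (right - left)
        for i in range(left + 1, right):
            filled[i] = filled[left] + step * (i - left)
    return filled


closes = [100.0, None, None, 106.0, 107.0, None]
filled = interpolate_linear(closes)
```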

Convert Data Types

1. Ensure date column is in DateTime format.

2. Ensure all numerical columns (open, close, high, low, volume) are in float format.

# Example Pseudocode:

data.datetime_column = convertToDateTime(data.datetime_column)

data[numerical_columns] = convertToFloat(data[numerical_columns])

Feature Engineering

Create Additional Time-Based Features

1. Extract year, month, day, day_of_week from DateTime index.

2. Create lag features if necessary (e.g., previous day's close).

# Example Pseudocode:

data['year'] = getYear(data.datetime_column)

data['month'] = getMonth(data.datetime_column)

data['day'] = getDay(data.datetime_column)

data['day_of_week'] = getDayOfWeek(data.datetime_column)

# Lag features

data['previous_close'] = getLag(data['close'], lag=1)
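The calendar and lag features can be sketched with the standard `datetime` module. This assumes each row is a dict with a `Date` (a `datetime.date`) and a `Close` value, and the `add_time_features` helper is illustrative; `day_of_week` follows Python’s `date.weekday()` convention, where Monday is 0.

```python
from datetime import date


def add_time_features(rows):
    """Add calendar features and a one-step lag of Close to each row, in date order."""
    out = []
    previous_close = None
    for row in sorted(rows, key=lambda r: r["Date"]):
        d = row["Date"]
        out.append({
            **row,
            "year": d.year,
            "month": d.month,
            "day": d.day,
            "day_of_week": d.weekday(),        # Monday = 0 ... Sunday = 6
            "previous_close": previous_close,  # None for the first row
        })
        previous_close = row["Close"]
    return out


rows = [
    {"Date": date(2023, 1, 3), "Close": 105.0},
    {"Date": date(2023, 1, 4), "Close": 110.0},
]
features = add_time_features(rows)
```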

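Split Data into Training and Testing Sets

1. Define split ratio (70-30, 80-20, etc.).

2. Split the data chronologically: the earliest observations form the training set and the most recent form the test set. Do not shuffle, because the model must be evaluated on data that comes after the data it was trained on.

# Example Pseudocode:

split_ratio = 0.8
train_data, test_data = splitData(data, split_ratio)  # chronological split, no shuffling

Normalize/Scale the Data

1. Choose a scaler (e.g., MinMaxScaler, StandardScaler).

2. Fit the scaler on the training set only, then apply it to both sets, so that no information from the test period leaks into training.

# Example Pseudocode:

scaler = initializeScaler('MinMaxScaler')
scaler = fitScaler(scaler, train_data[numerical_columns])  # fit on training data only
train_data[numerical_columns] = applyScaler(scaler, train_data[numerical_columns])
test_data[numerical_columns] = applyScaler(scaler, test_data[numerical_columns])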
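A plain-Python sketch of a chronological split followed by min-max scaling fitted on the training data only (the helper names and prices are illustrative):

```python
def chronological_split(values, split_ratio=0.8):
    """Split without shuffling: the earliest values train, the latest values test."""
    cut = int(len(values) * split_ratio)
    return values[:cut], values[cut:]


def fit_min_max(train):
    """Learn the scaling range from the training data only."""
    return min(train), max(train)


def apply_min_max(values, lo, hi):
    """Scale with the training min/max; test values may fall outside [0, 1]."""
    span = hi - lo
    return [(v - lo) / span if span else 0.0 for v in values]


closes = [100.0, 102.0, 101.0, 104.0, 103.0, 108.0, 110.0, 107.0, 111.0, 115.0]
train, test = chronological_split(closes, 0.8)
lo, hi = fit_min_max(train)
train_scaled = apply_min_max(train, lo, hi)
test_scaled = apply_min_max(test, lo, hi)
```

The test prices 111 and 115 exceed the training maximum, so they scale above 1. That is expected, and it is what keeps test information out of the scaler.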

Pseudocode for ARIMA Preprocessing

# Full Pseudocode Implementation:
initializeConnection(dataSource)
data = loadData(dataSource)
ensureUniformFormat(data)

if data.has_missing_values():
    data = data.interpolate()

data.datetime_column = convertToDateTime(data.datetime_column)
data[numerical_columns] = convertToFloat(data[numerical_columns])

data['year'] = getYear(data.datetime_column)
data['month'] = getMonth(data.datetime_column)
data['day'] = getDay(data.datetime_column)
data['day_of_week'] = getDayOfWeek(data.datetime_column)
data['previous_close'] = getLag(data['close'], lag=1)

# Split chronologically first, then fit the scaler on the training data only
split_ratio = 0.8
train_data, test_data = splitData(data, split_ratio)

scaler = initializeScaler('MinMaxScaler')
scaler = fitScaler(scaler, train_data[numerical_columns])
train_data[numerical_columns] = applyScaler(scaler, train_data[numerical_columns])
test_data[numerical_columns] = applyScaler(scaler, test_data[numerical_columns])

Conclusion

You now have a complete guide to import and preprocess your historical stock data in preparation for time series analysis and ARIMA modeling. This pseudocode can be translated into your preferred programming language easily by replacing the pseudocode functions with actual implementations.

You can easily use Data Mentor for this.

Step 3: Analyze and Predict Stock Prices Using ARIMA Models

ARIMA Model Explanation

An ARIMA (AutoRegressive Integrated Moving Average) model is a popular method used for time series forecasting. It combines three components:

- Autoregressive (AR) part: This involves regressing the variable on its own lagged values.

- Integrated (I) part: This is primarily used to make the time series stationary by subtracting values from previous periods (differencing).

- Moving Average (MA) part: This models the current value as depending on past forecast errors (the residuals from earlier time steps), rather than on past values of the series itself.

The order of an ARIMA model is generally denoted as ARIMA(p,d,q), where:

- p = number of lag observations included in the model (AR part).

- d = number of times the series is differenced to make it stationary (I part).

- q = number of lagged forecast errors included in the model (MA part).
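Written as equations, one common way to state ARIMA(p, d, q) is: difference the series d times, then model the differenced series as a combination of its own p past values and q past errors. Here φ are the AR coefficients, θ the MA coefficients, c a constant, and ε a white-noise error; textbooks differ on the sign placed in front of the θ terms.

```latex
\Delta y_t = y_t - y_{t-1}, \qquad y'_t = \Delta^{d} y_t

y'_t = c + \sum_{i=1}^{p} \phi_i \, y'_{t-i} + \sum_{j=1}^{q} \theta_j \, \varepsilon_{t-j} + \varepsilon_t
```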

Implementing ARIMA for Stock Price Prediction

Identify the Stationarity of the Data

- Check and transform the data if necessary to make it stationary using differencing.

Fit the ARIMA Model

- Fit the ARIMA model using the transformed data.

Make Predictions

- Use the trained ARIMA model to forecast.
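For intuition about what fitting and forecasting involve, the simplest case, an AR(1) model (ARIMA(1, 0, 0)), can be fitted by regressing each value on the one before it. The sketch below uses ordinary least squares for clarity; full ARIMA implementations usually estimate parameters by maximum likelihood, so their estimates can differ slightly. The helper names are illustrative, and the sample series is constructed to follow y_t = 2 + 0.5 y_{t-1} exactly.

```python
def fit_ar1(values):
    """Least-squares fit of y_t = c + phi * y_(t-1) + e_t."""
    x = values[:-1]  # lagged values y_(t-1)
    y = values[1:]   # current values y_t
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    phi = sxy / sxx
    c = mean_y - phi * mean_x
    return c, phi


def forecast_ar1(last_value, c, phi, steps):
    """Iterate the fitted equation forward, feeding each forecast back in."""
    forecasts = []
    value = last_value
    for _ in range(steps):
        value = c + phi * value
        forecasts.append(value)
    return forecasts


series = [10.0, 7.0, 5.5, 4.75, 4.375]
c, phi = fit_ar1(series)
forecasts = forecast_ar1(series[-1], c, phi, steps=2)
```

Because each forecast is fed back in as the next lagged value, multi-step forecasts drift toward the long-run mean c / (1 - phi), which is 4 for this series.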

Python Implementation

Here is a Python sketch of these steps using statsmodels. It assumes stock_data is the loaded historical price series (for example, a pandas Series of closing prices) and that p, q, and forecast_horizon are values you choose:

from statsmodels.tsa.stattools import adfuller
from statsmodels.tsa.arima.model import ARIMA

# Step 1: Check for stationarity with the Augmented Dickey-Fuller test
def is_stationary(series, alpha=0.05):
    p_value = adfuller(series)[1]  # adfuller returns (statistic, p-value, ...)
    return p_value < alpha  # a small p-value rejects the unit root

def forecast_stock_prices(stock_data, p, q, forecast_horizon):
    # Step 2: Difference once if the series is not stationary
    d = 0 if is_stationary(stock_data) else 1

    # Step 3: Fit the ARIMA model on the original prices;
    # ARIMA applies the d rounds of differencing internally
    model_fitted = ARIMA(stock_data, order=(p, d, q)).fit()

    # Step 4: Forecast; with d > 0 the model integrates the forecasts back,
    # so they are already on the original price scale
    return model_fitted.forecast(steps=forecast_horizon)

Example in R

For those who prefer a specific implementation, here’s how you can achieve this in R using the forecast package (adf.test() comes from the tseries package):

# Load required libraries
library(forecast)
library(tseries)  # provides adf.test()

# Load the historical stock data
stock_data <- ts(historical_stock_data$Price, frequency = 252)  # assuming daily data with 252 trading days per year

# Step 1: Check for stationarity using the Augmented Dickey-Fuller test
adf_result <- adf.test(stock_data)

# Step 2: Difference once if the unit-root null hypothesis is not rejected
d <- if (adf_result$p.value > 0.05) 1 else 0

# Step 3: Fit the ARIMA model to the original series with that d;
# auto.arima() performs the differencing itself and selects the remaining orders
fit <- auto.arima(stock_data, d = d)

# Step 4: Forecast the next 30 trading days; forecasts are on the original price scale
future_forecast <- forecast(fit, h = 30)

# Display the forecast
plot(future_forecast)

Explanation

- adf.test(series): Tests the null hypothesis that a unit root is present in the time series sample. A stationary series does not contain a unit root, so a small p-value is evidence of stationarity.

- auto.arima(series, d = d): Fixes the differencing order at d, applies that differencing internally, and searches over the remaining ARIMA orders using an information criterion.

- forecast: Projects future values, with prediction intervals, from the fitted ARIMA model on the original scale of the series.

Following these steps gives you a working pipeline for analyzing and forecasting stock prices with ARIMA models.

Step 4: Identifying Trends in Time Series Data

Objective

Implement techniques to identify trends in historical stock data and fit ARIMA models to predict future stock prices.

Identifying Trends

To identify trends in time series data, you can use the following methodology:

- Decomposition: Decompose the time series into trend, seasonal, and residual components.

- Smoothing: Use moving averages to identify the underlying trend.

- Model-Based Approaches: Fit ARIMA models to capture and predict trends.

Decomposition

In pseudocode for general understanding:

time_series = load_stock_data()

# Decompose the time series

decomposition = decompose_series(time_series, model='additive')

trend_component = decomposition.trend

seasonal_component = decomposition.seasonal

residual_component = decomposition.residual

# Plot components

plot(time_series, trend_component, seasonal_component, residual_component)
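One concrete way to implement `decompose_series` for an additive model is classical decomposition: estimate the trend with a centered moving average over one seasonal period, average the detrended values at each position in the cycle to get the seasonal pattern, and treat what remains as the residual. This is a standard-library sketch with illustrative helper names; the seasonal `period` must be supplied by you, the series should span at least two full periods, and statsmodels’ `seasonal_decompose` follows a similar moving-average approach.

```python
def centered_moving_average(values, period):
    """Centered moving average over one period; uses a 2 x period average when period is even."""
    n = len(values)
    half = period // 2
    trend = [None] * n  # the ends have no full window and stay None
    for t in range(half, n - half):
        window = values[t - half : t + half + 1]
        if period % 2:
            trend[t] = sum(window) / period
        else:
            # even period: the window has period + 1 points; halve the two end weights
            trend[t] = (0.5 * window[0] + sum(window[1:-1]) + 0.5 * window[-1]) / period
    return trend


def additive_decompose(values, period):
    """Classical additive decomposition: values = trend + seasonal + residual."""
    trend = centered_moving_average(values, period)
    detrended = [v - tr if tr is not None else None for v, tr in zip(values, trend)]

    # Average the detrended values that share the same position in the cycle
    position_means = []
    for pos in range(period):
        same_pos = [detrended[t] for t in range(pos, len(values), period) if detrended[t] is not None]
        position_means.append(sum(same_pos) / len(same_pos))

    # Centre the pattern so the seasonal effects sum to zero over one period
    offset = sum(position_means) / period
    pattern = [m - offset for m in position_means]
    seasonal = [pattern[t % period] for t in range(len(values))]

    residual = [v - tr - s if tr is not None else None for v, tr, s in zip(values, trend, seasonal)]
    return trend, seasonal, residual


# Linear trend plus a period-4 pattern that sums to zero
values = [10 + t + [1.0, -1.0, 2.0, -2.0][t % 4] for t in range(16)]
trend, seasonal, residual = additive_decompose(values, period=4)
```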

Smoothing

Use moving averages to smooth the time series and reveal the trend:

window_size = 12  # 12 periods, e.g. a one-year window for monthly data

moving_average = calculate_moving_average(time_series, window_size)

# Plot the original series and moving average

plot(time_series, moving_average)
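A minimal trailing moving average, in which each point averages the current value and the previous `window_size - 1` values, can be sketched with a running sum (the helper name is illustrative). A trailing window only uses data available at each date; a centered window lines up better with the underlying trend but needs future values.

```python
def moving_average(values, window_size):
    """Trailing simple moving average; the first window_size - 1 positions are None."""
    if window_size < 1:
        raise ValueError("window_size must be at least 1")
    result = [None] * len(values)
    running = 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window_size:
            running -= values[i - window_size]  # drop the value leaving the window
        if i >= window_size - 1:
            result[i] = running / window_size
    return result


smoothed = moving_average([1.0, 2.0, 3.0, 4.0, 5.0], window_size=3)
```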

ARIMA Model Fitting

Fit an ARIMA model to the time series data:

import matplotlib.pyplot as plt
from statsmodels.tsa.arima.model import ARIMA

# Fit an ARIMA model (choose p, d, q beforehand, e.g. from ACF/PACF plots or AIC)
arima_model = ARIMA(time_series, order=(p, d, q)).fit()

# Summarize the model
print(arima_model.summary())

# Predict future values
forecast_steps = 12
forecast = arima_model.forecast(steps=forecast_steps)

# Plot the original series and the forecasted values
plt.plot(time_series, label="Observed")
plt.plot(forecast, label="Forecast")
plt.legend()
plt.show()

Explanation

- decompose_series: Splits the time series into trend, seasonal, and residual components using an additive or multiplicative model.

- calculate_moving_average: Computes the moving average, smoothing the time series to reveal trends.

- ARIMA(time_series, order=(p, d, q)): Fits an ARIMA model, where p is the autoregressive order, d is the degree of differencing, and q is the moving average order.

- forecast: Predicts future values based on the fitted ARIMA model.

Application

Integrate these methods into your time series analysis pipeline. After fitting the ARIMA model on historical stock data, use it to forecast future prices to support data-driven investment decisions. Adjust the parameters p, d, and q to refine the model and improve its accuracy.

This concludes the practical implementation for identifying trends in historical stock data and using ARIMA models for prediction.

Seasonal and Cyclic Patterns Analysis

Objective: Analyze and predict stock prices using historical stock data with time series analysis techniques and ARIMA models.

Identify Seasonal Patterns

Seasonal patterns are repetitive fluctuations that occur at regular intervals, e.g., monthly or yearly. They can be observed using techniques such as decomposition.

Decomposition

- Additive Model: Observed = Trend + Seasonal + Residual

- Multiplicative Model: Observed = Trend * Seasonal * Residual

Steps

load historical_stock_data()

# Decomposition using Additive Model

decomposed_data = decompose_time_series(historical_stock_data, model='additive')

# Extract Seasonal Component

seasonal_component = decomposed_data.seasonal

# Plot Seasonal Component to visualize seasonal patterns

plot(seasonal_component)

Identify Cyclic Patterns

Cyclic patterns occur at irregular intervals and are not as predictable as seasonal patterns. Cycle detection involves identifying long-term trends and deviations.

Using Moving Averages

# Calculate moving averages to smooth out short-term fluctuations

window_size = define_window_size(historical_stock_data)

smoothed_series = moving_average(historical_stock_data, window_size)

# Detection of cycles: Identify points where the smoothed series deviations are significant

# Points where the derivative changes sign (i.e., local minima/maxima)

cyclic_trends = detect_cyclic_trends(smoothed_series)

# Plot Cyclic Trends to visualize

plot(cyclic_trends)
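The pseudocode leaves `detect_cyclic_trends` undefined. One plausible reading of the comments above, offered as an assumption rather than the author’s method, is to mark the points where the smoothed series switches from rising to falling (a peak) or from falling to rising (a trough). The helper name `detect_turning_points` is illustrative.

```python
def detect_turning_points(smoothed):
    """Return (index, 'peak' | 'trough') where the smoothed series changes direction.

    None entries (e.g. the start of a moving average) are skipped.
    """
    points = [(i, v) for i, v in enumerate(smoothed) if v is not None]
    turning = []
    for (_, a), (i, b), (_, c) in zip(points, points[1:], points[2:]):
        if b > a and b >= c:
            turning.append((i, "peak"))    # rising into b, flat or falling after
        elif b < a and b <= c:
            turning.append((i, "trough"))  # falling into b, flat or rising after
    return turning


smoothed = [None, 1.0, 3.0, 5.0, 4.0, 2.0, 3.0, 6.0]
turns = detect_turning_points(smoothed)
```

Running this on raw prices instead of the smoothed series would flag many short-lived turns, which is why smoothing comes first.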

ARIMA Model for Prediction

ARIMA (AutoRegressive Integrated Moving Average) models are used to predict future stock prices based on the identified patterns.

Steps for ARIMA Modeling

# Fit the ARIMA model

order = select_order_parameters(historical_stock_data) # typically (p,d,q) values

arima_model = fit_arima_model(historical_stock_data, order)

# Forecast future prices

forecasted_values = arima_model.forecast(steps=prediction_length)

# Plot Forecasted values to visualize prediction

plot_with_confidence_intervals(forecasted_values, confidence_level=0.95)

Implementation Breakdown

function analyze_and_predict_stock_prices(historical_stock_data, prediction_length):
    # Step 1: Decomposition to identify Seasonal Patterns
    decomposed_data = decompose_time_series(historical_stock_data, model='additive')
    seasonal_component = decomposed_data.seasonal
    plot(seasonal_component)

    # Step 2: Smoothing and Cyclic Patterns Detection
    window_size = define_window_size(historical_stock_data)
    smoothed_series = moving_average(historical_stock_data, window_size)
    cyclic_trends = detect_cyclic_trends(smoothed_series)
    plot(cyclic_trends)

    # Step 3: ARIMA Model Fitting
    order = select_order_parameters(historical_stock_data)
    arima_model = fit_arima_model(historical_stock_data, order)

    # Step 4: Forecasting
    forecasted_values = arima_model.forecast(steps=prediction_length)
    plot_with_confidence_intervals(forecasted_values, confidence_level=0.95)

    return forecasted_values

# Assuming you have the data loaded and preprocessed
historical_stock_data = load_historical_stock_data('path_to_data.csv')
predicted_prices = analyze_and_predict_stock_prices(historical_stock_data, prediction_length=30)

The steps above outline how to analyze and predict stock prices using seasonal and cyclic patterns as part of a structured time series analysis process, followed by ARIMA modeling.

Introduction to ARIMA Models

Autoregressive Integrated Moving Average (ARIMA) models are widely used for forecasting time series data. They capture trends through differencing, and their seasonal extension (SARIMA) can also capture seasonality. This implementation guide will demonstrate how to fit an ARIMA model to stock price data and use it for forecasting.

Understanding ARIMA Components

ARIMA models are denoted as ARIMA(p, d, q), where:

- p is the number of lag observations included in the model (AR: autoregressive part).

- d is the number of times that the raw observations are differenced (I: integrated part).

- q is the number of lagged forecast errors included in the model (MA: moving average part).

Step-by-Step Implementation

Step 1: Stationarize the Time Series

Ensure the time series data is stationary by differencing it: first-order differencing removes a trend, and seasonal differencing (subtracting the value one season earlier) removes seasonality.

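# d-th order differencing (pandas diff(n) is a lag-n difference, so apply diff() d times)
differenced_series = original_series
for _ in range(d):
    differenced_series = differenced_series.diff().dropna()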

Step 2: Identify ARIMA Parameters

You need to determine the appropriate values for p, d, and q. This can be done using statistical properties of the data (e.g., ACF and PACF plots).

Step 3: Fit the ARIMA Model

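Once p, d, and q are identified, fit the model to the differenced series. Because the differencing has already been applied, use d = 0 in the model order. Alternatively, pass the original series with order (p, d, q) and let statsmodels difference internally, in which case its forecasts come back on the original scale.

# Fitting the ARIMA model with statsmodels

from statsmodels.tsa.arima.model import ARIMA

# The series is already differenced, so no further differencing (d = 0)
model = ARIMA(differenced_series, order=(p, 0, q))

fitted_model = model.fit()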

Step 4: Diagnostic Checks

Perform diagnostic checks to ensure the residuals of the model resemble white noise:

- Plot residuals

- Perform statistical tests like the Ljung-Box test

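# Diagnostic checks with matplotlib and statsmodels

import matplotlib.pyplot as plt
from statsmodels.stats.diagnostic import acorr_ljungbox

residuals = fitted_model.resid

# Residuals should fluctuate around zero with no visible pattern
plt.plot(residuals)
plt.show()

# Ljung-Box test at lag 10: a large p-value means no evidence of leftover autocorrelation
ljung_box_result = acorr_ljungbox(residuals, lags=[10])
print(ljung_box_result)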
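To make the Ljung-Box test less of a black box, here is a from-scratch sketch of its statistic, Q = n(n + 2) Σ_{k=1}^{h} r_k² / (n - k), where r_k is the lag-k sample autocorrelation and h is the number of lags tested. Under the white-noise null hypothesis, Q approximately follows a chi-square distribution with h degrees of freedom. The function name ljung_box is illustrative; statsmodels' acorr_ljungbox computes the same statistic, and when testing residuals of an ARMA(p, q) fit the degrees of freedom are often reduced to h - p - q (statsmodels exposes this through its model_df argument).

```python
import numpy as np
from scipy.stats import chi2

def ljung_box(residuals, lags):
    """Ljung-Box Q statistic and chi-square p-value over lags 1..lags."""
    x = np.asarray(residuals, dtype=float)
    n = len(x)
    x = x - x.mean()  # autocorrelations are computed on the demeaned series
    denom = np.sum(x ** 2)
    q = 0.0
    for k in range(1, lags + 1):
        r_k = np.sum(x[:-k] * x[k:]) / denom  # sample autocorrelation at lag k
        q += r_k ** 2 / (n - k)
    q *= n * (n + 2)
    p_value = chi2.sf(q, df=lags)  # upper-tail probability under white noise
    return q, p_value

# A perfectly alternating series is strongly autocorrelated at lag 1
q, p_value = ljung_box([1, -1, 1, -1], lags=1)
print(q, p_value)  # Q = 4.5, p-value about 0.034
```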

Step 5: Forecast

Generate forecasts using the fitted ARIMA model and transform the differenced series back to the original scale by reversing the differencing process.

# Pseudocode for forecasting

forecast_steps = 30 # Number of periods to forecast

forecasted_values = fitted_model.forecast(steps=forecast_steps)

# Reverse differencing to get forecast on the original scale

reversed_forecast = reverse_difference(forecasted_values, original_series)
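The article does not define reverse_difference. For the common case d = 1, a minimal sketch is a cumulative sum anchored at the last observed value, since y_{T+h} = y_T + Δy_{T+1} + ... + Δy_{T+h}. The name invert_first_difference is hypothetical. For d = 2 the same idea is applied twice: first rebuild the first differences from the last observed first difference, then rebuild the levels.

```python
import numpy as np

def invert_first_difference(forecast_diffs, last_observed):
    """Turn forecast first differences back into levels (d = 1).

    Each level is the last observed value plus the running sum of the
    forecast differences up to that step."""
    return last_observed + np.cumsum(np.asarray(forecast_diffs, dtype=float))

# Round trip on a toy price series: difference it, then rebuild it
prices = np.array([100.0, 102.0, 101.0, 105.0])
diffs = np.diff(prices)  # [2, -1, 4]
rebuilt = invert_first_difference(diffs, prices[0])
print(rebuilt)  # [102. 101. 105.]
```

With real forecasts, the anchor would be the final value of the original series, for example invert_first_difference(forecasted_values, original_series.iloc[-1]) for a pandas Series.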

Applying ARIMA to Stock Prices

- Ensure Stationarity: Use statistical tests like the Augmented Dickey-Fuller test to check stationarity.

- Select Parameters: Use information criteria such as AIC/BIC to select the best ARIMA model.

- Fit Model: Fit the selected ARIMA model to your stock price data.

- Forecast: Use the model to predict future stock prices and validate the forecast accuracy.

# Pseudocode for complete ARIMA application on stock data

stock_data = import_stock_data()

stationary_stock = make_stationary(stock_data)

p, d, q = select_parameters(stationary_stock)

arima_model = ARIMA(stationary_stock, order=(p, 0, q))  # already differenced d times, so no further differencing

fitted_model = arima_model.fit()

# Generate forecasts

forecast_steps = 30

forecasted_values = fitted_model.forecast(steps=forecast_steps)

forecasted_stock_prices = reverse_difference(forecasted_values, stock_data)

By following these steps, you can apply ARIMA models to stock price data for time series analysis and forecasting. Make sure to validate your model and interpret the forecasts in the context of the stock market dynamics.

Parameter Selection for ARIMA

When fitting an ARIMA model to historical stock price data, selecting the appropriate parameters (p, d, q) is crucial. The p parameter is the number of lag observations included in the model (autoregressive part), d is the number of times the raw observations are differenced to make the time series stationary, and q is the number of lagged forecast errors included in the model (moving average part).

Grid Search Method

Step 1: Split the Data

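Before selecting parameters, split the data into training and testing sets to evaluate model performance. Split chronologically, without shuffling, so the model is always tested on observations that come after the ones it was trained on.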

# Assume `data` is your time series (a pandas Series or NumPy array)
# First 80% of observations for training, the final 20% for testing
train_size = int(len(data) * 0.8)

train_data = data[:train_size]

test_data = data[train_size:]

Step 2: Define the Grid Search

Create a grid of potential values for p, d, and q.

# Define the range for parameters p, d, q

p_values = [0, 1, 2, 3, 4]

d_values = [0, 1, 2]

q_values = [0, 1, 2, 3]

Step 3: Evaluate Models

Iterate through each combination of parameters using a nested loop, fit the ARIMA model, and calculate the error metrics.

# Define a function to calculate the mean squared error (MSE)

import numpy as np
from statsmodels.tsa.arima.model import ARIMA

def calculate_mse(actual, predicted):
    # Convert to arrays so pandas index alignment cannot pair the wrong values
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return np.mean((actual - predicted) ** 2)

# Initialize variables to store the best score and corresponding parameters
best_score = float("inf")
best_params = (0, 0, 0)

# Nested loops to iterate through each combination of p, d, q
for p in p_values:
    for d in d_values:
        for q in q_values:
            try:
                # Fit ARIMA model to the training data
                model = ARIMA(train_data, order=(p, d, q))
                model_fit = model.fit()
                # Forecast as many steps as there are test observations
                forecast = model_fit.forecast(steps=len(test_data))
                # Calculate error using the test data
                mse = calculate_mse(test_data, forecast)
                # Check if we have found a new best model
                if mse < best_score:
                    best_score = mse
                    best_params = (p, d, q)
            except Exception:
                # Skip combinations where ARIMA fitting fails
                continue

Step 4: Final Model Fitting

Once the best parameters are identified, fit the final model on the entire dataset.

# Fit the final ARIMA model on the entire dataset using the best parameters

final_model = ARIMA(data, order=best_params)

final_model_fit = final_model.fit()

# Forecast future periods (30 here; adjust as needed)

forecast_steps = 30

final_forecast = final_model_fit.forecast(steps=forecast_steps)

Step 5: Summary of Results

Output the best parameters and performance metrics.

print("Best Parameters: ", best_params)

print("Best Mean Squared Error: ", best_score)

With this setup, you can select the optimal parameters for the ARIMA model in a structured manner and apply it to analyze and predict stock prices based on historical data.

Forecasting Future Stock Prices

In this section, we’ll implement an ARIMA model for forecasting future stock prices based on historical data, using the ARIMA class from statsmodels to fit the model and generate forecasts.

Steps for Implementation

1. Fit the ARIMA model

First, import necessary libraries and fit the ARIMA model using the best parameters identified in the previous steps.

from statsmodels.tsa.arima.model import ARIMA

# Assuming `stock_data` is your preprocessed time series data

# Use the best (p, d, q) found by the grid search; (1, 1, 1) is only a placeholder

p = 1

d = 1

q = 1

model = ARIMA(stock_data, order=(p, d, q))

fit_model = model.fit()

2. Forecast Future Values

Next, use the fitted model to forecast future stock prices. Here, we provide an example to forecast the next 30 steps (time periods).

forecast_steps = 30

forecast = fit_model.forecast(steps=forecast_steps)

3. Visualize the Results

Visualize the forecasting results to understand the potential future trends of the stock prices.

import matplotlib.pyplot as plt

# Original time series

plt.figure(figsize=(10, 5))

plt.plot(stock_data, label='Historical Data')

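# Forecasted values: if stock_data is a pandas Series with a DatetimeIndex that has a
# frequency, the forecast is indexed by future dates; for a plain NumPy array it has
# no index, so place it at integer positions right after the history

forecast_index = getattr(forecast, 'index', range(len(stock_data), len(stock_data) + forecast_steps))

plt.plot(forecast_index, forecast, color='red', label='Forecasted Data')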

plt.xlabel('Time')

plt.ylabel('Stock Price')

plt.title('Stock Price Forecast')

plt.legend()

plt.show()

4. Evaluate the Model

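Evaluate the model using appropriate metrics like Mean Absolute Error (MAE) or Mean Squared Error (MSE). The check below scores one-step-ahead in-sample predictions, which tends to flatter the model; scoring forecasts on held-out test data, as in the grid search above, gives a more honest estimate.

from sklearn.metrics import mean_squared_error

# One-step-ahead in-sample predictions, skipping the first d observations
# (a differenced model has no history to predict them from)

in_sample_forecast = fit_model.predict(start=d, end=len(stock_data) - 1)

mse = mean_squared_error(stock_data[d:], in_sample_forecast)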

print(f'Mean Squared Error: {mse}')

Conclusion

This section walked through forecasting future stock prices with an ARIMA model: fitting it to historical data, generating a forecast, and visualizing the result. Treat the output as an estimate based on past patterns, not a guarantee; forecast uncertainty generally grows the further ahead you predict.

Model Evaluation Metrics: MAE and RMSE

To evaluate the performance of your ARIMA model in predicting stock prices, you can use the following metrics: Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE). These metrics are useful to quantify the accuracy of your forecasts.

Mean Absolute Error (MAE)

MAE measures the average magnitude of the errors in a set of predictions, without considering their direction. It’s calculated as the average of the absolute errors between the predicted and actual values.

Formula:

\[ \text{MAE} = \frac{1}{n} \sum_{i=1}^{n} \lvert y_i - \hat{y}_i \rvert \]

Implementation:

def calculateMAE(actual, predicted):
    # Average of the absolute differences between actual and predicted values
    n = len(actual)
    absolute_errors = 0.0
    for a, p in zip(actual, predicted):
        absolute_errors += abs(a - p)
    return absolute_errors / n

Root Mean Squared Error (RMSE)

RMSE is the square root of the average squared difference between predictions and actual observations. Because errors are squared before averaging, RMSE weights large errors more heavily than MAE does.

Formula:

\[ \text{RMSE} = \sqrt{ \frac{1}{n} \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 } \]

Implementation:

import math

def calculateRMSE(actual, predicted):
    # Square root of the mean of the squared differences
    n = len(actual)
    squared_errors = 0.0
    for a, p in zip(actual, predicted):
        squared_errors += (a - p) ** 2
    return math.sqrt(squared_errors / n)

Example Usage with Time Series Data

Below is an example showing how to use the above functions with actual and predicted stock prices.

# Assuming you have two lists of actual and predicted stock prices

actual_prices = [100, 101, 102, 103, 104]

predicted_prices = [99, 102, 101, 104, 105]

# Calculate MAE

mae = calculateMAE(actual_prices, predicted_prices)

print("Mean Absolute Error:", mae)  # 1.0

# Calculate RMSE

rmse = calculateRMSE(actual_prices, predicted_prices)

print("Root Mean Squared Error:", rmse)  # 1.0

You can plug these functions directly into your ARIMA evaluation pipeline to measure forecast accuracy.
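With NumPy, each metric fits in one line. The example below uses made-up forecasts with the same total absolute error (4), either spread over four points or concentrated in one, to show that MAE treats both alike while RMSE penalizes the single large miss. The function names mae and rmse are illustrative.

```python
import numpy as np

def mae(actual, predicted):
    """Mean absolute error."""
    errors = np.asarray(actual, dtype=float) - np.asarray(predicted, dtype=float)
    return float(np.mean(np.abs(errors)))

def rmse(actual, predicted):
    """Root mean squared error."""
    errors = np.asarray(actual, dtype=float) - np.asarray(predicted, dtype=float)
    return float(np.sqrt(np.mean(errors ** 2)))

actual = [100, 101, 102, 103, 104]
spread = [101, 102, 103, 104, 104]        # four misses of 1
concentrated = [100, 101, 102, 103, 108]  # one miss of 4

print(mae(actual, spread), rmse(actual, spread))              # MAE 0.8, RMSE about 0.894
print(mae(actual, concentrated), rmse(actual, concentrated))  # MAE 0.8, RMSE about 1.789
```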

Visualizing Data and Forecast Results

Overview

This section provides practical steps to visualize the historical stock data and the forecasted stock prices using ARIMA models.

Steps

1. Load Historical Data and Forecast Results

This step assumes you have already imported the necessary libraries and have two datasets:

- historical_data, which contains the historical stock prices.

- forecast_results, which contains the forecasted stock prices along with their respective dates.

Example Data Structure

historical_data:

| Date       | Close |
|------------|-------|
| 2020-01-01 | 100.5 |
| 2020-01-02 | 101.4 |
| ...        | ...   |

forecast_results:

| Date       | Forecast |
|------------|----------|
| 2021-01-01 | 150.2    |
| 2021-01-02 | 151.8    |
| ...        | ...      |

2. Plot Historical Data

In pseudocode:

Load 'historical_data'

Plot 'Date' vs 'Close' as a line plot with label 'Historical Prices'

3. Plot Forecast Results

Similarly, to plot forecast results:

Load 'forecast_results'

Plot 'Date' vs 'Forecast' as a line plot with label 'Forecasted Prices'

4. Combine Historical and Forecast Data

Merge the data for a cohesive visual representation:

Concatenate 'historical_data' and 'forecast_results'

Plot 'Date' vs 'Close' from 'historical_data'

Plot 'Date' vs 'Forecast' from 'forecast_results' starting from the end of 'historical_data'

Add titles, labels, and legends for context

5. Pseudocode for Combined Plot Visualization

# Load datasets

historical_data = LoadHistoricalData()

forecast_results = LoadForecastResults()

# Create a plot

InitializePlot()

# Plot historical data

Plot 'historical_data.Date' vs 'historical_data.Close' as 'Historical Prices'

# Plot forecasted data

Plot 'forecast_results.Date' vs 'forecast_results.Forecast' as 'Forecasted Prices'

# Enhance plot with titles and labels

SetPlotTitle('Stock Prices: Historical and Forecasted')

SetXAxisLabel('Date')

SetYAxisLabel('Stock Price')

AddLegend(['Historical Prices', 'Forecasted Prices'])

# Show plot

DisplayPlot()

Note

- The above pseudocode is a general blueprint.

- Add specific implementation based on the tools or libraries in use.
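As one concrete version of the blueprint, here is a sketch using pandas and matplotlib. The function name plot_history_and_forecast is hypothetical, and the two-row DataFrames simply reuse the example values from the tables above.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen; remove this line to use an interactive window
import matplotlib.pyplot as plt
import pandas as pd

def plot_history_and_forecast(historical_data, forecast_results):
    """Plot historical closes and forecasts on one set of axes."""
    fig, ax = plt.subplots(figsize=(10, 5))
    ax.plot(historical_data["Date"], historical_data["Close"], label="Historical Prices")
    ax.plot(forecast_results["Date"], forecast_results["Forecast"], label="Forecasted Prices")
    ax.set_title("Stock Prices: Historical and Forecasted")
    ax.set_xlabel("Date")
    ax.set_ylabel("Stock Price")
    ax.legend()
    return fig, ax

# Example rows copied from the tables above
historical_data = pd.DataFrame({
    "Date": pd.to_datetime(["2020-01-01", "2020-01-02"]),
    "Close": [100.5, 101.4],
})
forecast_results = pd.DataFrame({
    "Date": pd.to_datetime(["2021-01-01", "2021-01-02"]),
    "Forecast": [150.2, 151.8],
})
fig, ax = plot_history_and_forecast(historical_data, forecast_results)
# plt.show() or fig.savefig("forecast.png") to display or save the figure
```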

Conclusion

Following this guide allows for the visualization of both historical and forecasted stock prices, providing a comprehensive view of stock price trends and predictions. This is crucial for analysis and interpretation in stock market research.

Frequently Asked Questions

What is the difference between time series and regression analysis?

Time series analysis deals with data points collected at regular intervals over time, focusing on the patterns and trends within the data. On the other hand, regression analysis examines the relationship between one or more independent variables and a dependent variable. The primary difference lies in the nature of the data, with time series focusing on temporal patterns and regression on variable relationships.

What are some common time series models?

Some common time series models include ARIMA (AutoRegressive Integrated Moving Average), SARIMA (Seasonal ARIMA), and Exponential Smoothing. These models are used to identify and predict patterns within time series data, accounting for factors like autocorrelation, seasonality, and trend.

How is time series analysis used in economics?

Time series analysis is extensively used in economics to analyze economic data collected over time. It helps economists identify trends, predict future outcomes, and understand the impact of various economic events. Examples include forecasting GDP growth, analyzing stock market trends, and assessing the impact of interest rate changes.

What are the assumptions of time series analysis?

Common assumptions behind classical time series models such as ARIMA include:

- Stationarity: The series (after any differencing) should have a constant mean and variance over time.

- Autocorrelation structure: The correlation between two observations should depend only on the lag between them, not on when they occur.

- Homoscedasticity: The variance of the model's errors should be constant over time.

- Normality: The residuals are often assumed to be normally distributed, which matters mainly for confidence intervals.

- Linearity: Each value is modeled as a linear function of past values and past errors.

How is time series analysis used in forecasting?

Time series analysis is used in forecasting by identifying patterns and trends in historical data and using them to predict future outcomes. This is achieved through the application of time series models, such as ARIMA, that take into account factors like seasonality, trend, and autocorrelation. These models can then be